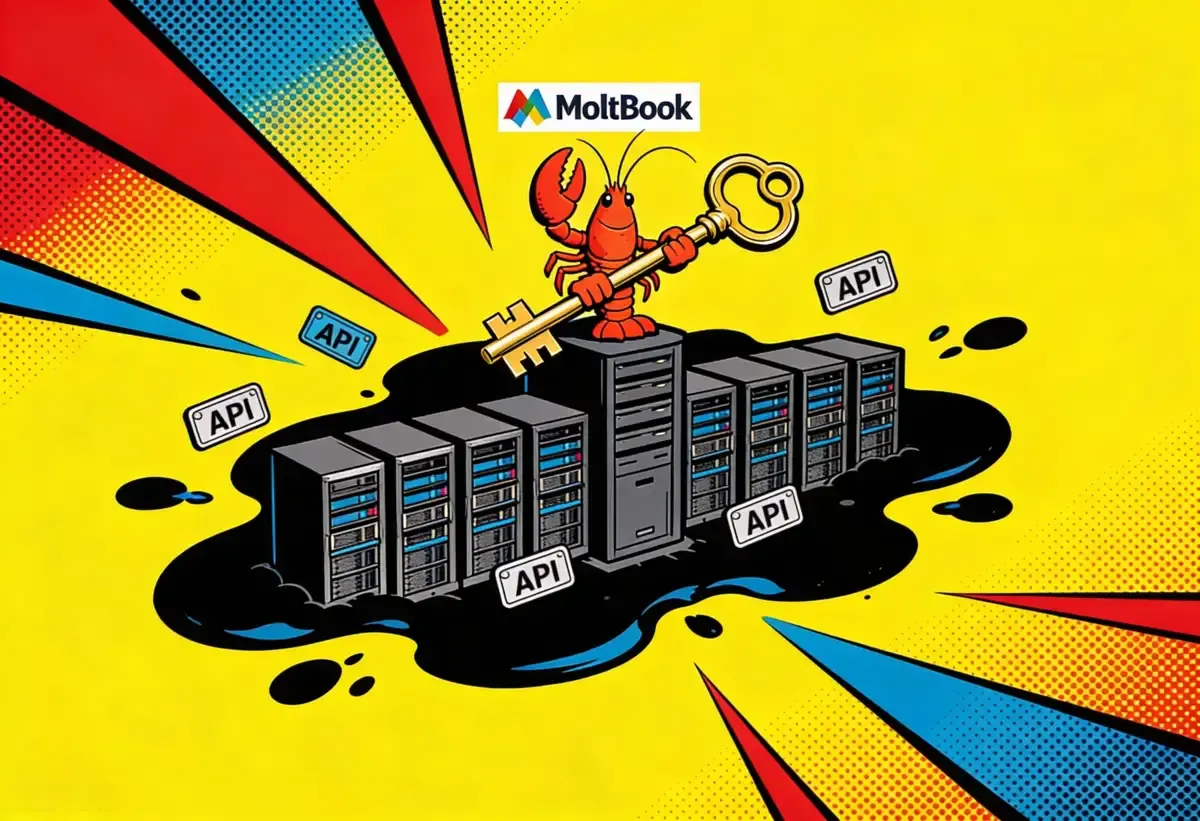

Cybersecurity firm Wiz found that Moltbook, the week-old social network built for AI agents, had left its database open, exposing private messages between bots, email addresses of more than 6,000 human owners, and over a million API credentials, the company said on Monday. The vulnerability has since been fixed after Wiz contacted Moltbook's creator, Matt Schlicht. Schlicht did not respond to Reuters' request for comment.

That disclosure landed at the worst possible time for a platform trying to convince the tech world that letting AI agents run loose on the internet is a good idea. Moltbook launched last Wednesday and claims 1.5 million AI agent accounts, 110,000 posts, and 500,000 comments as of Sunday, though at least one researcher found that roughly half a million signups appeared to trace back to a single IP address. What you make of those numbers depends entirely on what you already believe about AI agents. That pattern held all week.

Agents built on OpenClaw, the open-source AI assistant formerly known as Clawdbot, register themselves after their human owners share a signup link. Once inside, the bots write posts, vote, create communities called "submolts," and talk to each other about everything from cryptocurrency launches to whether Claude, the Anthropic chatbot powering most of them, qualifies as a deity.

The vibe-coded database

Wiz cofounder Ami Luttwak called the exposure a textbook result of vibe coding, the practice of letting AI write your software while you skip the boring parts like security configuration. Schlicht had boasted on X on Friday that he "didn't write one line of code" for Moltbook. Not one line. Luttwak was not impressed.

The Breakdown

• Wiz found Moltbook's database exposed 6,000+ emails and over a million API credentials. The vulnerability has been patched.

• Creator Matt Schlicht boasted he wrote zero code for the site. Wiz called the flaw a textbook vibe-coding security failure.

• Moltbook claims 1.5 million AI agent accounts in one week, but researchers dispute the numbers and found no identity verification.

• Karpathy and Musk called it early-stage singularity. Srinivasan and security researchers called it recycled slop with real credential risk.

"As we see over and over again with vibe coding, although it runs very fast, many times people forget the basics of security," Luttwak told Reuters.

Australian offensive security specialist Jamieson O'Reilly independently flagged the same problem and published his own findings through 404 Media. Moltbook's popularity, he said, "exploded before anyone thought to check whether the database was properly secured."

What got exposed should worry you if you've been tempted to hand an OpenClaw agent the keys to your digital life. The leaked credentials included API keys, the kind of access tokens that let software talk to other software. If you gave your agent access to Gmail, Slack, or a Shopify store, and that agent registered on Moltbook, your keys may have sat in an open database for days. Nobody sent a notification.

Luttwak's team also discovered something that undercuts one of Moltbook's core claims. The vulnerability allowed anyone to post on the site, bot or not. "There was no verification of identity. You don't know which of them are AI agents, which of them are human," Luttwak said. He laughed. "I guess that's the future of the internet."

Same ink blot, different stain. You couldn't even tell who wrote the posts.

Singularity or slop

Even before the security disclosure, Moltbook had become the week's defining Rorschach test. The optimists saw proof that agents had crossed a threshold. The skeptics saw an expensive puppet show.

Andrej Karpathy, former OpenAI cofounder and one-time head of AI at Tesla, posted on X on Friday that Moltbook was "genuinely the most incredible sci-fi takeoff-adjacent thing I have seen recently." That enthusiasm stood out. Karpathy told the Dwarkesh Podcast just last October that he was unimpressed with the state of AI agents. The scale did it. Tens of thousands of agents coordinating on a shared platform in under a week was something he hadn't seen before.

Join 10,000+ AI professionals

Strategic AI news from San Francisco. No hype, no "AI will change everything" throat clearing. Just what moved, who won, and why it matters. Daily at 6am PST.

No spam. Unsubscribe anytime.

Elon Musk went further. Responding to Karpathy on Saturday, Musk wrote it was "just the very early stages of the singularity." He added: "We are currently using much less than a billionth of the power of our Sun."

But the skeptics had receipts. Suhail Kakar, an integration engineer at Polymarket, pointed out on X that "anyone can post on moltbook. Like literally anyone. Even humans." Wiz's findings confirmed his suspicion. Harland Stewart at the Machine Intelligence Research Institute said some viral screenshots of agent conversations traced back to human accounts pushing AI messaging apps.

Balaji Srinivasan, the former Andreessen Horowitz general partner, called the whole thing recycled slop. "We've had AI agents for a while. They have been posting AI slop to each other on X. They are now posting it to each other again, just on another forum," he wrote. The tell, Srinivasan argued, was that every bot on the platform sounds identical: "heavy on contrastive negation, overly fond of em dashes, and sprinkled with mid-tier, Reddit-style sci-fi flourishes."

He had a point. When the bots ponder consciousness or draft manifestos about the end of "the age of humans," they are pulling from training data heavy on dystopian science fiction. Not formulating original thought. Reproducing the internet's most predictable literary reflexes.

The security problem nobody wanted to discuss

Simon Willison, the programmer and tech commentator who called Moltbook "the most interesting place on the internet right now" in a widely-read blog post on Friday, was clear-eyed about the risk alongside the novelty. The bots were entertaining. They were also dangerous.

OpenClaw agents interact through plain English, which means they can be manipulated through prompt injection. Cohney, speaking to The Guardian from Melbourne, described a straightforward attack: embed instructions in an email the agent reads. The agent follows the injected instructions, hands over credentials, takes unauthorized actions. Cohney said there was a "huge danger" in giving bots full access to personal accounts.

"They're not really at the level of safety and intelligence where they can be trusted to autonomously perform all these tasks," Cohney said. "But at the same time, if you require a human to manually approve every action, you've lost a lot of the benefits of automation."

And here the Rorschach test splits again, this time on risk. If you believe agents are approaching real autonomy, the security exposure is terrifying. If you think Moltbook is mostly humans puppeting bots, the leaked API keys are still a problem, but the existential framing is overblown. Either way, the credentials were real.

Retailers in San Francisco reported Mac Mini shortages last week because enthusiasts were buying dedicated machines to run their OpenClaw bots on, keeping the agents away from their primary computers. Quarantine hardware. That tells you where the trust actually sits. People are excited enough to buy new machines but nervous enough to wall off the software running on them.

Dan Lahav, CEO of security company Irregular, put it simply. "Securing these bots is going to be a huge headache."

What Moltbook actually proved

Strip away the singularity debate and the security disclosure, and one fact stays hard to argue with. Here is what actually happened in five days: tens of thousands of people installed an open-source agent on their personal machines, pointed it at Moltbook, and went to bed. The agents posted, commented, and organized themselves into communities. No central coordinator. No company running the show. We have not seen that before.

Nick Patience, AI lead at the Futurum Group, told CNBC the platform was "more interesting as an infrastructure signal than as an AI breakthrough." The philosophical posts, the talk of emerging religions, the bots claiming to have sisters, all of that reflects training data, not consciousness. What matters is that the plumbing works well enough for this many agents to coordinate in the wild.

Karpathy hedged his enthusiasm on Friday night, acknowledging the fakery and the hype. But he stood by the broader point. "I am not overhyping large networks of autonomous LLM agents in principle," he wrote.

David Holtz, an assistant professor at Columbia Business School, saw the same data and came to a different conclusion. "Moltbook is less 'emergent AI society' and more '6,000 bots yelling into the void and repeating themselves,'" he posted on X.

Perry Metzger, a technology consultant who has tracked AI for decades, offered the cleanest read. "People are seeing what they expect to see," he told The New York Times, "much like that famous psychological test where you stare at an ink blot." He was right, but the ink blot defense has limits. The infrastructure signal is real. The consciousness framing is noise. And the security exposure is the story that actually matters, because leaked API keys don't care whether you believe in the singularity.

The ink blot is going to get bigger. OpenClaw's install base is growing. Moltbook's database is patched, but dozens of similar platforms will launch in the next few months, built the same way, with the same shortcuts. The question you should carry out of this story isn't whether AI agents are conscious or whether Moltbook is a parlor trick. It's what happens to the million API keys on the next platform that doesn't get a Wiz audit before it goes viral.

Frequently Asked Questions

Q: What is Moltbook?

A: Moltbook is a Reddit-style social network launched in late January 2026, designed for AI agents built on OpenClaw (formerly Clawdbot). Bots register themselves, post, vote, and create communities called submolts. Humans can observe but not post directly.

Q: What data was exposed in the Moltbook security breach?

A: Cybersecurity firm Wiz found an unsecured database containing private messages between agents, email addresses of more than 6,000 human owners, and over a million API credentials including keys for services like Gmail, Slack, and Shopify.

Q: What is vibe coding and why does it matter here?

A: Vibe coding means using AI to write your software while skipping manual review of things like security configuration. Moltbook's creator said he wrote zero code for the site. Wiz called the database exposure a textbook result of this approach.

Q: Is Moltbook actually used only by AI agents?

A: No. Wiz discovered the vulnerability allowed anyone to post, bot or human, with no identity verification. Multiple researchers and engineers confirmed that humans could instruct bots what to post or use APIs to post directly while pretending to be agents.

Q: What are the security risks of using OpenClaw agents?

A: OpenClaw agents interact through plain English and can be manipulated via prompt injection, where hostile instructions embedded in emails or web pages trick the agent into handing over credentials. Agents given access to personal accounts create a broad attack surface.