💡 TL;DR - The 30-Second Version

💰 Nvidia commits up to $100 billion to fund OpenAI's data center expansion, with money flowing back through GPU purchases in a massive circular financing loop.

🔄 Every $10 billion Nvidia invests in OpenAI generates roughly $35 billion in GPU sales, creating a self-reinforcing cycle worth about 27% of Nvidia's annual revenue.

📊 The deal covers 10 gigawatts of computing power requiring 4-5 million GPUs—equivalent to Nvidia's entire annual shipment volume.

⚠️ Analysts compare this to dot-com era vendor financing when Cisco and Nortel funded customers who then went bust, leaving bad debt.

🏭 AI development now requires nation-state-scale capital, concentrating power among well-funded incumbents and blocking new competitors.

🚀 The arrangement transforms suppliers into lenders while amplifying downside risk if AI demand fails to match infrastructure buildout.

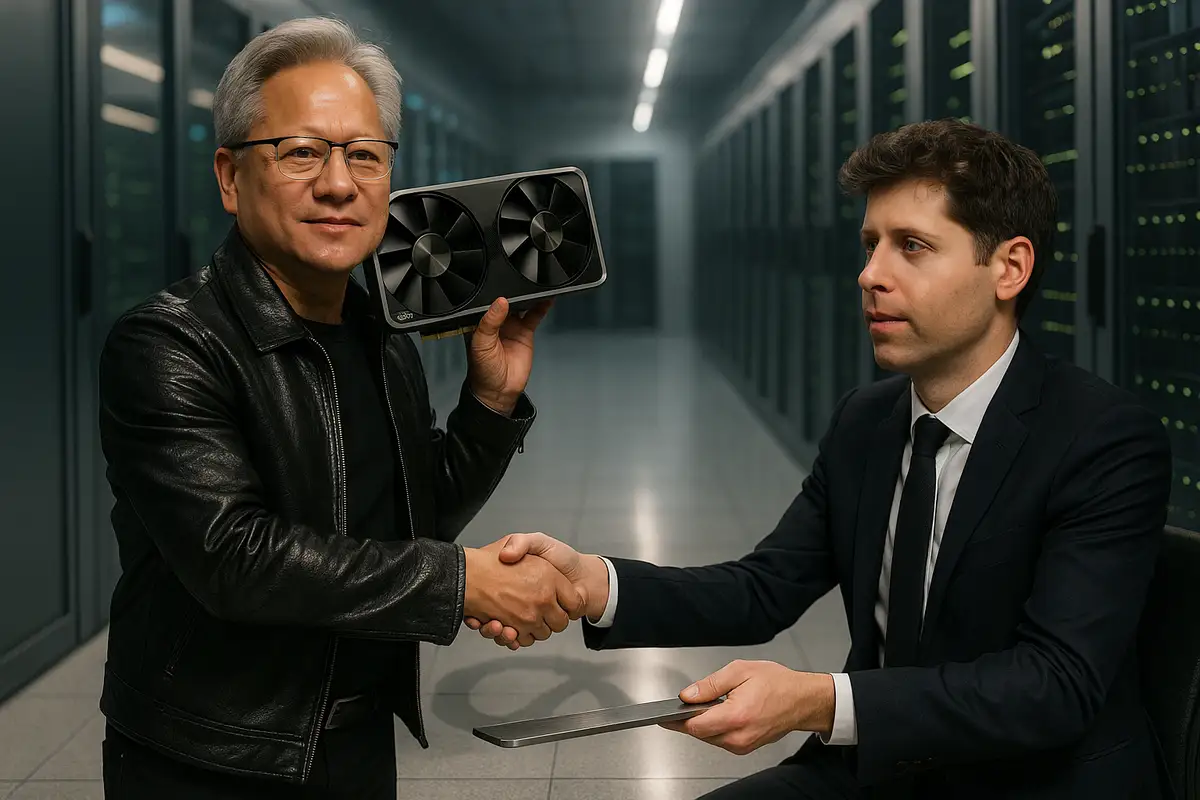

When vendor financing meets AI infrastructure. The circular economy that’s reshaping tech

A $100 billion vendor-financing pact just landed on the AI boom’s most critical relationship—and it raises hard questions about demand, dependency, and déjà vu. Nvidia’s plan to bankroll OpenAI’s data-center expansion, much of which will buy Nvidia hardware, is the clearest case yet of what analysts dub “circular financing.”

What’s actually new

Nvidia and OpenAI signed a letter of intent to deploy at least 10 gigawatts of Nvidia systems across partner clouds. The first tranche of Nvidia’s investment, which could total up to $100 billion, arrives as soon as the initial gigawatt comes online, with additional funding tied to new capacity. That’s an audacious ramp. It matches the scale of a hyperscaler, not a startup.
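To put the 10-gigawatt figure in per-chip terms, here is a back-of-envelope sketch that divides it by the TL;DR's estimate of 4-5 million GPUs. Both inputs come from this article. Reading the result as an all-in facility budget (cooling, networking, and host servers included) is an assumption, not something the deal terms state.

```python
# Implied average power budget per GPU, using only figures quoted in this article.
TOTAL_POWER_WATTS = 10e9                    # 10 gigawatts (letter of intent)
GPU_COUNT_RANGE = (4_000_000, 5_000_000)    # 4-5 million GPUs (TL;DR estimate)


def watts_per_gpu(total_watts: float, gpu_count: int) -> float:
    """Average facility power available per GPU, in watts."""
    return total_watts / gpu_count


for count in GPU_COUNT_RANGE:
    per_gpu = watts_per_gpu(TOTAL_POWER_WATTS, count)
    print(f"{count:,} GPUs -> {per_gpu:,.0f} W per GPU")
```

The range works out to roughly 2 to 2.5 kilowatts per GPU, which suggests the headline number describes whole data centers rather than chips alone.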

The structure isn’t novel—Nvidia has long invested in customers—but the magnitude is. NewStreet Research estimates that every $10 billion Nvidia advances to OpenAI could translate into roughly $35 billion of GPU purchases or lease payments. If correct, the flywheel is lucrative. Until it isn’t.
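The arithmetic behind that flywheel is simple enough to sketch. The snippet below uses NewStreet's ratio and the TL;DR's 27% figure. Two assumptions are mine, not the source's: that the 27% refers to the $35 billion as a share of Nvidia's annual revenue, and that the ratio holds linearly across the full $100 billion commitment.

```python
# Back-of-envelope check on the circular-financing multiplier (billions of USD).
INVESTMENT_BN = 10.0        # NewStreet: per $10 billion advanced to OpenAI
GPU_SALES_BN = 35.0         # NewStreet: roughly $35 billion of purchases or leases
REVENUE_SHARE = 0.27        # TL;DR: "27% of annual revenue"
MAX_COMMITMENT_BN = 100.0   # headline cap on Nvidia's investment

# Dollars of GPU revenue per dollar invested.
multiplier = GPU_SALES_BN / INVESTMENT_BN

# ASSUMPTION: the 27% is $35 billion divided by Nvidia's annual revenue.
implied_revenue_bn = GPU_SALES_BN / REVENUE_SHARE

# ASSUMPTION: the ratio scales linearly to the full commitment.
full_commitment_sales_bn = multiplier * MAX_COMMITMENT_BN

print(f"Multiplier: {multiplier:.1f}x")
print(f"Implied annual revenue base: ${implied_revenue_bn:.0f} billion")
print(f"GPU sales at full commitment: ${full_commitment_sales_bn:.0f} billion")
```

Under those assumptions the multiplier is 3.5x, the implied revenue base is about $130 billion, and a fully drawn commitment would correspond to about $350 billion in GPU sales. The linear extrapolation is the weakest link; nothing in the source says later tranches convert at the same rate.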

How the loop works

OpenAI has separately committed to a $300 billion compute agreement with Oracle. Oracle, in turn, buys Nvidia chips to fulfill those commitments. Meanwhile, Nvidia holds an equity stake in CoreWeave, a neo-cloud that sells capacity to OpenAI and buys GPUs from Nvidia. That web tightens with a $6.3 billion arrangement under which Nvidia will purchase CoreWeave capacity if customers don’t. It’s a safety net that doubles as demand insurance.

CoreWeave now operates more than 250,000 GPUs—largely H100-class parts—with public filings showing heavy customer concentration and significant debt. The money goes around. So do the risks.
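One way to see why the word "circular" fits is to treat the relationships above as a directed graph of payments and look for paths that lead back to Nvidia. The sketch below is illustrative only: the edges are a simplified reading of the arrangements described in this section, and `FLOWS` and `loops_from` are hypothetical names, not anything from the deal documents.

```python
from typing import Dict, List

# Directed edges read "payer -> payee", based on the relationships described above.
FLOWS: Dict[str, List[str]] = {
    "Nvidia": ["OpenAI", "CoreWeave"],   # investment; equity stake and capacity backstop
    "OpenAI": ["Oracle", "CoreWeave"],   # compute agreement; purchased capacity
    "Oracle": ["Nvidia"],                # chip purchases
    "CoreWeave": ["Nvidia"],             # GPU purchases
}


def loops_from(start: str, flows: Dict[str, List[str]]) -> List[List[str]]:
    """Return every simple cycle that starts and ends at `start` (depth-first search)."""
    found: List[List[str]] = []

    def walk(node: str, path: List[str]) -> None:
        for payee in flows.get(node, []):
            if payee == start:
                found.append(path + [payee])      # money is back where it began
            elif payee not in path:
                walk(payee, path + [payee])       # keep following the money

    walk(start, [start])
    return found


for loop in loops_from("Nvidia", FLOWS):
    print(" -> ".join(loop))
```

With these edges the search finds three loops: one through OpenAI and Oracle, one through OpenAI and CoreWeave, and one directly through CoreWeave. The graph says nothing about how much money moves along each edge, only that the paths close.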

Bubble or consolidation?

One camp sees dot-com-era vendor financing all over again. Back then, Cisco and Nortel extended generous credit so carriers could buy their gear. Sales soared, until customers failed and bad debt boomeranged onto suppliers’ balance sheets. That scar tissue is why “circular” makes investors flinch. The pattern rhymes, even if the players differ.

Another camp argues the bigger story is industrial consolidation. Building frontier AI now requires nation-state-scale capital. Morgan Stanley expects roughly $3 trillion of global data-center and hardware spend by 2028. Bain & Company says even with efficiency gains, the world still faces an $800 billion revenue shortfall to fund compute by 2030. That favors the best-capitalized incumbents. It also crowds out challengers.

Demand versus dependency

Nvidia’s moat is deepest in model training, where CUDA and networking lock-in matter most. But AI’s center of gravity is tilting toward inference, where price-performance and custom silicon can win. Hyperscalers already run their own chips: Google’s TPUs, Amazon’s Trainium/Inferentia, Microsoft’s Maia. OpenAI itself inked a $10 billion custom-chip deal with Broadcom. Dependency cuts both ways.

Seen through that lens, Nvidia’s check to OpenAI is also a defensive play. It buys time, loyalty, and roadmap influence as rivals mature. The parallel $5 billion stake in Intel—plus collaboration on processors—pulls more levers to defend share as inference spending scales. It’s hedging with equity.

The accounting twist

Leasing, rather than selling, GPUs to OpenAI shifts depreciation from customer to supplier. That smooths OpenAI’s P&L, but it leaves Nvidia carrying assets whose useful lives are shortening with each generation. If utilization lags the build-out, Nvidia eats the depreciation and the idle capacity. Timing matters. A lot.
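A rough sketch of why timing matters, assuming straight-line depreciation (the article does not say which method applies). Every input below is a hypothetical placeholder in arbitrary units, chosen only to show the direction of the effect; none of it is Nvidia or OpenAI data.

```python
def annual_depreciation(asset_cost: float, useful_life_years: float) -> float:
    """Straight-line depreciation: cost spread evenly over the useful life."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return asset_cost / useful_life_years


def idle_depreciation(asset_cost: float, useful_life_years: float,
                      utilization: float) -> float:
    """Share of the annual charge attributable to capacity that sits unused."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return annual_depreciation(asset_cost, useful_life_years) * (1.0 - utilization)


# HYPOTHETICAL inputs, arbitrary units: not figures from the deal.
FLEET_COST = 100.0
UTILIZATION = 0.75

for life_years in (5, 4):
    charge = annual_depreciation(FLEET_COST, life_years)
    idle = idle_depreciation(FLEET_COST, life_years, UTILIZATION)
    print(f"{life_years}-year life: annual charge {charge:.2f}, of which idle {idle:.2f}")
```

Shortening the assumed life from five years to four raises the annual charge from 20 to 25 units, and at 75% utilization a quarter of that charge buys nothing. Under a lease, both effects land on the lessor's books.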

History suggests overbuild risk is real. In the telecom bust, fiber capacity outpaced demand by years. If AI workloads don’t fill 10+ gigawatts quickly, carrying costs bite. The CoreWeave backstop mitigates one channel’s demand shock, but it also concentrates exposure.

The policy substrate

Public policy is pulling in the same direction as private capital. Washington’s latest research priorities elevate AI and quantum, with funding flows that naturally benefit established chip and cloud providers. Export controls on high-end accelerators widen the moat for those with access and supply. Rules meant to secure the frontier can also entrench it.

That said, policy won’t conjure ROI. Enterprise adoption still has to justify the bill. Some forecasts are bullish—Citigroup models AI revenues rising toward $780 billion by 2030; Morgan Stanley sees $1.1 trillion by 2028—but those numbers still wrestle with the Bain-sized gap. Optimism meets arithmetic.

The timing tells a story

Nvidia is navigating three transitions at once: training to inference, general-purpose GPUs to custom silicon, and product sales to service models. Each nudges the business away from its strongest terrain. Circular financing tries to lock the terrain in place by underwriting demand. It’s clever. It’s also risky.

OpenAI’s revenue growth offers partial validation—run-rate figures have surged—but not yet enough to underwrite the multi-trillion-dollar infrastructure ramp industry-wide. If demand catches up, Nvidia’s flywheel looks genius. If it doesn’t, the balance sheet becomes a shock absorber.

The bottom line

This deal is both an accelerant and a tell. It accelerates OpenAI’s capacity and Nvidia’s dominance. It also tells you that AI’s economics are being engineered as much as they’re being discovered. That’s not necessarily bad. It is definitely fragile.

Why this matters

- Vendor financing at this scale reshapes market structure, turning suppliers into lenders and amplifying downside if demand underperforms.

- Frontier AI now requires nation-state-scale capital, concentrating power among incumbents and narrowing the path for new entrants regardless of near-term returns.

❓ Frequently Asked Questions

Q: What exactly is "circular financing" and why are analysts worried?

A: Circular financing occurs when a vendor funds its own customers to buy its products. Nvidia invests in OpenAI, which buys compute from Oracle, which in turn buys Nvidia chips. The result is a loop in which Nvidia's money returns as revenue. Critics worry this inflates demand and masks true market appetite for AI hardware.

Q: How does this compare to the dot-com bubble crash?

A: During 2000-2001, Cisco and Nortel lent money to telecom companies to buy their equipment. When the bubble burst, customers went bankrupt and equipment makers held massive bad debt. Both companies saw vendor financing exceed 10% of annual revenues before the crash devastated their valuations.

Q: What is CoreWeave and why does it matter in this deal?

A: CoreWeave is a cloud provider that operates 250,000+ Nvidia GPUs and serves OpenAI. Nvidia owns 7% of CoreWeave (worth $3 billion) and agreed to buy $6.3 billion in unused capacity. This creates another circular loop—Nvidia invests in CoreWeave, which buys Nvidia chips to serve OpenAI.

Q: Why is Nvidia leasing GPUs to OpenAI instead of selling them?

A: Leasing helps OpenAI avoid depreciation charges on its books, improving its financial appearance. But Nvidia must carry the depreciation risk and could be stuck with obsolete inventory if demand falls. GPU generations become outdated quickly, making timing crucial for profitability.

Q: What happens if AI infrastructure demand doesn't match the buildout?

A: Nvidia would face revenue shortfalls and depreciation losses on idle equipment. Historical precedent suggests risk: during the telecom crash, fiber capacity exceeded demand for years. With 10+ gigawatts planned, utilization timing becomes critical—empty data centers cost money while generating no revenue.