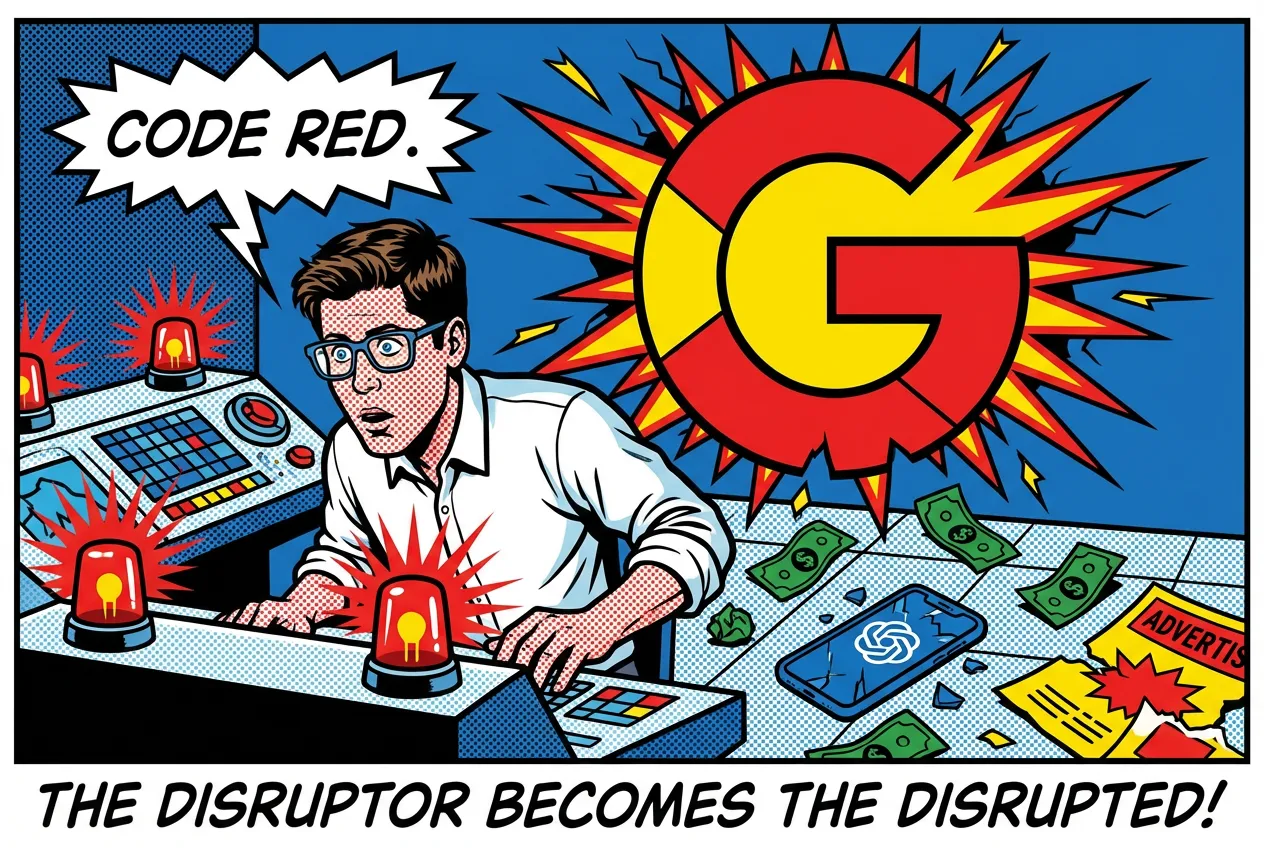

When ChatGPT launched in November 2022, Google responded by pulling engineers off other projects and rushing Bard to market. The demo flopped. Factual errors in the presentation. Stock took a hit. Two years of playing catch-up followed while OpenAI raised billions and owned the narrative.

On Monday, Sam Altman sent a memo to OpenAI employees declaring "code red" at his own company. The instruction: pause work on advertising integration, AI shopping agents, and the Pulse personal assistant. New priority is fixing ChatGPT. Speed problems. Reliability issues. Users complaining the thing can't handle basic arithmetic.

Google's stock is climbing. OpenAI is shelving revenue initiatives it can't afford to delay. Whatever advantages the startup once held have started working against it.

The Breakdown

• OpenAI declared "code red," its highest urgency level, delaying advertising, shopping agents, and Pulse to focus on fixing ChatGPT's core functionality

• Gemini grew from 450 million to 650 million monthly users between July and October; engagement data shows users spend more time with Gemini than ChatGPT

• OpenAI needs roughly $200 billion in annual revenue by 2030 to break even and has committed to $1.4 trillion in compute spending over the next decade

• Google's ownership of custom silicon and integrated ecosystem creates structural cost advantages that OpenAI, which rents GPUs through Azure, cannot match

The Reversal No One Predicted

Gemini 3 broke something at OpenAI. Google's latest model, released last month, outperformed GPT-5 on industry benchmark tests. The market responded immediately. But benchmark scores don't tell you much about what's actually happening with users.

Here's what does. Google said Gemini had 450 million monthly active users back in July. By October, that number reached 650 million. Forty-four percent growth in a single quarter, most of it driven by an image generator called Nano Banana that caught fire in August. Similarweb's engagement data paints an even starker picture: users are spending more time in Gemini sessions than ChatGPT ones.
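The growth figure follows directly from the two reported user counts. A quick Python check, using only the July and October numbers quoted above (the variable names are illustrative):

```python
# Gemini monthly active users as reported by Google, in millions
july_mau = 450
october_mau = 650

# Relative growth over the period: (new - old) / old
growth = (october_mau - july_mau) / july_mau
print(f"{growth:.1%}")  # 44.4%
```

200 million new users on a base of 450 million works out to about 44 percent, which matches the figure in the text.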

OpenAI touts 800 million weekly users. Sounds dominant. But weekly uniques measure something different from session depth. People open the app, ask a question, leave. Session duration matters more for long-term retention, and that's where Gemini is winning.

Altman's memo didn't dance around the problem. He told staff the company had reached "a critical time for ChatGPT" and announced daily calls among senior leadership focused specifically on improvements. Employees might need to transfer between teams temporarily. The company had already hit "code orange" back in October. Red is the highest level. Whatever they tried in the interim didn't work.

Nick Turley runs ChatGPT for OpenAI. He posted on X Monday evening, and the tone felt defensive. The focus now, he wrote, was making ChatGPT "more capable" and expanding "access around the world." He claimed the product handles "roughly 10% of search activity." Ten percent of what, exactly? Global web searches? Some narrower category? He didn't say.

When Your Emergency Requires Abandoning Your Lifeboat

OpenAI is delaying advertising because the product has deteriorated too much to monetize safely. Push ads on users who are already frustrated with basic functionality and you accelerate the exodus. That's the calculation.

But the financial pressure hasn't eased. OpenAI loses money. It will keep losing money for years. According to The Wall Street Journal, the company's internal projections show it needs roughly $200 billion in annual revenue to break even by 2030. For context, Google's entire annual revenue is around $300 billion. OpenAI is targeting two-thirds of that within five years, starting from a base of maybe $4 billion today.

The compute situation makes it worse. OpenAI has signed deals with Oracle, SoftBank, and others committing to roughly $1.4 trillion in spending over the next decade. Not aspirational targets. Contractual commitments with penalties attached.

Advertising was supposed to help close the gap. Beta code from ChatGPT's Android app, version 1.2025.329, contains references to ad infrastructure. Engineers found strings mentioning "ads feature," "search ad," and something called "bazaar content." HSBC published an analysis recently suggesting OpenAI needs hundreds of billions in additional capital to remain operational even under optimistic assumptions.

That advertising push is now on hold. Same with AI shopping agents that might have generated affiliate revenue. Same with Pulse, the personalized morning briefing feature OpenAI launched in September.

The delay reveals something uncomfortable. While OpenAI chased expansion and announced ambitious projects, the core product degraded. GPT-5 arrived in August. Users complained about robotic responses and errors on basic math and geography. OpenAI patched it last month. Still not enough, apparently.

The Structural Disadvantage Was Always There

Google's advantages run deeper than any individual model. They're architectural.

Koray Kavukcuoglu, who serves as DeepMind's chief technology officer, explained that Google trained Gemini using custom Tensor Processing Units (TPUs) that the company designs in-house. Google doesn't rent compute. It builds data centers. It designs its own silicon. The economics are fundamentally different.

OpenAI rents GPUs through Microsoft's Azure. This fact gets mentioned in passing but its implications rarely get examined. Every query runs on someone else's hardware at someone else's margin, so inference costs remain elevated. That $20 monthly subscription tier was never going to subsidize the free tier at scale, not with hundreds of millions of users generating queries. The free tier exists because OpenAI needs the data pipeline and user acquisition funnel. Necessary, expensive, and unsustainable without other revenue. Revenue that just got postponed.

Integration advantages matter too. Google can embed Gemini directly into Android, Gmail, Calendar, Meet, Search, eventually YouTube. Each touchpoint generates training data. Each improvement compounds into the next release. Calendar invites auto-generate. Meeting transcripts appear without prompting. Photo editing runs on-device through Pixel hardware.

Microsoft was supposed to deliver similar integration benefits through its OpenAI partnership. The results have been uneven at best. OpenAI's desktop app shipped first on Mac. Copilot remains fragmented across Microsoft's product suite. Windows Central's Jez Corden tested Microsoft's AI-powered photo editing features and found them essentially non-functional. Generative erase doesn't work properly. Object removal fails on basic tasks. Meanwhile the partnership that was supposed to challenge Google's ecosystem play keeps producing friction instead of synergy.

The Advertising That's Coming Anyway

Delayed isn't canceled. When ads arrive in ChatGPT, and they will, the privacy questions go beyond what we've encountered with search or social media.

ChatGPT knows things about users that Google Search never learned. The conversational format invites disclosure. People share health worries, relationship tensions, job anxieties, financial problems. They do this because the interface feels private, like journaling or talking to a therapist. It's not.

OpenAI released data recently about mental health signals in user conversations. The company reported that approximately 0.07% of weekly active users show possible indicators of severe psychological disturbance. Mania. Psychosis. Suicidal thinking. Against a base of 800 million users, that percentage translates to roughly 560,000 people. These users are sharing some of their most vulnerable moments with a platform preparing to monetize their attention through personalized advertising. The juxtaposition is hard to sit with.
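The 560,000 estimate is a straight percentage of the weekly user base. A minimal Python check, assuming the 800 million weekly figure and the 0.07% share quoted above (integer math avoids floating-point rounding):

```python
# Weekly active users, as claimed by OpenAI
weekly_users = 800_000_000

# 0.07% expressed as 7 parts per 10,000
flagged_users = weekly_users * 7 // 10_000
print(f"{flagged_users:,}")  # 560,000
```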

What will those ads look like? The beta code suggests personalization is coming. Not banner ads in the sidebar. Not labeled sponsored links. Product recommendations woven directly into responses.

The advertising industry figured out years ago that disguised promotion outperforms obvious promotion. Sharethrough and IPG Media Labs published research in 2015 using eye-tracking equipment on 4,770 test subjects. They compared how people engaged with native ads versus traditional banner placements. The native formats, designed to mimic surrounding content, drew 53% more visual attention. Industry spending followed. Native advertising is projected to hit $400 billion globally by 2032.

Chatbots represent the logical endpoint of this trend. The model doesn't present a list of options with some marked "sponsored." It synthesizes. It recommends. Users can't trace why the response favored one brand over another because the architecture doesn't permit that kind of inspection.

Altman used to express discomfort with this direction. At Harvard Business School in May 2024, he called combining ads and AI "uniquely unsettling." A last resort, he said. Thirteen months later, on OpenAI's podcast, the language had changed. Not opposed to ads. Working through implementation details. The resistance dissolved faster than anyone expected.

Obligations Don't Wait

Altman told employees that growth might drop to single digits through 2026 while the company focuses on fixing its product. For a startup that has raised capital on the promise of hypergrowth, that's a significant concession.

Investors tolerate losses when trajectory stays steep. OpenAI's pitch has always been about the slope of the curve. Flatten that slope and the next funding conversation gets harder. The compute commitments don't pause while OpenAI debugs ChatGPT. Oracle and SoftBank expect their money regardless of product quality. Talent competition with Google and Anthropic doesn't pause either. Neither does the groundwork for an eventual IPO that CFO Sarah Friar says isn't imminent but obviously influences strategic planning.

Anthropic has been gaining ground with enterprise customers. Claude appeals to organizations that prioritize consistency and safety over raw capability. Business users don't want to explain to clients why the AI made an arithmetic error in a presentation. They want boring reliability.

The code red buys time. OpenAI couldn't keep building monetization infrastructure on top of a product users were abandoning. The decision makes tactical sense. But time bought isn't the same as problems solved.

Google can subsidize AI losses indefinitely. Search prints cash. OpenAI survives by convincing each new round of investors that profitability approaches despite revenue targets that look increasingly detached from reality. Advertising delayed. Shopping agents delayed. Monetization delayed.

Some delays you recover from. Others compound. Nokia discovered this. So did Yahoo, and BlackBerry, and a dozen other companies that led their categories until suddenly they didn't. OpenAI has time. Less than it did yesterday. More than it will have tomorrow.

Why This Matters

- For investors in Nvidia, Microsoft, and Oracle: Those compute commitments represent future revenue only if OpenAI survives long enough to honor them. Single-digit growth projections and postponed monetization introduce execution risk that current valuations may not reflect.

- For enterprise customers choosing AI providers: Code red signals OpenAI is redirecting resources toward consumer ChatGPT. API performance and enterprise features could suffer. Organizations planning major AI deployments should factor this uncertainty into vendor selection.

- For regulators examining AI advertising: Beta code points toward what analysts suspected. Personalized native advertising in conversational AI is coming, and users may not be able to distinguish recommendations from paid placements. Disclosure frameworks designed for search engines and social feeds don't address this.

❓ Frequently Asked Questions

Q: What do OpenAI's color-coded alerts mean?

A: OpenAI uses three internal alert levels: yellow, orange, and red. Red is the highest urgency. The company hit code orange in October 2025 when competition intensified. Monday's escalation to code red triggers daily leadership calls, potential staff transfers between teams, and delays to non-essential projects until ChatGPT quality improves.

Q: What is Nano Banana and why does it matter?

A: Nano Banana is Google's image generator, released in August 2025. It drove much of Gemini's 44% user growth between July and October. The tool gained traction for turning dense text into accurate infographics, pulling users who previously relied on ChatGPT for visual tasks.

Q: What ad features did engineers find hidden in ChatGPT's code?

A: Beta version 1.2025.329 of ChatGPT's Android app contains references to "ads feature," "search ad," and "bazaar content." The code suggests OpenAI is building infrastructure for personalized native advertising embedded within responses, not traditional banner placements. OpenAI hasn't publicly acknowledged these features exist.

Q: Why can't OpenAI build its own AI chips like Google does?

A: Custom chip development requires billions in upfront investment and years of work. Google started its Tensor Processing Unit program in 2013 and has iterated through several hardware generations since. By one rough estimate, OpenAI would need five to seven years minimum to match this. Renting GPUs through Microsoft's Azure is faster but significantly more expensive per query.

Q: What went wrong with GPT-5 when it launched?

A: GPT-5 released in August 2025 and drew complaints about robotic, cold responses and errors on basic math and geography questions. OpenAI patched it in November to improve warmth and instruction-following. The code red declaration suggests these fixes haven't resolved the quality problems pushing users toward Gemini.