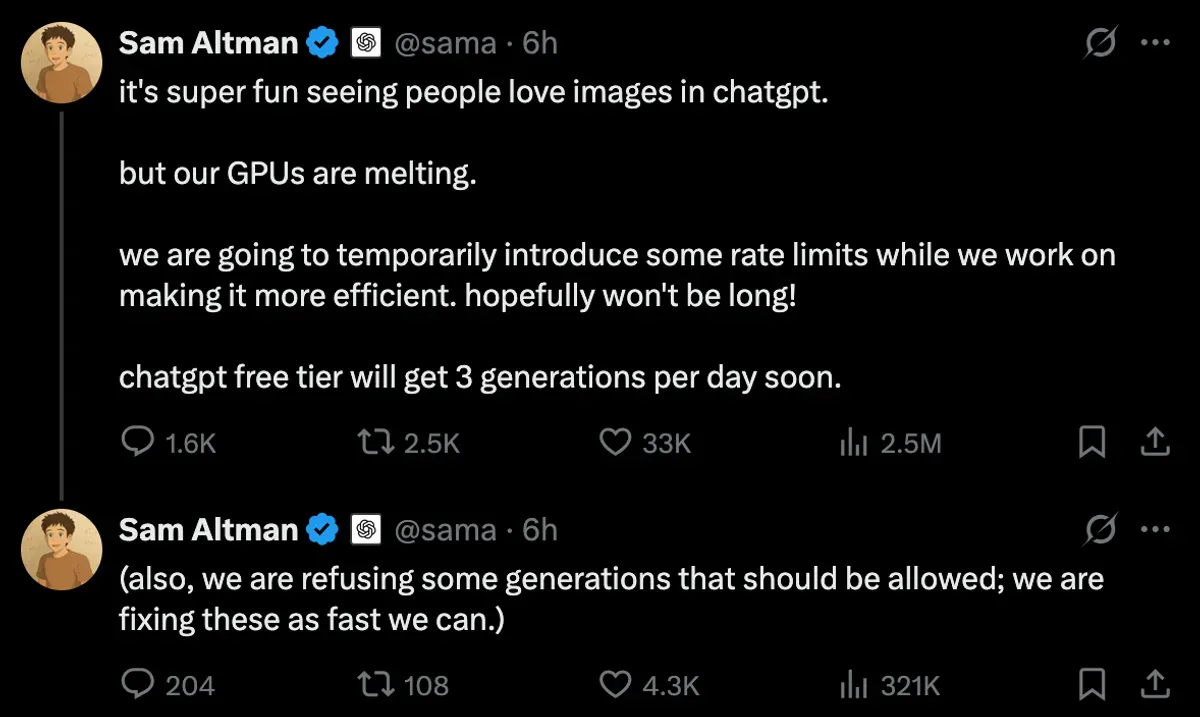

OpenAI's head of model behavior, Joanne Jang, revolutionized the company's image generation rules just in time for its servers to melt down. The new features proved so popular that CEO Sam Altman had to slam the brakes.

The scene echoes Twitter's infamous growing pains of 2008. Back then, the cute "Fail Whale" error page greeted users whenever servers buckled under viral growth. Today, OpenAI faces its own version: GPUs pushed to their limits by enthusiastic users creating AI art.

"Our GPUs are melting," Altman posted on X today. His team scrambled to add rate limits as demand overwhelmed their infrastructure. Free users will soon get three images per day - once the servers stop smoking.

The crisis emerged from Jang's bold new vision for AI safety. She scrapped the old "block everything risky" rulebook for a system that trusts users while targeting specific harms. The change unleashed a flood of creativity - and an avalanche of processing requests.

Past launches locked down features behind thick walls of caution. The AI refused many reasonable requests, fearing misuse. Jang's team watched users bump into unnecessary restrictions. Even obvious use cases got blocked.

"AI lab employees should not be the arbiters of what people should and shouldn't be allowed to create," Jang writes. Her team learned humility the hard way. Users kept discovering valuable applications they'd never imagined.

The new approach tackles thorny issues head-on. Take public figures - instead of universal restrictions, anyone can opt out of being depicted. Cultural symbols like swastikas? Permitted in educational contexts, blocked when spreading hate.

Jang's team also rewrote rules around human diversity. The old system nervously rejected requests to make people look "more Asian" or "heavier," accidentally treating these features as problematic. The new approach celebrates human variation.

One quote captures Jang's philosophy: "Ships are safest in harbor, but that's not what ships are for." She argues that excessive caution carries its own risks. When fear blocks innovation, good ideas die unseen.

Consider memes. The old thinking questioned whether better meme-making tools justified potential misuse. Jang flipped this logic. Small moments of delight and connection improve lives. Why sacrifice real benefits to prevent hypothetical problems?

The changes might look like lowered standards to outside observers. Jang disagrees. Her team spent months researching and debating each policy shift. The new rules reflect greater sophistication, not less care.

OpenAI now positions itself to learn from real-world use. When policies need updates, the company will change them. Jang sees this flexibility as a strength, not a weakness. Perfect rules don't exist - but adaptable ones do.

The company's GPT-4o model powers these improved images. It renders text better and creates more realistic scenes than previous versions. The Verge called it a "step change" improvement. If only the hardware could keep up.

Why this matters:

- OpenAI's success threatens to outrun its infrastructure. Even tech giants can't predict user demand.

- The company traded "block everything" for "enable creativity" - and users responded with such enthusiasm that they broke the system. Talk about a task failed successfully.

Read on, my dear:

- Joanne Jang's Substack: Thoughts on setting policy for new AI capabilities