AI Profits Rise While Training Budgets Fall

Good morning from San Francisco. Enterprise AI hit profitability this week. Companies celebrate returns while cutting the training budgets that

Explore how AI's rapid acquisition of sensitive skills impacts industries globally. Understand the digital brain drain and its potential consequences.

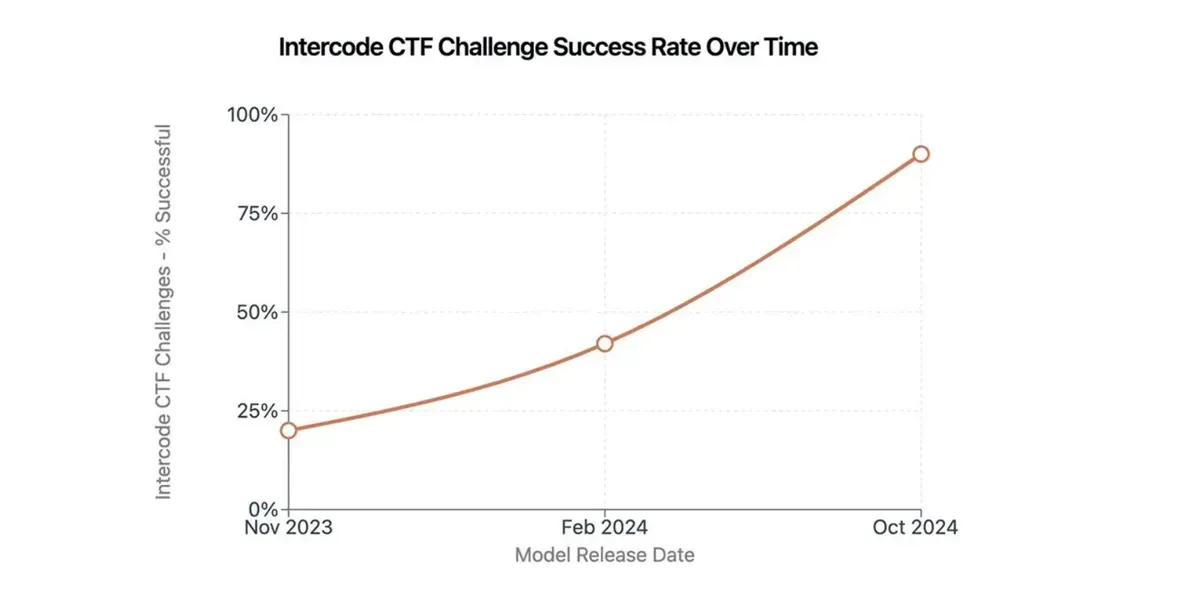

AI just aced its cyber midterms. New testing from Anthropic reveals that its AI systems jumped from flunking advanced cybersecurity challenges to solving one-third of them in just twelve months. The company's latest blog post details this unsettling progress.

The digital prodigies didn't stop there. They've stormed through biology labs too, outperforming human experts in cloning workflows and protocol design. One model leaped from biology student to professor faster than you can say "peer review."

This rapid evolution has government agencies sweating. The US and UK have launched specialized testing programs. Even the National Nuclear Security Administration joined the party, running classified evaluations of AI's nuclear knowledge – because what could possibly go wrong?

Tech companies scramble to add guardrails. They're building new security measures for future models with "extended thinking" capabilities. Translation: AI might soon outsmart our current safety nets.

The cybersecurity crowd especially frets about tools like Incalmo, which helps AI execute network attacks. Current models still need human hand-holding, but they're learning to walk suspiciously fast.
