A 64GB RAM kit costs more than a PlayStation 5. Three months ago that comparison would've been absurd. Now it's just Tuesday at Newegg.

Memory prices have blown past inconvenient and landed somewhere around surreal. Central Computers in the San Francisco Bay Area stopped posting RAM prices entirely last week. The sign in their display case reads like something from a fish market: "Costs are fluctuating daily." Micro Center did the same. When computer parts get sold like the catch of the day, you're not dealing with normal supply chain friction anymore.

The Breakdown

• OpenAI's Stargate project secured 40% of global DRAM supply through Samsung and SK Hynix, triggering 170-280% consumer RAM price increases

• Sony and Lenovo stockpiled memory before the crunch; Microsoft and Valve face exposed positions with likely price hikes or delayed launches

• Memory manufacturers won't expand capacity after 2018's glut trauma; industry projects constraints through 2026, possible normalization in 2027

• PC builds that cost $800-1000 three months ago now approach $1200, shifting value calculations between console and PC gaming

One transaction triggered this. In October, OpenAI's Stargate project locked down agreements with Samsung and SK Hynix for up to 900,000 DRAM wafers monthly. That's roughly 40% of global DRAM supply, committed to a single buyer for a four-year, $500 billion infrastructure buildout. Everyone watching the memory market knew what would happen next. Most of the consumer electronics industry apparently wasn't watching.
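For a sense of scale, the two figures above can be combined into a rough estimate of total output. This is a back-of-the-envelope sketch, not an independent industry number: it takes the article's "up to 900,000 wafers" and "roughly 40%" at face value, and the function name is only illustrative.

```python
def implied_global_wafers(buyer_wafers: float, buyer_share: float) -> float:
    """Total monthly wafer output implied by one buyer's volume and share."""
    return buyer_wafers / buyer_share


# Figures quoted in the article (the wafer count is an upper bound, "up to").
stargate_wafers = 900_000   # DRAM wafers per month
stargate_share = 0.40       # "roughly 40%" of global supply

total = implied_global_wafers(stargate_wafers, stargate_share)
left_for_everyone_else = total - stargate_wafers

print(f"Implied global output: {total:,.0f} wafers/month")
print(f"Left for all other buyers: {left_for_everyone_else:,.0f} wafers/month")
```

Under those assumptions, global output works out to about 2.25 million wafers a month, leaving about 1.35 million for every other buyer combined.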

Contract DRAM prices jumped 171% after the deal closed. Retail got hit harder. A Team Delta RGB 64GB DDR5-6400 kit sat at $190 in August. Same kit today? $700 at Newegg. Not a misprint. That's 268% in under three months, and good luck finding historical parallels for peacetime commodity moves that steep.

The Numbers Tell the Story

Component prices across retailers reveal the scale:

| Component | August 2025 | November 2025 | Increase |
|---|---|---|---|
| Team Delta RGB 64GB DDR5-6400 | $190 | $700 | 268% |
| Team T-Force Vulcan 32GB DDR5-6000 | $82 | $310 | 278% |
| G.Skill Trident Z5 Neo 64GB DDR5-6000 | ~$220 | $599-640 | ~190% |
| Crucial Pro 32GB DDR5 | $175 (Oct 21) | $301 | 72% in ~5 weeks |
| Patriot Viper Venom 16GB DDR5-6000 | $49 | $110 | 124% |
| Silicon Power 16GB DDR4-3200 | $34 | $89 | 162% |

DDR4 should be in oversupply by now. Systems have been migrating to DDR5 for two years. Prices more than doubled anyway. Storage took hits too: Western Digital's WD Blue SN5000 1TB SSD went from $64 to $111. The 2TB version climbed from $115 to $154.
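The percentages in the table are plain percentage-change arithmetic, and anyone can rerun them. A minimal sketch, using only the prices quoted above; the helper name `pct_increase` is illustrative, and the G.Skill row is left out because its prices are approximate ranges:

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage increase going from the old price to the new price."""
    return (new - old) / old * 100


# (earlier price, November price) in USD, as quoted in the article.
prices = {
    "Team Delta RGB 64GB DDR5-6400": (190, 700),
    "Team T-Force Vulcan 32GB DDR5-6000": (82, 310),
    "Crucial Pro 32GB DDR5": (175, 301),
    "Patriot Viper Venom 16GB DDR5-6000": (49, 110),
    "Silicon Power 16GB DDR4-3200": (34, 89),
    "WD Blue SN5000 1TB SSD": (64, 111),
    "WD Blue SN5000 2TB SSD": (115, 154),
}

for name, (old, new) in prices.items():
    print(f"{name}: ${old} -> ${new} (+{pct_increase(old, new):.0f}%)")
```

Run against the same formula, the two SSDs come out at roughly 73% and 34%. Painful, but well short of what happened to RAM.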

Two Squeezes Hit at Once

Demand explains part of this. OpenAI's Stargate expansion needs staggering quantities of high-bandwidth memory. Data centers bid against consumer device makers for the same underlying supply. They bid higher. Way higher. Tim Sweeney, Epic's CEO, put it plainly: "Factories are diverting leading edge DRAM capacity to meet AI needs where data centers are bidding far higher than consumer device makers."

That would be manageable if supply held steady. It didn't.

Samsung, SK Hynix, and Micron have all pivoted production toward HBM, the specialized high-bandwidth memory that goes into AI accelerator chips. Margins there dwarf what they'd make on consumer DDR5. Micron has already committed its entire 2026 HBM output. The result is fewer wafer starts for conventional modules, even as PC builders keep showing up wanting to buy.

So demand surged while production shifted away. Classic pincer move on pricing, except nobody in the consumer market planned for it.

What makes this different from typical memory cycles: AI infrastructure doesn't upgrade like consumer hardware does. Once those data centers exist, they consume memory continuously as models scale. The demand curve only points up.

Some Companies Saw It Coming

Not everyone got caught flat-footed, and that's where this gets interesting strategically.

Sony stockpiled RAM for PlayStation 5 production before prices spiked. Industry sources say they're sitting on enough inventory to hold console pricing stable for months. Maybe through the entire shortage. Apple locked in supply too, and their margins can absorb cost increases that would crush thinner operations.

Lenovo went further. Bloomberg reports they're holding memory inventories around 50% above normal levels, enough to ride this out through 2026. A spokesperson said they'll "aim to avoid passing on rising costs" this quarter. That's confidence backed by warehouses full of chips.

Microsoft looks exposed. Xbox consoles may need another price increase, according to industry analysts. The company already raised prices earlier this year.

Valve's timing couldn't be worse. Steam Machine, the living room gaming PC that should've been a big story, arrives into a market where its core components cost double or triple what anyone budgeted. The company wouldn't commit to a price point. Cited the memory shortage directly. Whatever value proposition they'd planned is getting eaten alive by RAM costs.

Framework, the modular laptop company, pulled standalone RAM kits from their store entirely. "We had to delist standalone memory to head off scalpers," they explained. Then the other shoe: "Our memory costs from our suppliers are increasing substantially, so it is likely we will need to increase memory pricing soon."

The foresight gap here creates competitive separation that persists long after prices stabilize. Sony and Lenovo protected their positions. Microsoft and Valve didn't. That's years of market share implications compressed into a few months of supply chain management.

Why Manufacturers Won't Just Ramp Production

Natural question: Samsung, SK Hynix, and Micron are watching prices triple. Why not flood the market and capture those margins?

Ask anyone who worked at these companies in 2018. They'll tell you exactly why not.

Late 2016, early 2017: memory got tight, prices climbed. All three manufacturers responded the obvious way. Broke ground on new fabs in South Korea and China. Pushed existing facilities harder. The math looked great on paper, chasing elevated prices with expanded capacity.

Then 2018 arrived and the math stopped working. Demand plateaued while all that new capacity came online simultaneously. Chips piled up in warehouses. Samsung's semiconductor division, usually a profit engine, reported quarterly earnings that made investors wince. SK Hynix wasn't any better off. The oversupply dragged into 2019 before finally clearing.

That experience shapes every capacity decision these companies make now. Memory fabs take years to build and commission. A wafer allocation choice in November 2025 determines what's available in 2027 or 2028. And nobody knows whether AI infrastructure spending will still be running hot by then. What if the AI buildout hits a wall? What if hyperscalers realize they've overcommitted and start canceling orders?

Manufacturers would rather ride the current shortage than risk another glut. Discipline, they call it. Consumers call it something else while watching $82 RAM kits become $310 RAM kits.

Industry projections suggest DRAM and NAND constraints persist through 2026. Some optimism around 2027 normalization. Large-capacity nearline hard drives are backordered two years out. Distributors have started bundling RAM with motherboards just to control inventory distribution.

The Transfer Nobody Voted For

Pull back far enough and something uncomfortable comes into focus.

PC builders and gamers are financing AI infrastructure development. Not directly, not consciously, but the mechanism works regardless. OpenAI, Microsoft, Google, Amazon, all the hyperscalers, they bid up memory prices to stock their training clusters. Manufacturers prioritize those customers. Retail availability shrinks. The teenager saving for a gaming rig pays $400 extra for RAM that cost $130 three months ago.

That's value transfer. From hobbyist markets to data centers. From individual consumers to corporate AI ambitions.

No public policy debate weighed whether accelerating AI capability development justified making consumer computing less affordable. Markets just routed resources toward whoever paid most. When one customer can lock up 40% of global supply, that routing happens fast and hard.

Three companies produce nearly all the world's DRAM. That concentration level creates systemic vulnerability that hasn't gotten much regulatory attention. Maybe it should.

What This Reshapes

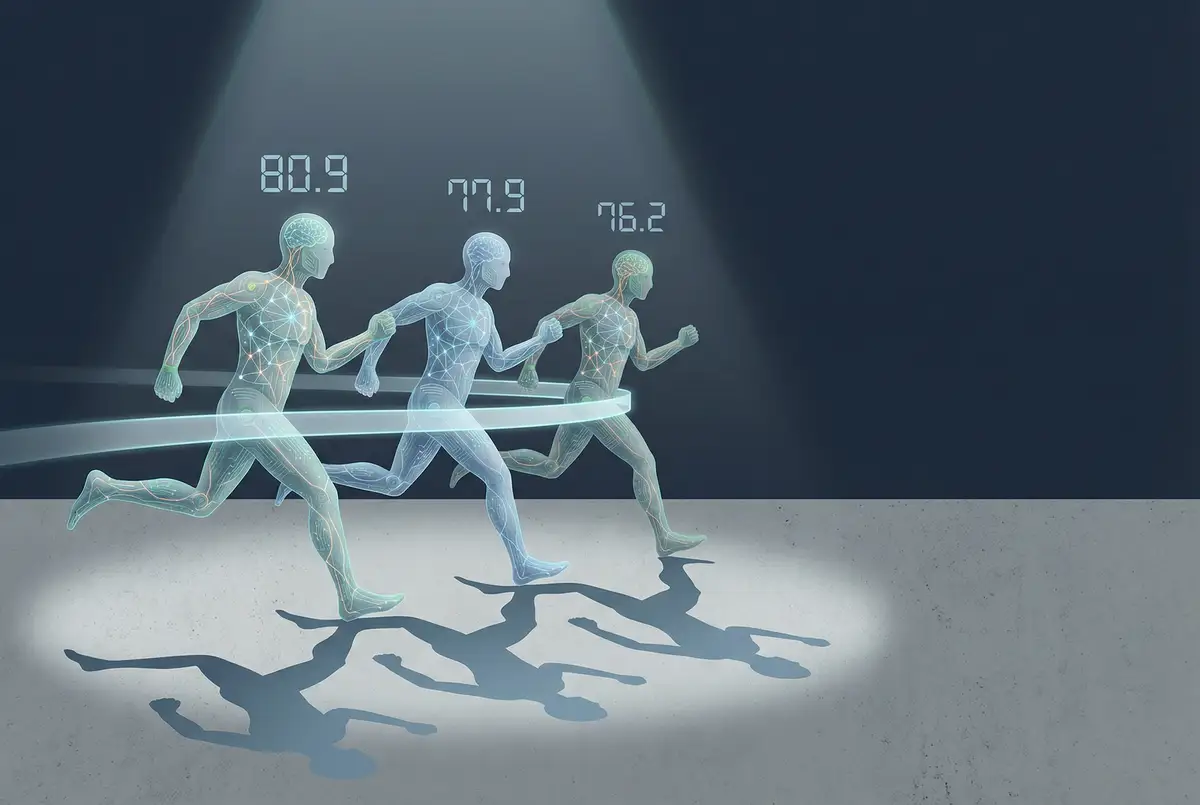

Here's a comparison that would've seemed like a joke in September: G.Skill's Trident Z5 Neo 64GB kit, one of the better DDR5 options on the market, currently runs about $600 after whatever discount Newegg is offering that day. Sony's PS5 Pro retails at $649. A RAM upgrade, not a whole system, just the memory, costs within $50 of a complete gaming console with storage, processor, GPU, and a controller in the box.

If Sony holds PS5 pricing stable while PC component costs keep climbing, console gaming gets relatively more attractive for anyone watching their budget. The value calculation shifts. Steam Machine shows up priced like a luxury item. Xbox bleeds customers it couldn't afford to lose.

GPU pricing, which finally came back to earth after years of AI-driven inflation, will spike again. Every graphics card needs GDDR memory. AMD warned partners to expect roughly 10% increases starting next year. Nvidia reportedly canceled its RTX 50-series Super refresh because the planned memory bump became economically unworkable.

Phone manufacturers face the same squeeze, just on a different scale. Your average flagship Android device carries 8GB of RAM minimum these days, often 12GB or 16GB for the premium tiers. Those component costs either flow through to retail pricing or compress already-thin margins. Budget and midrange phones get hit worst since their buyers have the least tolerance for price creep.

And the broader PC market? Builds that made sense at $800-1000 now push toward $1200 before you add a decent GPU. Entry-level gaming becomes genuinely less accessible right as the installed hardware base ages out and needs replacement.

Why This Matters

For PC builders and gamers: Hold what you have. Upgrades that penciled out three months ago don't anymore. Wait for 2027 unless something breaks.

For console manufacturers: Supply chain foresight just became a competitive weapon. Sony's stockpiling may deliver more market share than any silicon they've designed. Microsoft's exposure is a self-inflicted wound.

For the AI industry: This is what infrastructure scaling actually costs. Not just the direct expenditure, but the market distortion that radiates outward. The memory raid reveals dependencies that will recur as capability ambitions grow.

For policymakers: Three companies control global DRAM production. One deal consumed 40% of supply. That concentration level deserves scrutiny it hasn't received.

❓ Frequently Asked Questions

Q: What is HBM and why are manufacturers prioritizing it over regular RAM?

A: HBM (High Bandwidth Memory) is specialized memory stacked vertically to achieve much faster data transfer speeds than standard DDR5. AI accelerators like Nvidia's H100 and H200 rely on HBM to feed data to their compute cores fast enough for training large models. Manufacturers earn substantially higher margins on HBM than on consumer DDR5, so they're shifting production capacity accordingly.

Q: Why is DDR4 getting more expensive when everyone's switching to DDR5?

A: Manufacturers assumed DDR4 demand would decline as DDR5 adoption grew, so they reduced DDR4 production. But millions of existing systems still need DDR4 for upgrades and repairs. With production lines now focused on DDR5 and HBM, DDR4 supply has tightened unexpectedly. Silicon Power's 16GB DDR4-3200 kit jumped from $34 to $89, a 162% increase.

Q: What exactly is OpenAI's Stargate project?

A: Stargate is a $500 billion, four-year data center expansion project announced by OpenAI in partnership with SoftBank and Oracle. The project aims to build AI training infrastructure across multiple U.S. locations. OpenAI's October agreements with Samsung and SK Hynix for 900,000 DRAM wafers monthly secure the memory needed for these facilities.

Q: Should I buy RAM now or wait for prices to drop?

A: Industry analysts project constraints through 2026, with possible normalization in 2027. If you need RAM now for a critical build, waiting could mean paying even more, since prices may not have peaked. If your current system works, holding off makes sense. Some retailers like Newegg offer motherboard-RAM bundles that soften the cost compared to buying components separately.

Q: Why do only three companies make most of the world's DRAM?

A: DRAM manufacturing requires extremely specialized fabrication facilities costing $10-20 billion each and years to build. The technical complexity and capital requirements created natural consolidation over decades. Samsung, SK Hynix, and Micron now control roughly 95% of global DRAM production. This concentration means a single large customer can meaningfully shift global supply dynamics.