President Trump posted on Truth Social Tuesday urging Congress to block state AI regulation. He called it overregulation threatening America's "HOTTEST" economy. House Majority Leader Steve Scalise confirmed Republicans are eyeing the National Defense Authorization Act as a vehicle for federal preemption language.

This is the second attempt in five months. The first one died 99-1 in the Senate.

July's vote wasn't close. Ted Cruz sponsored the moratorium, then voted with 98 other senators to strip it from a budget bill. Now the White House wants another run through the NDAA, which passes Congress each December.

The coalition that killed preemption hasn't budged. Marsha Blackburn, Josh Hawley, privacy hawks in blue states. Florida Governor Ron DeSantis opposed the renewed effort the same day Trump posted. The packaging changed, though. Trump framed federal preemption as fighting "Woke AI" and protecting children, invoking the viral Google Gemini error that generated racially diverse images of historical figures.

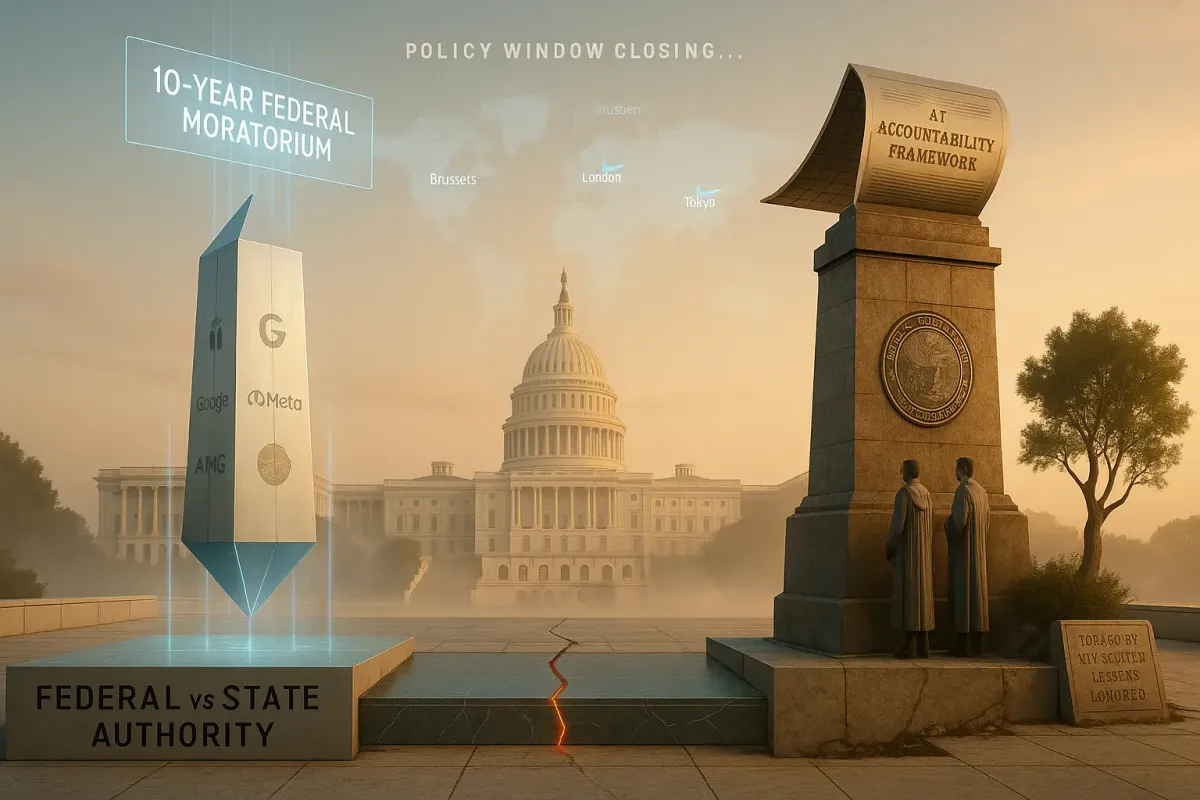

Strip away the framing. What's being proposed is federal preemption of state AI laws.

Key Takeaways

• 99 senators voted to strip AI preemption in July; the same coalition, plus DeSantis, opposes the December NDAA attempt

• Federal preemption would nullify existing state AI laws in California, Colorado, Texas, Illinois covering safety and privacy

• Republican civil war pits Cruz-Scalise tech-friendly wing against Blackburn-Hawley faction defending state child safety authority

• Tech companies lobby for a single federal framework to avoid compliance costs across 50 states, framing it as a matter of competition with China

The Regulatory Reversal Nobody's Naming

Trump's post attacks "overregulation by the States" while advocating federal regulatory standards. That's not deregulation. It's swapping 50 state approaches for one federal framework, nullifying existing laws in red and blue states alike.

California passed legislation in 2024. Large AI developers must disclose security protocols. Colorado regulated bias in automated decision systems. Texas enacted deepfake rules for elections. Illinois implemented biometric privacy protections covering AI. Federal preemption sweeps these away.

The tech industry argument follows a familiar pattern. AI companies operating across state lines face compliance costs when regulations diverge. NetChoice has pushed this framework hard, warning fragmented rules hand China advantages. Nvidia CEO Jensen Huang claimed Beijing's streamlined approach gives Chinese companies faster deployment.

Preemption proposals hit the same wall every time. States became the primary laboratories for tech regulation because Congress can't move on emerging technologies. The last major federal tech law was COPPA in 1998. States passed data privacy frameworks, biometric regulations, content moderation rules. Congress debated.

Republicans typically champion states' rights. The tech industry's preference for a single regulatory framework collides with that principle. July exposed this.

The Intra-Party Math That Doesn't Add Up

Cruz introduced the AI moratorium as a 10-year ban on state regulation. Opposition formed immediately. Blackburn, typically aligned with tech-skeptical conservatives, argued states led on child safety protections that AI chatbots threatened. Hawley built his profile attacking Big Tech. He opposed anything preventing states from regulating AI systems targeting children.

Multiple Republican-led states had enacted AI regulations. Deepfakes, biometric data, automated systems affecting children. Preemption would nullify state GOP achievements to satisfy tech companies' operational preferences.

The scope issue compounded problems. Initial drafts used language potentially covering kids' online safety laws touching AI systems. Nearly all modern content moderation uses machine learning. That raised the prospect of wiping out state protections entirely. Democrats and Republicans stripped the moratorium.

December's NDAA attempt faces identical dynamics plus one complication. The defense bill serves as a vehicle for unrelated amendments because it's must-pass. But senators opposing provisions can threaten to hold up the entire NDAA. Republican leadership lacks votes to overcome defections from its own caucus plus Democratic opposition.

Scalise told Punchbowl News leadership is "looking at" including AI language. That signals exploratory conversations, not commitments. Republican leaders face choices after July's disaster. Include weak preemption language that won't satisfy tech companies. Draft narrow provisions that might survive but accomplish little. Force the issue and risk fracturing the NDAA coalition.

Trump's intervention adds pressure without resolving the math. Several Republicans who opposed preemption in July aren't vulnerable to primaries. They represent constituencies supporting state-level tech regulation.

Who Actually Benefits From Federal Standards

AI companies have lobbied consistently for federal preemption. OpenAI and Anthropic supported federal frameworks over state legislation. Their public statements emphasize "thoughtful" regulation rather than explicit preemption demands. The practical effect is identical.

Tech companies face real compliance burdens when state rules diverge. A chatbot serving users in California, Texas, and Illinois navigates different disclosure requirements, liability standards, and definitions of prohibited content. Federal standards simplify operations.

This creates regulatory capture risk. Industries lobbying for federal standards to replace state experimentation typically secure more favorable frameworks. The federal process moves slowly, incorporates extensive industry input, produces compromises favoring established players over startups or consumer advocates.

The China competition argument recurs in every tech policy debate. Loosening antitrust enforcement. Maintaining weak privacy protections. Now AI preemption. The logic: any regulatory friction gives Chinese companies advantages, so American firms need maximum operational freedom.

The reasoning has empirical problems. China leads in facial recognition and state surveillance systems precisely because Beijing imposed top-down frameworks facilitating rapid deployment without privacy protections. The US maintains advantages in foundational AI research and large language models despite its messier regulatory environment. Perhaps because of it.

Tech companies emphasizing China threats while lobbying against state child safety laws face credibility gaps. If the primary concern is maintaining American AI leadership, restrictions on chatbots targeting minors seem orthogonal. The simultaneous invocation of national security and opposition to consumer protection suggests operational flexibility drives the agenda.

Why This Probably Dies Again

The procedural path for NDAA amendments runs on compressed timelines. House and Senate negotiators finalize bill language in late November through closed-door discussions. Final text goes to floor votes in early December with limited amendment opportunities.

For AI preemption to survive, Republican leadership needs to convince negotiators the provision won't sink the bill. Tough sell after July.

Several compromise approaches have circulated. One offers AI companies exemption from state regulation if they agree to federal standards in specific areas like child safety. That would maintain preemption while addressing the concerns that killed July's amendment. But the compromise language faces opposition from both sides. Tech companies want broad preemption, not conditional exemptions. Child safety advocates want enforceable state protections, not voluntary federal commitments.

Another scenario: narrow preemption targeting specific state requirements while preserving broader regulatory authority. It might shield companies from conflicting disclosure rules while allowing states to regulate AI-generated content, biometric data collection, and automated decision systems. Tech companies show little interest. Partial preemption preserves the compliance burdens they want to eliminate.

The most likely outcome mirrors July. Leadership includes AI language in early NDAA drafts to satisfy tech-friendly members and answer White House pressure. Opposition forms from the Blackburn-Hawley wing plus most Democrats. Negotiators quietly remove the provision to avoid jeopardizing the defense bill.

Trump's "Woke AI" framing might provide political cover for Republicans who opposed preemption in July to flip. The DEI angle repackages corporate lobbying as culture war resistance. Blackburn's public statements suggest she's not interested in that reframing. Her focus: protecting state authority to regulate AI systems affecting children. Culture war packaging doesn't change the underlying policy question.

Why This Matters

State experimentation gets another reprieve, probably temporarily. If preemption fails again in December, states continue developing AI regulations independently through 2026. That creates the fragmented landscape tech companies oppose, but it also generates diverse approaches to problems Congress hasn't addressed. It preserves laboratories of democracy at the cost of operational complexity.

Tech industry loses credibility on China arguments. Second preemption failure in six months undermines the national security framing AI companies deployed across multiple policy debates. When companies citing Chinese threats also oppose state child safety laws, geopolitical rhetoric looks like cover for standard regulatory lobbying.

Republican civil war over Big Tech intensifies. The Cruz-Scalise wing wanting federal preemption has Trump's backing. The Blackburn-Hawley faction prioritizing state authority and child protection isn't folding. This divide cuts across the party's traditional fault lines. It creates new coalitional dynamics that will shape tech policy fights beyond AI regulation.

Frequently Asked Questions

Q: What exactly is federal preemption in AI regulation?

A: Federal preemption establishes a single national regulatory framework that overrides and nullifies all existing state laws on the same topic. In this case, Congress would set AI standards that supersede California's security disclosure requirements, Colorado's bias regulations, Texas's deepfake rules, and Illinois's biometric privacy protections. States lose authority to regulate AI systems independently.

Q: How long was Cruz's original AI moratorium supposed to last?

A: Cruz initially proposed a 10-year ban on state AI regulation. After backlash over the length and scope, negotiators reduced it to 5 years before the July vote. Even the shortened version was stripped 99-1, with Cruz himself voting against his own proposal after opposition emerged in both parties over concerns it would block child safety laws.

Q: Why do lawmakers attach unrelated amendments to the defense bill?

A: The National Defense Authorization Act passes every year because neither party will risk blocking military funding and facing political consequences. This makes it a prime vehicle for controversial provisions that couldn't pass as standalone legislation. However, the tactic has limits. Senators can threaten to hold up the entire NDAA if objectionable language gets included.

Q: What compliance burdens do AI companies face across different states?

A: AI companies must navigate different disclosure requirements, liability standards, and content definitions in each state. A chatbot serving California needs security protocol disclosures, while the same system in Colorado must document bias testing, in Texas must verify deepfake prevention, and in Illinois must meet biometric privacy standards. Each requires separate compliance infrastructure.

Q: How does China's regulatory approach actually differ from America's?

A: Beijing imposes top-down frameworks through central authority, allowing rapid deployment without privacy protections. This gives China advantages in facial recognition and state surveillance systems. The US maintains a fragmented approach with state experimentation and slower federal processes, but leads in foundational AI research and large language models where innovation matters more than deployment speed.