💡 TL;DR - The 30 Seconds Version

👉 Trump's AI Action Plan lists "encourage open-source AI" as a national priority, responding to China's dominance in releasing powerful free models.

📊 DeepSeek-R1 became the most-liked model ever on Hugging Face within days, with American developers building on Chinese foundations for the first time.

🔄 Between 2016 and 2020, America led open-source AI through Google, OpenAI, and Stanford, but now Chinese labs release open models while U.S. companies lock theirs behind APIs.

🏭 Chinese research groups share not just models but data, code, and methods, while GPT-4, Claude, and Gemini remain proprietary and controlled by tech companies.

🌍 Meta's Llama generated tens of thousands of variations, but China's institutional backing for open research creates soft power advantages in global AI development.

🚀 Open-source AI drives innovation velocity through network effects; falling behind here could mean losing the broader AI race entirely.

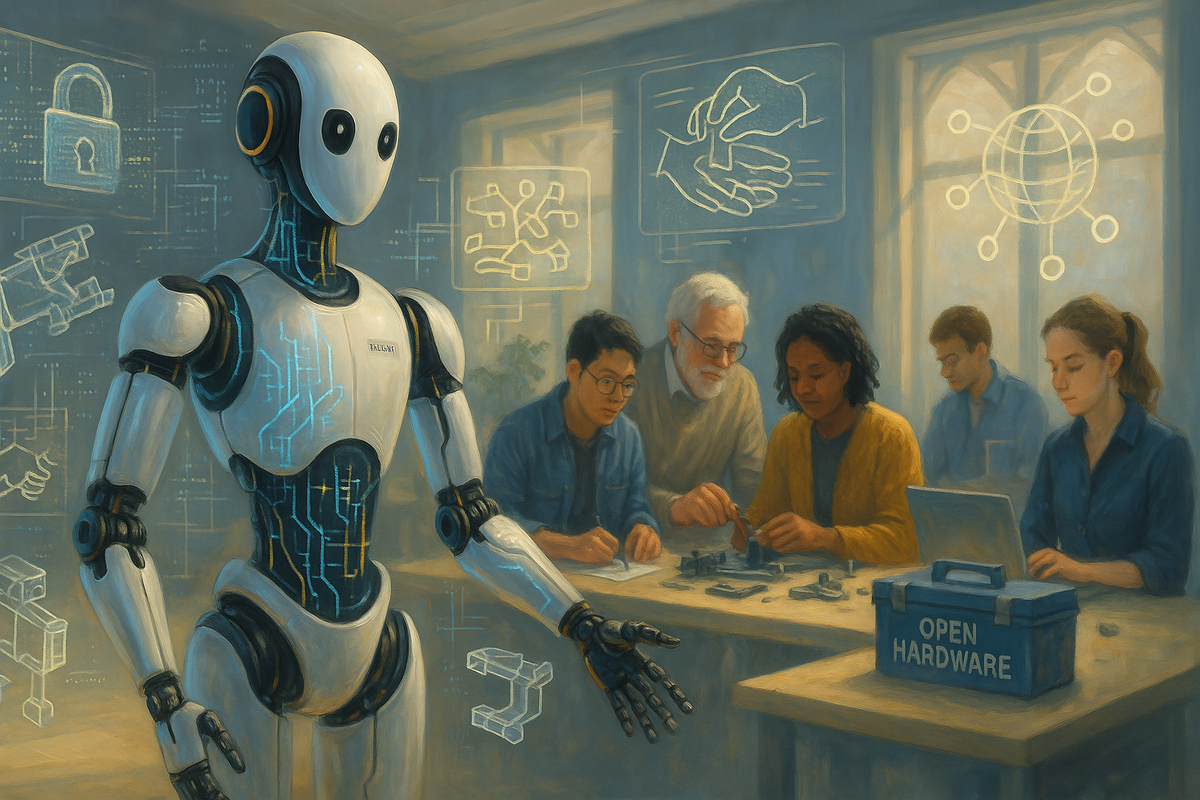

President Trump's AI Action Plan dropped last week with a surprise. Buried in the priorities was "encourage open-source and open-weight AI" — elevating what was once a nerdy technical debate into urgent national strategy.

The timing isn't coincidental. China just proved it can build world-class AI models and give them away for free. Meanwhile, America's biggest AI companies keep locking their best work behind paywalls.

This shift caught Wall Street's attention too. When DeepSeek-R1 launched earlier this year, U.S. stocks tumbled within days. In a guest piece for VentureBeat, Hugging Face CEO Clément Delangue argues that China's lead in open models is a fundamental threat to American AI leadership, one that demands dropping the "open is not safe" narrative that has dominated policy discussions.

DeepSeek didn't announce itself with flashy keynotes or press tours. The Chinese research team simply released their large language model with open weights and shared the science behind it. Developers with technical skills and access to computing resources could grab it, tweak it, and build something new.

The response was immediate. Within hours, developers worldwide started experimenting. Within days, it became the most-liked model ever on Hugging Face, spawning thousands of variants across tech companies, research labs, and startups.

But here's what spooked American observers: For the first time, U.S. AI development was building on Chinese foundations. American researchers were using Chinese models as their starting point.

The Great Reversal

This represents a complete flip from five years ago. Between 2016 and 2020, America owned open-source AI. Google released breakthrough models. OpenAI shared their research. Stanford published new methods. These became the foundation for today's AI boom. The transformer architecture — the "T" in ChatGPT — emerged from this open culture.

Now the roles have reversed. Chinese labs are pushing open-source AI forward, releasing models alongside their data, code, and research methods. They work fast and share everything.

Meanwhile, America's flagship models (GPT-4, Claude, Gemini) remain locked behind APIs. You can chat with them or call them through paid interfaces, but you can't inspect how they work, retrain them, or use them freely. The weights and training data stay proprietary, and a handful of tech companies decide how the models behave.

Why Openness Drives Innovation

Open-source AI does more than share code — it speeds up everything. When models are open, researchers can build on each other's work directly. Startups can customize AI for specific needs without depending on big tech APIs. Universities can study AI systems without black-box limitations.

Every AI advance, even in the most closed systems, builds on open foundations. Proprietary models depend on open research, from the transformer architecture to training libraries and evaluation frameworks. When openness slows down, innovation follows.

The strategic risk runs deeper than individual models. As Chinese companies like DeepSeek and Alibaba release powerful open systems, they become foundational layers in the global AI ecosystem. The tools powering America's next AI products increasingly come from overseas.

Security Through Transparency

Open models offer advantages beyond innovation speed. They're transparent. You can audit them. A hospital can modify open-source AI to analyze medical scans without depending on some vendor's black box. Same goes for government agencies processing citizen data or small businesses automating customer service.

This matters for democracy too. When algorithms help shape policy, voters deserve to see what's under the hood. Closed models make accountability nearly impossible.

America's Open-Source Assets

The U.S. isn't starting from zero. Meta's Llama family has generated tens of thousands of variations on Hugging Face. The Allen Institute for AI continues publishing excellent fully open models. Startups like Black Forest Labs are building open multimodal systems. Even OpenAI has hinted at releasing open weights soon.

But these efforts need more support. China's advantage comes partly from institutional backing for open research. Their government sees open-source AI as soft power — a way to influence global AI development by providing the foundational tools.

The Trump administration's AI Action Plan signals recognition of this dynamic. Supporting open-source AI isn't just about innovation policy. It's about ensuring American institutions shape the global AI ecosystem rather than adapting to systems built elsewhere.

The Velocity Problem

Speed matters in AI development. Open systems create compounding advantages through network effects. When thousands of developers can experiment with and improve a model, their gains stack on one another and progress accelerates.

China's research groups understand this. They release models and cultivate communities around them. Each improvement flows back into the system, making the next round of models stronger and more practical.

American companies focusing on proprietary development miss these compounding effects. Their models may be powerful, but they can't benefit from the distributed innovation that open systems enable.

Why this matters:

• America pioneered open-source AI culture but risks losing global influence by closing up while China opens up

• Open-source AI drives innovation velocity — falling behind here could mean losing the broader AI race entirely

❓ Frequently Asked Questions

Q: What's the difference between "open weights" and regular open-source software?

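A: Open weights means the trained model parameters (the "weights") are published, so you can download the model files and run them on your own computers. Traditional open-source software shares source code, but an AI model's code is of little use without the weights it learned during training. Open-weight releases don't always include the training data or training code; fully open releases, like those from the Allen Institute for AI, share those too. With open weights, developers can modify the AI's behavior, retrain it, or build new products without depending on the original company's servers.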

Q: How exactly did DeepSeek-R1 hurt U.S. stock prices?

A: When DeepSeek-R1 launched, U.S. stocks tumbled within days as investors realized a Chinese company had built a world-class AI model and released it for free. That threatened American companies charging for similar capabilities through expensive API access.

Q: What is Hugging Face and why does it matter for AI?

A: Hugging Face is like GitHub for AI models: a platform where developers share and download models. It has become one of the main ways to measure which models gain traction, so DeepSeek-R1 becoming the "most-liked model ever" there signaled broad adoption by developers worldwide.

Q: What does "API-only" access mean and why is it limiting?

A: With API access, you send requests to a model running on the provider's servers but can't download or modify it. You pay whatever usage fees the provider sets and follow its rules. If it changes pricing or shuts the service down, you're out of luck. Open weights let you run and control the model yourself, within the terms of its license.

Q: How many variations of Meta's Llama models exist?

A: Meta's Llama family has generated "tens of thousands" of variations on Hugging Face. This shows how open-source AI creates network effects: when one company releases a model, thousands of developers build improvements on top of it.

Q: How does China's government support open-source AI development?

A: China provides institutional backing for open research, and its government views open-source AI as "soft power": a way to influence global AI development by supplying the foundational tools. That backing gives Chinese researchers more room to share their work freely than profit-focused American companies have.

Q: Which American companies are still doing open-source AI?

A: Meta leads with its Llama models, the Allen Institute for AI publishes fully open models, and startups like Black Forest Labs build open multimodal systems. Even OpenAI has suggested it may release open weights soon, though it hasn't yet.

Q: If the U.S. created the transformer architecture, why is China benefiting from it now?

A: The transformer (the "T" in ChatGPT) emerged from America's open research culture between 2016 and 2020; Google researchers published it in 2017. Google, OpenAI, and Stanford shared breakthroughs freely. Now Chinese labs build on these foundations while American companies have mostly stopped sharing their latest innovations.