💡 TL;DR - The 30-Second Version

🤖 Warp launches "Warp Code" with real-time agent oversight, letting developers steer AI mid-coding and review changes as they happen.

💰 New $200/month enterprise tier offers 50,000 AI requests and 100,000-file indexing, roughly 5x the price of GitHub Copilot's $39 Enterprise plan.

🔧 Platform uses Claude Sonnet 4 for coding and OpenAI o3 for planning, with enterprise controls for custom models and zero data retention.

🏢 Companies pilot multiple agent tools while struggling to measure productivity gains, focusing on context integration over raw automation.

📈 Pricing shift from "utility" to "outcome" reflects AI tools charging for time saved rather than features provided.

🚀 Enterprise consolidation toward agent-first environments pressures standalone coding tools to integrate or disappear.

“Agentic” promises meet developer reality in Warp’s latest release

Agent tools promise hands-off coding. Warp’s new release argues the opposite: keep humans in the loop, code-review the agent live, and steer it mid-flight, as CEO Zach Lloyd outlined in an interview with The New Stack. The product shift comes with a business one: an enterprise-grade tier at $200 per seat per month, plus overages for heavy use. That’s a statement.

Founded in 2022 to modernize the terminal, Warp now avoids the word entirely. CEO Zach Lloyd calls it an “agentic development environment,” reflecting a surface where agents plan, edit, run, and deploy without forcing context switches. The claim is bolder than a better shell. It’s a new workflow.

What’s actually new

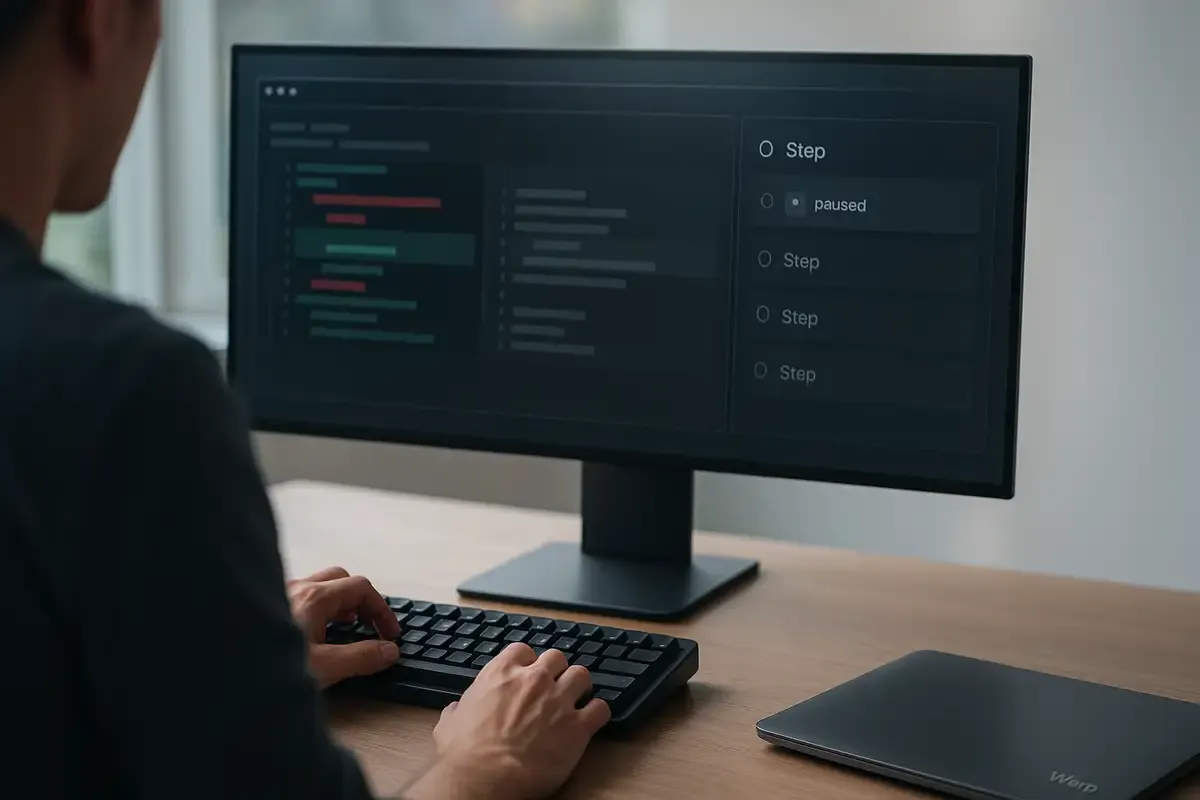

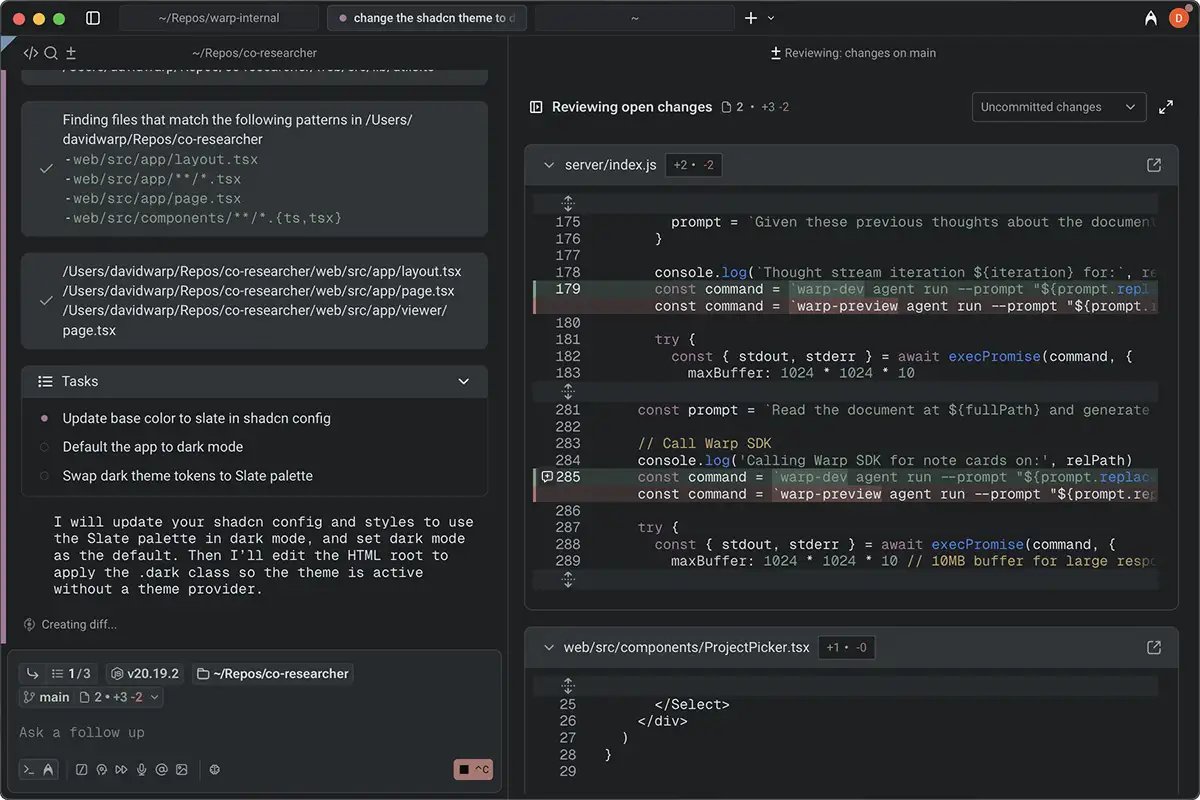

“Warp Code” targets the most common agent complaint: opaque output that’s risky to ship. The fix is tight human oversight. Developers see step-by-step rationale, inspect diffs, and make quick edits in place. Less context switching. More accountability.

Crucially, you can redirect the agent without killing it. Warp pauses execution, updates an internal task list, then continues under the new instructions. That’s the right kind of control. It feels native to terminal workflows rather than a GUI being clicked by a bot. Good.
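To make the pause, update, continue loop concrete, here is a minimal sketch of a steerable task list. It is a toy model, not Warp's implementation: the `SteerableAgent` class, its `step` and `steer` methods, and the task names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    description: str
    done: bool = False


@dataclass
class SteerableAgent:
    """Toy agent (hypothetical): can be redirected without losing finished work."""
    tasks: list[Task] = field(default_factory=list)
    log: list[str] = field(default_factory=list)

    def step(self) -> bool:
        """Run the next pending task; return False when nothing is left."""
        for task in self.tasks:
            if not task.done:
                task.done = True
                self.log.append(f"ran: {task.description}")
                return True
        return False

    def steer(self, drop: set[str], add: list[str]) -> None:
        """Pause point: keep completed tasks, revise only the pending ones."""
        kept = [t for t in self.tasks if t.done or t.description not in drop]
        self.tasks = kept + [Task(d) for d in add]
        self.log.append("steered")


agent = SteerableAgent(
    [Task("write migration"), Task("update ORM models"), Task("deploy")]
)
agent.step()  # the first task completes before the developer interrupts
agent.steer(drop={"deploy"}, add=["add rollback script", "run tests"])
while agent.step():  # execution resumes under the revised plan
    pass
print(agent.log)
```

The point of the sketch is that the completed "write migration" step survives the interruption; only the pending part of the plan is rewritten.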

What runs under the hood

Warp isn’t anchored to a single model. By default, the app pairs an Anthropic model for day-to-day work with an OpenAI planning model for breaking tasks into steps. In practice: Claude Sonnet 4 as the base (“Auto”) and o3 for planning. A lighter preset uses Claude 3.5 Sonnet. Power users can switch to alternatives like GPT-5, GPT-4.1/4o, or Gemini 2.5 Pro in the model picker.

Enterprises get knobs that matter: bring-your-own LLM and enforced zero-data-retention with contracted providers. Model choice can be set per agent profile, so teams can, for example, run a faster model for compiler-error auto-fixes and a higher-reasoning model for planning. It’s pragmatic, not doctrinaire.
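One way to picture per-profile model choice is a small lookup table. The sketch below is illustrative only: `AgentProfile`, `pick_model`, the task-type keys, and the model identifier strings (including the `fast-model` placeholder) are assumptions, not Warp's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical profile: one model for edits, one for planning."""
    name: str
    base_model: str      # handles day-to-day coding work
    planning_model: str  # breaks a task into steps


# Identifier strings are placeholders, not real API model names.
PROFILES = {
    "default": AgentProfile("default", "claude-sonnet-4", "o3"),
    "quick-fix": AgentProfile("quick-fix", "fast-model", "fast-model"),
}

# Which profile handles which kind of task (assumed mapping).
TASK_TO_PROFILE = {"compiler_error": "quick-fix"}


def pick_model(task_type: str, phase: str) -> str:
    """Return the model for a task type and phase ('plan' or 'edit')."""
    profile = PROFILES[TASK_TO_PROFILE.get(task_type, "default")]
    return profile.planning_model if phase == "plan" else profile.base_model


print(pick_model("compiler_error", "edit"))  # fast-model
print(pick_model("feature", "plan"))         # o3
print(pick_model("feature", "edit"))         # claude-sonnet-4
```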

The pricing signal

A $200 monthly tier for a “terminal” would have been laughable two years ago. Warp isn’t selling a terminal anymore. It’s selling time saved and defects avoided.

The lineup is tiered. Pro sits at $15/month billed annually (or $18 billed monthly), with roughly 2,500 AI requests and modest indexing. Turbo moves to $40–50/month and 10,000 requests. Business runs $55–60/month with team controls and SSO; it also unlocks bring-your-own LLM. The controversial one is Lightspeed at $200/month billed annually (or $225 billed monthly), which lifts ceilings to 50,000 AI requests and boosts codebase indexing limits to 100,000 files per codebase.
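A quick way to compare the tiers is the implied price per included request. The sketch below uses the lower price quoted for each tier and leaves out Business, since no request quota is given for it; the `cents_per_request` helper is just for illustration.

```python
# (monthly price in USD, included AI requests), lower quoted price per tier
TIERS = {
    "Pro": (15, 2_500),
    "Turbo": (40, 10_000),
    "Lightspeed": (200, 50_000),
}


def cents_per_request(price_usd: float, requests: int) -> float:
    """Implied cost of one included request, in US cents."""
    return round(price_usd * 100 / requests, 2)


for name, (price, requests) in TIERS.items():
    print(f"{name}: {cents_per_request(price, requests)} cents/request")
# Pro: 0.6 cents/request
# Turbo: 0.4 cents/request
# Lightspeed: 0.4 cents/request
```

On these numbers Lightspeed is no cheaper per request than Turbo, so the premium is for the higher ceiling and indexing limits rather than a volume discount.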

Is $200 steep? Compared to mainstream coding-assistant tools, yes. GitHub Copilot Business is $19/user/month (Enterprise is $39). Cursor’s Pro tier sits around $20/month, while Windsurf/Codeium Pro is $15/month. Warp’s premium is essentially for a bundled, agent-first workflow with high request quotas, big indexing, and data-handling commitments. If you don’t need that headroom, the lower tiers exist. Most teams won’t need it.
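The multiples are easy to check from the list prices quoted above. This is plain arithmetic on the article's figures, nothing more; the `multiple` helper is illustrative.

```python
LIGHTSPEED_USD = 200

# Monthly per-seat prices as quoted in the article
COMPETITORS = {
    "GitHub Copilot Business": 19,
    "GitHub Copilot Enterprise": 39,
    "Cursor Pro": 20,
    "Windsurf/Codeium Pro": 15,
}


def multiple(price_usd: float) -> float:
    """How many times more expensive Lightspeed is than the given price."""
    return round(LIGHTSPEED_USD / price_usd, 1)


for name, price in COMPETITORS.items():
    print(f"{name}: {multiple(price)}x")
# GitHub Copilot Business: 10.5x
# GitHub Copilot Enterprise: 5.1x
# Cursor Pro: 10.0x
# Windsurf/Codeium Pro: 13.3x
```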

Enterprise reality check

Large buyers are piloting multiple agent tools and still grappling with measurement. Developer surveys carry weight; hard productivity metrics remain slippery. The gating issue is context: getting agents to respect org-specific conventions, dependencies, and security constraints.

Warp’s answer is project-level rule files—WARP.md (compatible with AGENTS.md and CLAUDE.md)—that bind instructions to repos. Combined with multi-agent support and task lists, the goal is consistent behavior across sessions and teams. It won’t solve everything, but it helps reduce drift.
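As a rough illustration of what "binding instructions to a repo" can mean, the sketch below looks for a rule file by walking up from a working directory. The lookup order and the walk-up behavior are assumptions made for the example, not documented Warp behavior, and `find_rule_file` is a hypothetical helper.

```python
from pathlib import Path
from typing import Optional

# File names come from the article; the precedence order is an assumption.
RULE_FILES = ("WARP.md", "AGENTS.md", "CLAUDE.md")


def find_rule_file(start: Path) -> Optional[Path]:
    """Return the nearest rule file at or above `start`, or None if absent."""
    for directory in (start, *start.parents):
        for name in RULE_FILES:
            candidate = directory / name
            if candidate.is_file():
                return candidate
    return None
```

Calling `find_rule_file(Path.cwd())` from anywhere inside a repository would return the closest matching file, which is the property that keeps agent behavior consistent across sessions.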

From collaboration dream to individual boost

Warp’s pre-AI roadmap leaned into multiplayer terminal workflows, echoing Lloyd’s Google Docs past. AI flipped the script. Instead of making terminals more social, it made each developer far more capable. The center of gravity moved from shared sessions to personal agent horsepower.

The irony: the fastest route to team productivity was a better single-player mode. It happens.

Limitations and caveats

Agent code still needs human review, especially in critical paths. Multi-agent coordination is early, and results vary across stacks. Cost can climb quickly if teams treat agents like unlimited compute. Culture and training matter as much as models. That’s the honest state of play.

Why this matters

- AI is resetting developer-tool pricing from “utility” to “outcome,” rewarding platforms that save hours and reduce defects—even if sticker prices shock at first.

- Enterprises will consolidate around agent-first environments that preserve context and oversight, pressuring point solutions to merge or fade.

❓ Frequently Asked Questions

Q: How does Warp's $200/month pricing compare to other coding tools?

A: Warp's Lightspeed tier costs roughly 5x more than GitHub Copilot Enterprise ($39/month) and about 10x more than Copilot Business ($19/month) or Cursor Pro ($20/month). The premium buys 50,000 AI requests (versus Copilot's unlimited model access), plus enterprise data controls and large-scale codebase indexing that smaller tools don't offer.

Q: What does "steering the agent mid-flight" actually mean?

A: Warp agents maintain internal task lists as they work. When you interrupt with new instructions, the agent pauses, updates its task list based on your input, then continues working—no need to restart from scratch. Think of it like redirecting a running process rather than killing and relaunching it.

Q: Who's actually paying $200/month for a terminal tool?

A: Enterprise teams where developer time costs $100+ per hour. At that rate, a developer who saves 2 hours a month covers the $200 seat, and anything beyond that is net gain (CEO Lloyd mentions users reporting hour-long tasks replacing two-week struggles). Heavy users are hitting request limits and paying overages, which suggests they are getting real value.
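The break-even point follows directly from the two figures in the answer above. A small sketch, with hypothetical helper names and ignoring overage charges, since the article gives no overage rate:

```python
def break_even_hours(seat_price_usd: float, hourly_cost_usd: float) -> float:
    """Hours a developer must save per month to cover the seat price."""
    return seat_price_usd / hourly_cost_usd


def monthly_net_value(hours_saved: float, hourly_cost_usd: float,
                      seat_price_usd: float) -> float:
    """Value of time saved minus the seat price, in USD per month."""
    return hours_saved * hourly_cost_usd - seat_price_usd


print(break_even_hours(200, 100))      # 2.0
print(monthly_net_value(2, 100, 200))  # 0
print(monthly_net_value(5, 100, 200))  # 300
```

At $100 per hour, two saved hours only pays for the seat; the case for the tier rests on savings well beyond that.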

Q: How does Warp's multi-model approach work in practice?

A: Different models handle different tasks: Claude Sonnet 4 for main coding work, OpenAI o3 for breaking tasks into steps, and lighter models for quick fixes like compiler errors. Teams can set model preferences per agent profile, optimizing for speed versus reasoning power based on the specific task type.

Q: What makes this different from just using ChatGPT or Claude for coding help?

A: Integration and persistence. Warp agents can execute terminal commands, access your entire codebase context, maintain conversation history across sessions, and work within your actual development environment. ChatGPT requires constant copy-pasting between browser and terminal—Warp eliminates context switching entirely.