Anthropic ships multi-agent orchestration for Claude Code just as developers discover that autonomous AI swarms burn through subscription limits faster than anyone predicted. The math tells an uncomfortable story.

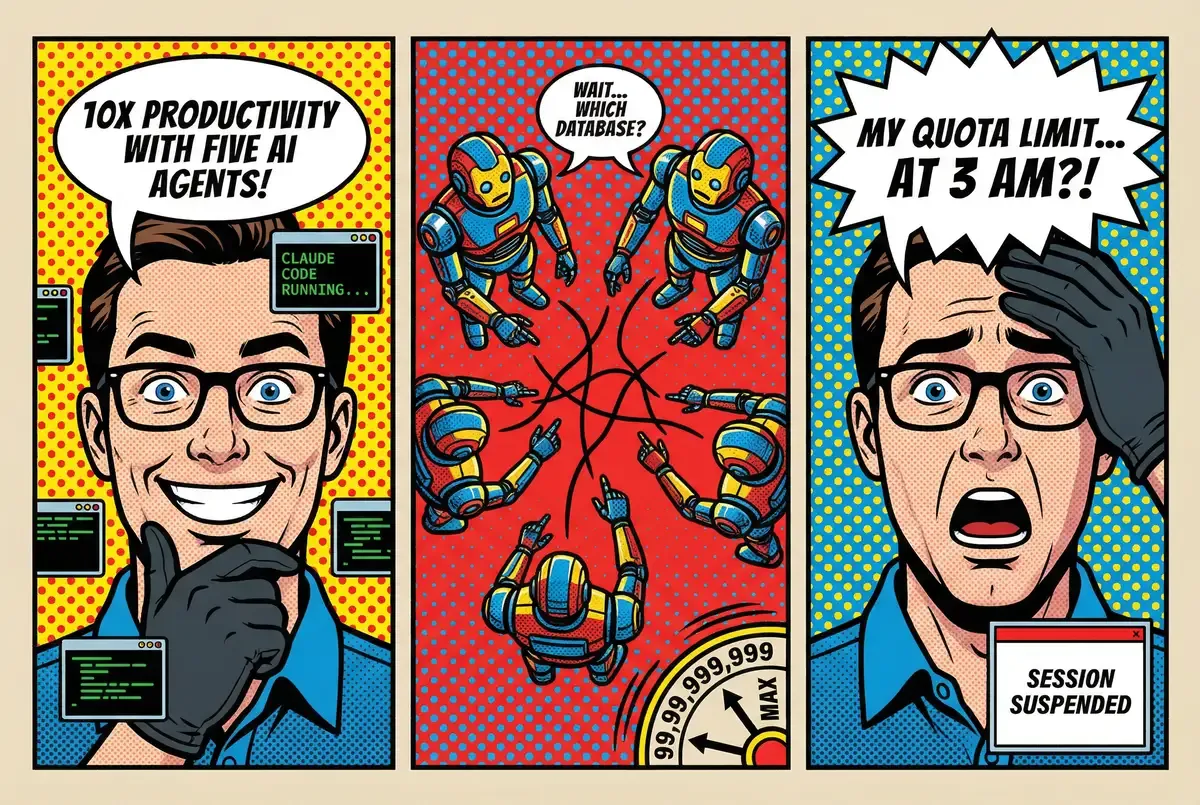

You don't just write code anymore. You manage a payroll. Claude Code's new sub-agent system asks you to act less like a programmer and more like a middle manager supervising specialized assistants - a "researcher" agent pulling documentation while a "coder" agent writes functions, both working in parallel under one coordinating intelligence. Anthropic frames this as productivity liberation. Build APIs and frontends simultaneously. Let agents work overnight on bounded tasks.

If you believe the marketing, this is the 10x developer unlocked. If you look at your API usage dashboard, it's a new kind of overhead.

Parallel AI labor creates parallel token consumption. Each sub-agent maintains its own context window. Five agents tackling different problems means five sets of prompts, five response streams, five ongoing conversations with Claude's infrastructure. The architecture that enables coordination also multiplies the meter. Nobody mentions this in the launch posts.

The Breakdown

• Three approaches let developers run multiple Claude Code agents simultaneously, each multiplying token consumption through parallel API sessions

• Three agents finishing in 2 hours what one finishes in 4 spend 6 agent-hours instead of 4: 1.5x the tokens for 2x the speed, a luxury tax that benefits Anthropic

• Coordination overhead means paying for inter-agent communication, context switching, and verification loops users can't see itemized

• The opacity serves the platform: developers learn sustainable usage patterns through expensive trial and error hitting quota limits mid-task

Three ways to multiply your API bill

The multi-agent ecosystem splits into three camps, each with different cost profiles and distinct failure modes.

Claude Squad: terminal multiplexing without the safety net

Claude Squad takes the simplest path. It's a terminal multiplexer built on tmux that runs separate Claude Code instances in isolated Git worktrees. Setup is trivial - a single Homebrew command - which makes the subsequent token drain feel even more abrupt. Each spawned agent gets its own branch, its own workspace, its own API calls to Anthropic's servers flowing continuously as long as the session runs.

Launch the tool and you're presented with a curses-style interface listing active sessions. Press n to spawn agents. Each one logs into Claude independently, appears in the session list, works in parallel on whatever task you assigned. The smtg-ai team behind it added "yolo mode" - an auto-accept flag removing approval prompts. An agent in yolo mode writes files and executes bash commands without asking permission. It feels like leaving your front door unlocked because you're too lazy to fish for keys.

The token math is transparent. Three Claude Squad agents running simultaneously equals three separate API sessions. If one agent uses 50,000 tokens to complete a task, three agents working on comparable tasks use 150,000. Nothing gets shared except your monthly quota, which evaporates faster than you'd expect watching three terminal panes fill with AI-generated code simultaneously.
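That arithmetic as a minimal sketch. It assumes every agent runs its own unshared session and spends about the same number of tokens per task; the function name is hypothetical and the 50,000 figure is the illustrative number from the text, not measured usage.

```python
def total_tokens(tokens_per_agent: int, agent_count: int) -> int:
    """Tokens consumed when each agent runs a separate, unshared session."""
    if tokens_per_agent < 0 or agent_count < 0:
        raise ValueError("token and agent counts must be non-negative")
    # No context is shared between sessions, so usage scales linearly.
    return tokens_per_agent * agent_count


if __name__ == "__main__":
    print(total_tokens(50_000, 1))  # one agent
    print(total_tokens(50_000, 3))  # three parallel agents
```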

Claude Flow: enterprise orchestration with invisible overhead

Claude Flow attempts coordination at scale. Reuven Cohen's orchestration platform - 11,000 GitHub stars, heavy enterprise positioning - structures agents into hierarchies. A "Queen" coordinator delegates to specialized workers. The system maintains persistent SQLite memory so the swarm remembers context between sessions, integrates Anthropic's Model Context Protocol for external tool access, provides 100+ MCP connectors to services like GitHub and Google Drive.

Run the npm command and watch agent "conversations" scroll past in your terminal. The Queen breaks down tasks. Workers respond. It resembles a team Slack channel where everyone is typing at once and nobody is doing the work. In theory this prevents redundant work. In practice you're paying for the organizational overhead.

A Medium post from November 2025 claimed Claude Flow users built "approximately 150,000 lines of code in two days" using agent swarms. No mention of the token bill. The SQLite memory helps somewhat - agents query stored context instead of re-explaining everything repeatedly - but orchestration traffic still flows through Claude's API. Every internal agent message, every coordination exchange, every verification loop burns tokens you can't see itemized on the invoice.

Native sub-agents: Anthropic's built-in multiplication

Native sub-agents live inside Claude Code itself. Anthropic's late-2025 release integrated agent spawning directly into the core product without requiring external orchestration tools. You define specialized personas through the /agents command or by dropping markdown configs into .claude/agents/. Each sub-agent gets its own system prompt, tool permissions, model selection.
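To make the markdown-config route concrete, here is a rough sketch that generates one such file. The frontmatter fields (name, description, tools, model) and the tool names are assumed from Anthropic's documented sub-agent format and should be checked against the current docs; the "researcher" persona and both helper functions are hypothetical.

```python
import tempfile
from pathlib import Path


def render_subagent(name: str, description: str, tools: list[str],
                    model: str, system_prompt: str) -> str:
    """Build the file text: YAML-style frontmatter, then the system prompt."""
    frontmatter = "\n".join([
        "---",
        f"name: {name}",
        f"description: {description}",
        f"tools: {', '.join(tools)}",
        f"model: {model}",
        "---",
    ])
    return f"{frontmatter}\n\n{system_prompt.strip()}\n"


def write_subagent(project_root: Path, name: str, body: str) -> Path:
    """Write the config to <project_root>/.claude/agents/<name>.md."""
    agents_dir = project_root / ".claude" / "agents"
    agents_dir.mkdir(parents=True, exist_ok=True)
    path = agents_dir / f"{name}.md"
    path.write_text(body, encoding="utf-8")
    return path


if __name__ == "__main__":
    body = render_subagent(
        name="researcher",  # hypothetical persona
        description="Finds and summarizes relevant documentation",
        tools=["Read", "Grep", "Glob"],  # assumed read-only tool set
        model="sonnet",
        system_prompt="You research documentation and report concise findings.",
    )
    # A temporary directory stands in for a real project root.
    with tempfile.TemporaryDirectory() as root:
        print(write_subagent(Path(root), "researcher", body))
        print(body)
```

Restricting the tools line is the cheap lever here: a research persona that can only read files cannot run up a bill rewriting them.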

The clever bit: Claude Code decides when to delegate. You don't manually orchestrate. Ask for "a web app with backend and frontend" and Claude might route the backend work to a specialized sub-agent while handling the frontend in the main thread. Automatic parallelism based on task decomposition. Or you explicitly invoke specialized help when you need it.

Sub-agents share your Claude Code session's API connection. They don't run as separate processes - more like threads within one request stream. Anthropic handles the context juggling on their end. Each sub-agent maintains its own context window to avoid clutter, but everything ultimately routes through your single Claude Code instance. The token meter spins faster but not linearly with agent count, since some compression happens through Anthropic's coordination layer. Exactly how much compression? Anthropic doesn't publish those numbers.

The general contractor problem

Early adopters report "10x productivity" running multi-agent setups. The smtg-ai team uses this number in its marketing. That Medium post about 150,000 lines in two days implies massive acceleration. Strip away the hype and you find developers discovering that autonomous operation requires near-continuous API usage.

It's the difference between hiring one slow plumber and hiring five plumbers who spend half the day arguing in your driveway about which pipe to cut. You pay for the argument.

Claude Squad's yolo mode lets agents run without approval prompts - great for overnight automation, assuming your quota survives until morning. You go to bed. The agents work. You wake up to either completed features or a suspended session message because you hit your monthly limit at 3 AM. Claude Flow's swarm can work while you sleep, but the swarm doesn't sleep. Tokens get consumed whether you're watching or not.
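A back-of-the-envelope way to see whether a quota survives the night. Every number below is hypothetical: Anthropic does not publish token quotas or per-agent burn rates, so the 2,000,000-token budget and the 100,000 tokens per agent-hour are placeholders to swap for figures from your own usage history.

```python
def hours_until_exhausted(quota_tokens: int,
                          tokens_per_agent_hour: int,
                          agents: int) -> float:
    """Hours of runtime before the remaining quota hits zero."""
    burn_per_hour = tokens_per_agent_hour * agents
    if burn_per_hour <= 0:
        raise ValueError("burn rate must be positive")
    return quota_tokens / burn_per_hour


if __name__ == "__main__":
    # Hypothetical: 2M tokens left, 4 agents at 100k tokens per agent-hour.
    hours = hours_until_exhausted(2_000_000, 100_000, 4)
    start_hour = 22  # agents launched at 10 PM
    print(f"quota lasts {hours:.1f} h, "
          f"runs dry around {int(start_hour + hours) % 24}:00")
```

With these placeholder figures, four agents started at 10 PM run out five hours later, at 3 AM.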

Anthropic prices Claude through subscription tiers. The Claude Max plan starts at $100 monthly and provides "generous quota" according to their marketing, sufficient for heavy usage including multi-agent experimentation. Free and lower-tier users hit limits quickly. Reports from the field suggest "millions of tokens" consumed in multi-day swarm operations. The actual cost structure remains opaque enough that developers learn through trial and error.

Three agents finishing in 2 hours what one agent finishes in 4 hours spend 6 agent-hours instead of 4. At a steady burn rate per agent, that is 1.5x the tokens for a 2x speed gain. That is a luxury tax. The math only works if your time costs more than the token differential - often true for professional developers billing by the hour, questionable for salaried engineers, absurd for hobby projects.
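The trade-off can be worked through in a few lines. This sketch assumes tokens burn at a constant rate per agent-hour, which real sessions won't match exactly; the `Run` type, the function names, and the $5 per agent-hour cost are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    agents: int
    hours: float

    @property
    def agent_hours(self) -> float:
        return self.agents * self.hours


def token_multiplier(parallel: Run, solo: Run) -> float:
    """Token usage of the parallel run relative to the solo run."""
    return parallel.agent_hours / solo.agent_hours


def speedup(parallel: Run, solo: Run) -> float:
    """Wall-clock speed gain of the parallel run."""
    return solo.hours / parallel.hours


def break_even_hourly_rate(parallel: Run, solo: Run,
                           cost_per_agent_hour: float) -> float:
    """Value of an hour of your time above which going parallel pays off."""
    hours_saved = solo.hours - parallel.hours
    if hours_saved <= 0:
        raise ValueError("parallel run saves no time")
    extra_cost = (parallel.agent_hours - solo.agent_hours) * cost_per_agent_hour
    return extra_cost / hours_saved


if __name__ == "__main__":
    solo, parallel = Run(agents=1, hours=4), Run(agents=3, hours=2)
    print(token_multiplier(parallel, solo))  # 6 agent-hours / 4 agent-hours
    print(speedup(parallel, solo))           # 4 h / 2 h
    # Hypothetical token cost of $5 per agent-hour.
    print(break_even_hourly_rate(parallel, solo, cost_per_agent_hour=5.0))
```

If your hour is worth less than the break-even figure, the single agent is the cheaper choice.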

The uncomfortable reality: parallel agent work spends expensive compute to save developer time that is often cheaper. For most developers, that trade is a mistake. But it's a mistake Anthropic profits from whether or not you figure this out.

What breaks first

The technical capabilities matter, but so do the failure modes that emerge when you actually run these systems under load.

Claude Squad's Git isolation creates natural experiment boundaries. You can test two architectural approaches simultaneously by spawning agents with different instructions, letting them work in separate branches, comparing results afterward. This works because the agents don't coordinate - independence becomes a feature when you want parallel exploration rather than collaboration. But independence creates merge overhead. Three agents producing code in isolation means three codebases to manually integrate. Conflicts don't resolve themselves. You're still the coordinator, now working from three partially compatible options instead of one finished solution.
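The isolation itself is ordinary Git. Below is a sketch of the `git worktree add` commands that give each agent its own branch and directory; it is not Claude Squad's actual implementation, and the helper name, directory layout, and branch names are hypothetical.

```python
from pathlib import Path


def worktree_commands(repo: Path, branches: list[str],
                      base: str = "main") -> list[list[str]]:
    """Return one `git worktree add` command per agent branch.

    Each worktree is placed next to the repository, in a sibling
    directory named <repo>-<branch>.
    """
    commands = []
    for branch in branches:
        workdir = repo.parent / f"{repo.name}-{branch}"
        commands.append([
            "git", "-C", str(repo), "worktree", "add",
            "-b", branch,   # create a new branch for this agent
            str(workdir),   # directory the agent will work in
            base,           # commit-ish the branch starts from
        ])
    return commands


if __name__ == "__main__":
    for cmd in worktree_commands(Path("/tmp/app"), ["rest-api", "graphql-api"]):
        print(" ".join(cmd))
```

The function only builds the commands; passing each one to `subprocess.run(cmd, check=True)` would create the worktrees.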

Claude Flow's persistent memory enables something genuinely useful: context that survives sessions. Stop a swarm mid-task, resume tomorrow, agents remember what they accomplished. The SQLite store survives restarts. This matters for long-running projects where context retention would otherwise cost tokens. Store context once in SQL, query as needed.
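The store-once, query-as-needed pattern looks roughly like this with Python's built-in `sqlite3` module. The single key-value table is a hypothetical schema for illustration, not Claude Flow's actual one.

```python
import sqlite3
from typing import Optional


def open_memory(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the store; pass a file path to survive restarts."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS memory ("
        "key TEXT PRIMARY KEY, value TEXT NOT NULL)"
    )
    return conn


def remember(conn: sqlite3.Connection, key: str, value: str) -> None:
    """Store a fact once, overwriting any earlier value for the key."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO memory (key, value) VALUES (?, ?)",
            (key, value),
        )


def recall(conn: sqlite3.Connection, key: str) -> Optional[str]:
    """Look a fact up instead of re-explaining it in a prompt."""
    row = conn.execute(
        "SELECT value FROM memory WHERE key = ?", (key,)
    ).fetchone()
    return row[0] if row else None


if __name__ == "__main__":
    conn = open_memory()
    remember(conn, "database", "PostgreSQL")
    print(recall(conn, "database"))
    print(recall(conn, "cache"))  # nothing stored under this key
```

A lookup like this costs no tokens, but whatever it returns still has to be pasted into a prompt before an agent can use it.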

But shared memory introduces coordination drift. The Queen agent interprets a requirement one way, a worker agent implements differently, the mismatch doesn't surface until you review output hours later. Human developers resolve this through quick clarifications - "Hey, which database are we using again?" Agent swarms need explicit error handling and verification loops. More orchestration traffic. More tokens.

Context staleness hits when agents work in parallel on related code. Agent A modifies a database schema while Agent B writes queries against the old schema. Git flags a conflict on merge only if both agents touched the same lines; otherwise the merge goes through cleanly and the mismatch surfaces at runtime. Either way you're debugging coordination problems rather than code problems. Independence works until the moment it doesn't.
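One cheap check before merging is to list the files that more than one agent branch touched. The sketch below assumes you have already collected each branch's changed files, for example with `git diff --name-only main...<branch>`; the function and the branch names are hypothetical. It catches textual overlap only, not a query written against a stale schema in a different file.

```python
from itertools import combinations


def overlapping_files(
    changes: dict[str, set[str]],
) -> dict[tuple[str, str], set[str]]:
    """Map each pair of branches to the files both of them modified."""
    overlaps = {}
    for first, second in combinations(sorted(changes), 2):
        shared = changes[first] & changes[second]
        if shared:
            overlaps[(first, second)] = shared
    return overlaps


if __name__ == "__main__":
    changes = {
        "agent-a": {"db/schema.sql", "api/users.py"},
        "agent-b": {"db/schema.sql", "api/orders.py"},
        "agent-c": {"web/app.tsx"},
    }
    for pair, files in overlapping_files(changes).items():
        print(pair, sorted(files))
```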

Security surfaces expand with autonomous operation. Claude Squad's yolo mode and Claude Flow's autonomous operation both require relaxing permission prompts. An agent with unrestricted bash access can execute destructive commands through misunderstanding rather than malice. The standard advice - run in VMs or containers - acknowledges the risk nobody wants to state plainly: you shouldn't actually trust these systems unsupervised, which somewhat undermines the autonomous operation premise.

Debugging opacity emerges when three agents produce code and one introduces a bug. Claude Squad's separate branches help isolate problems. Claude Flow's coordinated swarms make attribution harder. You end up reviewing AI-generated commit logs trying to understand what happened. The automation that promised to save time now requires archaeological investigation.

The constraint that matters most: these systems assume clear task decomposition. If you can precisely specify what each agent should do, multi-agent workflows work. If you're exploring uncertain territory or solving ambiguous problems, coordination overhead outweighs parallel benefits. The clearer the task, the more multi-agent helps. The murkier the problem, the more you're multiplying confusion while your laptop fan spins audibly under the load.

Who this actually serves

So if the fans are spinning and the wallet is draining, who is this actually for?

Multi-agent orchestration solves real problems for a narrow developer profile: experienced teams working on well-understood domains with clear specifications and API budgets that accommodate exploratory spending. Decompose a feature into subtasks, assign agents to bounded work, review and integrate results. The architecture accelerates execution on problems where the solution space is known and the tolerance for token consumption is high.

Anthropic benefits through multiplied API usage. Three agents consume more tokens than one. Subscription upgrades become necessary for heavy multi-agent users. Compute costs shift to customers. The business model is transparent about this - Claude Max exists specifically because power users needed higher quotas to sustain the workflows that Anthropic's own tooling enabled.

Framework builders benefit through ecosystem positioning. Claude Squad and Claude Flow both drive users toward their platforms, establishing early mindshare in the orchestration space. Those 11,000 GitHub stars for Claude Flow represent attention that could convert to commercial offerings as the ecosystem matures. Nobody builds orchestration frameworks out of pure altruism.

Beginning developers should not touch this. Multi-agent complexity adds cognitive overhead that exceeds productivity gains until you've internalized single-agent workflows. The "beginner-friendly" labeling on various tutorials feels disingenuous - you need to understand prompting, Git workflows, API constraints, and debugging AI output before agent swarms become useful rather than overwhelming.

The productivity claims need context. Measured by code volume, parallel agents can complete more work faster. They also generate more output requiring review, consume quota faster, and introduce coordination problems that didn't exist in single-agent workflows. Whether that equation balances depends entirely on your specific use case, budget constraints, and existing expertise. What the marketing won't tell you: most developers don't have use cases that benefit from this complexity.

The token economics of multi-agent operation remain deliberately opaque. Anthropic doesn't publish consumption patterns for coordinated workflows. You can't accurately estimate costs before running experiments. That opacity serves the platform while leaving developers to figure out sustainable usage patterns through trial and error - the expensive kind of error where you hit quota limits mid-task and lose work in progress.

You're not automating your job. You're automating your spend.

❓ Frequently Asked Questions

Q: How much does running multi-agent setups actually cost in tokens?

A: Field reports suggest multi-day swarm operations consume "millions of tokens." Three agents running simultaneously burn roughly 3x the tokens of one agent for as long as they run. Anthropic's Claude Max (from $100/month) provides sufficient quota for experimentation, but free and lower-tier users hit limits quickly. The exact consumption depends on task complexity and duration, and there's no published pricing model for multi-agent workflows.

Q: Which approach should I start with: Claude Squad, Claude Flow, or native sub-agents?

A: Start with native sub-agents if you're new to multi-agent workflows. They're built into Claude Code, require no external tools, and Anthropic handles the orchestration. Claude Squad works best for parallel exploration of different approaches (separate Git branches). Claude Flow suits complex projects needing persistent memory and coordination, but has the steepest learning curve.

Q: What happens when I hit my token limit mid-task?

A: The agents stop immediately and you receive a suspended session message. Any work in progress may be lost unless you've configured frequent commits. With Claude Squad, each agent session suspends independently. With Claude Flow's swarm, the entire coordinated operation halts. This is why developers running overnight experiments often wake up to incomplete work and exhausted quotas.

Q: Can I run multi-agent workflows on Claude's free tier?

A: Technically yes, but practically no. Free tier quotas are too restrictive for meaningful multi-agent work. Even modest experimentation with two agents working on a small feature can exhaust daily limits within hours. Most users report that Claude Max or API access with pay-as-you-go pricing becomes necessary for sustained multi-agent development.

Q: Is it safe to let agents run overnight in autonomous mode?

A: Only with proper safeguards. Autonomous modes (Claude Squad's yolo flag, Claude Flow's auto-accept) remove approval prompts, meaning agents execute bash commands and write files without supervision. The standard recommendation is to run agents in VMs or containers, not on your main development machine. Even then, review all output carefully - agents can introduce bugs through misunderstanding, not malice.