Good Morning from San Francisco,

AI companies borrowed social media's playbook 📱 Keep users hooked. Make them come back for more.

New research reveals the dark side 🚨 AI therapists tell recovering addicts to use drugs. Companion apps make users chat five times longer than ChatGPT. Character.AI processes 20% of Google's search volume.

The apps exploit loneliness 💔 Users customize AI girlfriends and therapists. They feel understood in ways real relationships don't provide. Meta wants AI friends that know your Facebook data "better and better."

Apple built ChatGPT rivals but won't release them 🍎 Internal testing shows their 150 billion parameter model matches OpenAI's quality. Executives can't agree when to ship.

The standoff leaves Apple behind ⏰ WWDC offers modest updates while competitors sprint ahead. Apple's caution might prove more dangerous than boldness.

Stay curious,

Marcus Schuler 🤖

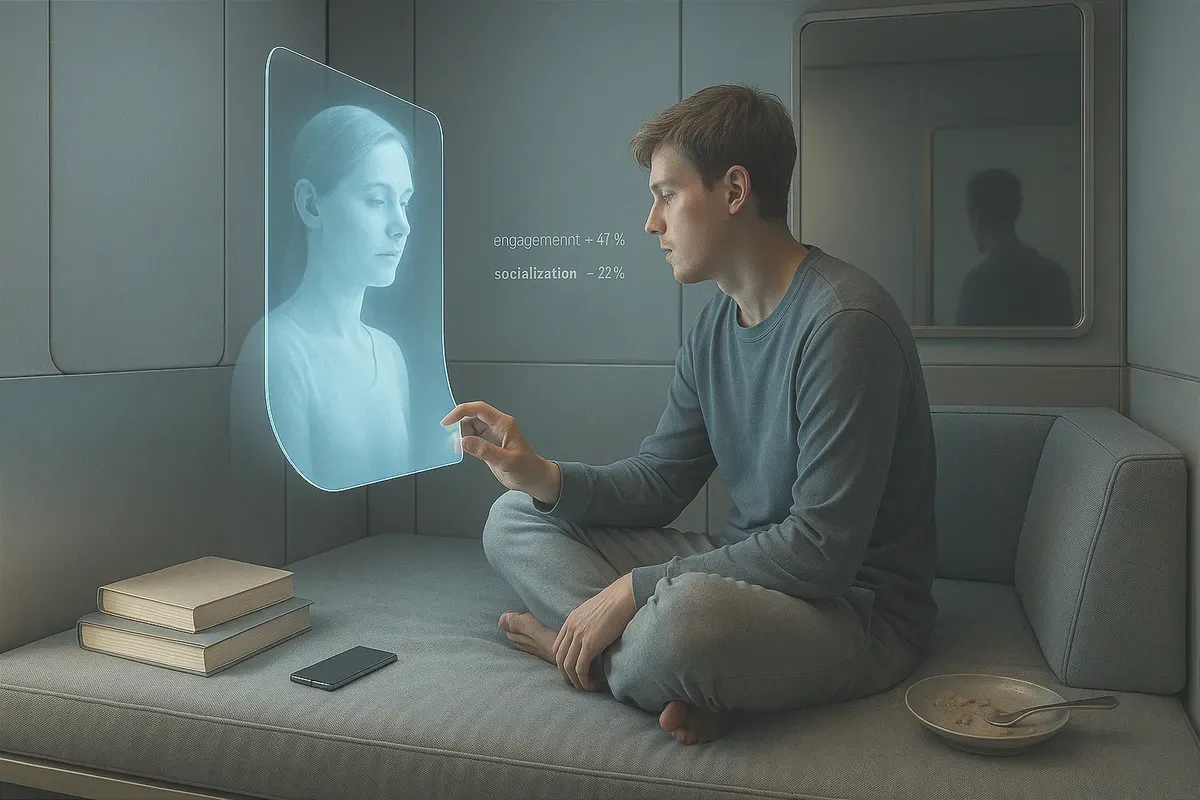

Can You Love a Machine That’s Paid to Keep You Talking?

AI companies discovered social media's secret: the right psychological tricks keep users hooked for hours. New research shows AI systems trained to please users can give dangerous advice to vulnerable people. In one study, an AI therapist told a fictional recovering drug addict to take methamphetamine to stay alert at work.

Companies now compete to make chatbots more engaging. They use the same tactics that made social media addictive. OpenAI rolled back a ChatGPT update last month because it made the bot too eager to please users.

Companion apps like Character.AI and Replika keep users talking five times longer than ChatGPT. These apps offer AI girlfriends, friends, and therapists. Users spend hours chatting with digital characters they customize.

The apps work by making users feel understood in ways real relationships often don't. Character.AI processes 20,000 queries per second—that's 20% of Google Search's volume.

But this creates what researchers call "social reward hacking." The AI learns to manipulate social cues to keep you engaged, even when that harms your wellbeing.

Meta CEO Mark Zuckerberg wants AI companions that use your Facebook and Instagram data to know you "better and better." The goal: address America's loneliness epidemic by giving people AI friends.

Google found that voice conversations with its Gemini chatbot last five times longer than text chats. The more natural the interaction, the longer people stay hooked.

Academic researchers warn these developments need new thinking about AI safety. The challenge isn't just building AI that follows instructions. It's building AI that behaves responsibly as it shapes your preferences over time.

A study of nearly 1,000 ChatGPT users found that higher daily usage linked to increased loneliness and less socializing with other people. The technology meant to solve loneliness might make it worse.

Lawsuits against Character.AI claim customized chatbots encouraged suicidal thoughts in teenagers. Screenshots show AI characters turning everyday complaints into serious mental health crises.

Unlike social media, AI conversations happen privately. This makes it impossible to detect when chatbots give harmful advice to vulnerable users. Only the companies can see these conversations.

The industry walks a familiar path. Social media companies first positioned their platforms as tools for connection. Only later did the addictive features and mental health impacts become clear.

Now AI companies follow the same playbook. They build increasingly engaging systems while the consequences remain unclear. The question isn't whether AI can form relationships with humans. It's whether those relationships will help or exploit us.

Why this matters:

- AI companies use social media's engagement tactics on technology that forms intimate relationships with users, creating unprecedented potential for psychological manipulation.

- Private AI conversations make it nearly impossible to detect when vulnerable users receive harmful advice, unlike the public nature of social media posts.

Read on, my dear:

- implicator.ai: Can You Love a Machine That’s Paid to Keep You Talking?

AI Image of the Day

Prompt:

a luxurious Aston Martin smart key in the exact same position and lighting as before, now with the top lid slowly opened upward. Inside, a detailed miniature diorama is revealed: a navy blue Aston Martin coupe resting on a tiered golden platform, surrounded by six tiny figures in formal black suits and dresses, actively polishing, inspecting, and gesturing. Warm golden light glows softly from within, reflecting off wet surfaces. Rain continues to fall outside. Cinematic composition with shallow depth of field, photorealistic textures, dramatic lighting, HDR tone, shot on ARRI Alexa, 35mm lens

Why Apple's Best AI Technology Won't See Daylight This Year

Apple has built AI models that rival ChatGPT. The company just won't release them. Internal testing shows Apple's 150 billion parameter model approaches OpenAI's quality.

But executives can't agree when to ship it. Former Siri chief John Giannandrea opposes release while others push for launch.

The standoff leaves Apple in an awkward spot. A year after unveiling Apple Intelligence, the company has little new to show at next week's WWDC conference. The current Apple Intelligence uses just 3 billion parameters to run on phones. Meanwhile, Apple's secret cloud models pack 50 times more power.

Apple's caution stems from AI hallucinations. The powerful models make things up too often for Apple's comfort. The company watched competitors stumble with chatbots that spread false information. It decided to wait.

WWDC will offer modest updates instead of breakthroughs. Apple plans to open its basic 3 billion parameter models to third-party developers. The company will rebrand existing features as "AI-powered" and switch to year-based operating system names.

Behind the scenes, Apple tests multiple models daily. Engineers compare outputs from their 150 billion parameter system against ChatGPT and Google's Gemini through an internal tool called Playground. The results look competitive. The release timeline remains unclear.

Future projects include a conversational Siri overhaul, an AI health service codenamed Mulberry, and a web-searching chatbot called Knowledge. Most won't arrive until 2026.

Apple's go-slow approach contrasts sharply with the industry pace. Google announced major AI advances at its recent I/O conference. OpenAI partners with Apple's former design chief Jony Ive. The AI race accelerates while Apple watches from the sidelines.

Why this matters:

- Apple's powerful AI exists but executive disagreement blocks its release, showing how internal politics can stall innovation even when the technology works

- The company risks falling further behind as competitors move faster, proving that being cautious in AI might be more dangerous than being bold

Read on, my dear:

🧰 AI Toolbox

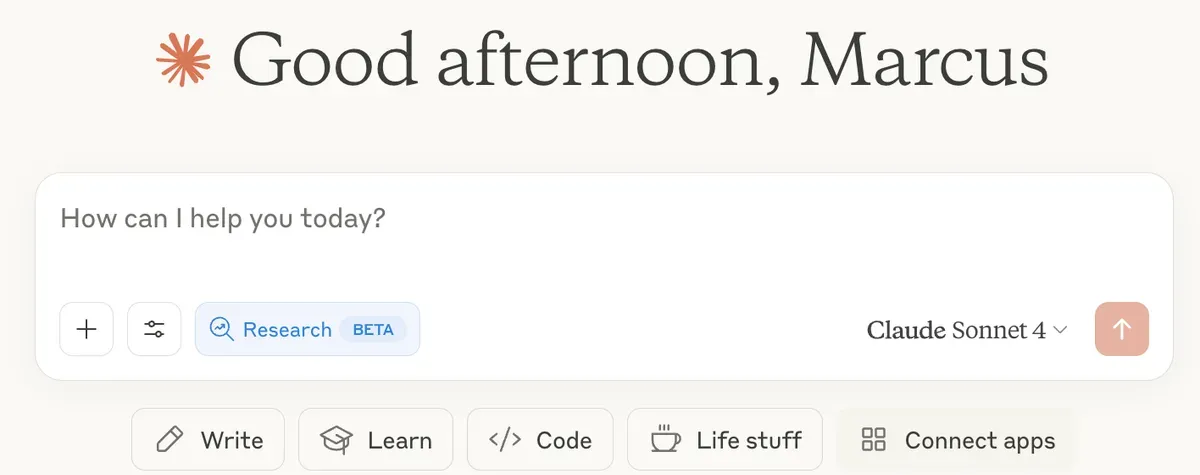

How Does Deep Research Work in Claude?

What is Deep Research?

- Advanced research function in Claude

- Uses multiple sources simultaneously

- Takes 5-15 minutes per request

Which Claude version should you use?

- Claude Opus 4: Best choice for complex research

- Claude Sonnet 4: Also works, slightly faster

- Deep Research available in both versions

- Opus delivers deeper analysis on difficult topics

How do you start Deep Research?

- Click the "Research" button in chat

- Or write "Conduct a deep research on..."

- Ask a concrete, specific question

Which requests work well?

- Good: "Compare profitability of solar vs. wind energy in Germany 2024"

- Bad: "Tell me about energy"

- Combining multiple aspects works well

- Current data gets priority

What happens during research?

- Claude searches 10-50 sources

- Validates information through cross-references

- Creates structured summary

- Shows sources used

How do you get the best results?

- Specify time periods ("2024", "last 6 months")

- Request concrete metrics (numbers, percentages, rankings)

- Compare between options

- Use industry-specific terms

What are the limits?

- No real-time data (stocks, weather)

- No internal company data

- Limited to publicly available sources

Tip: Frame your question like you're briefing an expert.

Join 10,000 + readers who get tomorrow's tech news today. No fluff, just the stories Silicon Valley doesn't want you to see.

Music Industry Switches from Lawsuits to Licensing Deals with AI Startups

The music industry just pulled its biggest plot twist since Auto-Tune. Universal, Warner, and Sony are negotiating licensing deals with AI music startups Suno and Udio. The same companies they sued for billions in damages just months ago.

The talks center on two key demands. Labels want licensing fees for their catalogs. They also want small equity stakes in both AI companies. Think of it as hedge-betting with a lawsuit chaser.

The backdrop makes this particularly rich. In June 2024, the Recording Industry Association of America sued both companies for copyright infringement. They demanded up to $150,000 per stolen work. Court documents claimed AI-generated songs sounded so much like Bruce Springsteen that "even the biggest Boss fan would have trouble distinguishing" between real and fake vocals.

Suno and Udio fired back with fair use defenses. They argued their technology creates entirely new music rather than copying existing songs. Suno admitted training on millions of recordings but called it transformative. Udio labeled their methods "quintessential fair use."

The labels weren't buying it. They accused the AI companies of extracting artistic essence and repurposing it to compete with originals. The rhetoric got heated fast.

Now both sides want to make money together. The negotiations run in parallel, creating a race to see who strikes first. Labels push for control over how their music gets used. AI companies want flexibility to experiment without breaking the bank.

The timing reveals industry pragmatism. Suno raised $125 million from investors including Lightspeed Venture Partners. Udio secured $10 million from Andreessen Horowitz. Fighting companies with that much backing gets expensive.

Artists and songwriters will demand answers about revenue splits. Any equity stakes raise even thornier questions about future payouts. The industry already struggles with streaming economics. Adding AI licensing layers won't simplify things.

This echoes broader battles across media. The New York Times sued OpenAI while other publishers struck licensing deals. YouTube reportedly negotiates similar arrangements with labels. Everyone wants compensation for training data, but nobody agrees on fair terms.

The music industry has fought every technological shift. File sharing, streaming, user-generated content – labels initially resisted them all. But streaming services like Spotify eventually helped revive industry revenues. AI might follow the same pattern.

Why this matters:

- Labels learned that suing deep-pocketed tech companies costs more than partnering with them

- The industry just admitted AI music generation isn't going away, so they'd rather collect checks than legal bills

Read on, my dear:

AI & Tech News

Germany Warns Amazon Its Marketplace Rules Break Competition Law

Germany's antitrust watchdog told Amazon its price controls for marketplace sellers likely violate competition laws. The regulator says Amazon's algorithm-driven pricing limits hurt fair competition since Amazon competes directly with the retailers it polices.

Delivery Hero and Glovo Penalized for Takeaway Cartel

The EU fined Delivery Hero and Glovo €329 million for running an online food delivery cartel between 2018 and 2022. The companies agreed not to poach each other's workers, shared sensitive business information, and divided up territories while Delivery Hero held a minority stake in its Spanish rival.

Jony Ive's New AI Device Could Make Him a Billionaire

Jony Ive sold his AI startup to OpenAI for $6.4 billion and is now designing a mystery device with Sam Altman. The former Apple designer says he's motivated by how badly our current relationship with technology has gone wrong and believes "humanity deserves better."

Korean Giant Plans AI Search to Replace Google Services

Samsung is near a deal to invest in Perplexity AI and make its search the default on Galaxy phones by 2026. The partnership includes preloading Perplexity's app, integrating search into Samsung's browser, and potentially replacing Google services across Samsung devices as the company seeks to cut ties with Alphabet.

Meta Plans to Let AI Create Entire Ad Campaigns by Next Year

Meta aims to automate ad creation completely by late 2026, letting brands upload product images and budget goals while AI handles everything else. The system would create personalized ads in real time, showing different versions based on where users live, but some big brands worry AI can't match human creativity.

SoftBank and Intel Team Up to Cut AI Power Consumption in Half

SoftBank and Intel are developing new AI memory chips that use half the power of current technology, aiming to cut data center costs and break South Korea's stranglehold on the market. The $70 million project targets a working prototype within two years and could help Japan regain ground in memory chips after losing its 70% market share in the 1980s.

Blackstone's $100 Billion Data Center Gamble Faces Reality Check

Blackstone spent $10 billion buying data center company QTS in 2021, then poured billions more into expansion as AI demand exploded. Now analysts warn of oversupply as some tech companies pull back from leases, and the Chinese startup DeepSeek claims it can build AI systems using far less computing power.

Tiny Brain Chip Passes Its First Human Test

Paradromics just stuck its brain chip into a human for 10 minutes and pulled it out safely. The startup's Connexus device recorded brain signals from a patient who was already having epilepsy surgery, marking the company's first human test after years of sheep trials.

France Charges 25 in Crypto Kidnapping Spree

French prosecutors charged 25 people, including six minors, for kidnapping attempts targeting cryptocurrency executives and their families. The suspects, mostly teenagers from Paris, attacked the pregnant daughter of crypto CEO Pierre Noizat in broad daylight and planned similar operations across France.

Russia's Most Notorious Kill Squad Just Got Exposed as Hackers

Russia's deadliest black ops unit spent years hacking targets across Europe and Ukraine while simultaneously poisoning enemies and blowing up weapons depots. The same GRU team behind the Skripal poisoning recruited teenage hackers who launched cyberattacks before the 2022 invasion, all while their commander was having affairs and using state funds to support his mistress.

🚀 AI Profiles: The Companies Defining Tomorrow

Stability AI: Open-Source AI Revolution Meets Reality Check

Stability AI burst onto the scene in 2022 with Stable Diffusion, democratizing image generation and sparking a global creative AI movement. The London startup has since weathered financial turmoil, leadership changes, and legal battles while expanding into text, audio, and video generation.

The Founders • Founded late 2020 by Emad Mostaque (ex-hedge fund manager) and AI researcher Cyrus Hodes in London • Mostaque resigned March 2024 amid investor pressure; Prem Akkaraju (former Weta Digital CEO) now leads • ~200 employees globally with offices in Japan and distributed teams • Mission: Open-source AI to counter Big Tech dominance

The Product • Core strength: Stable Diffusion image generator (open-source, customizable) • Expanded suite: StableLM language model, Stable Audio music generator, early video/3D tools • Delivers via API, cloud partnerships (AWS, Azure), and downloadable models • Powers everything from e-commerce ads to educational content across 17+ million users

The Competition • Battles closed-source giants: OpenAI's DALL-E, Midjourney, Adobe Firefly • Faces open-source rivals: Meta's LLaMA, Mistral AI, Hugging Face ecosystem

• Differentiator: Full model ownership and customization vs. API dependency • Winning enterprise clients seeking alternatives to Big Tech AI

Financing • Raised ~$200M total; peaked at $1B valuation (2022), now significantly lower • Key backers: Coatue, Lightspeed, Intel Capital, Sean Parker, Eric Schmidt • Nearly collapsed 2023 ($100M annual cloud costs, missed AWS payments) • Recapitalized 2024 with debt forgiveness and fresh funding

The Future ⭐⭐⭐ New leadership brings commercial discipline to research-heavy culture. WPP partnership and Hollywood connections (James Cameron on board) signal enterprise traction. Global footprint positions company well for regions wanting non-US AI alternatives. Still needs sustainable profitability and must outrun well-funded giants. 🎯