💡 TL;DR - The 30-Second Version

🤖 Google DeepMind released Aeneas, an AI that analyzes Roman inscriptions in seconds—work that normally takes historians days or weeks.

📊 The system learned from 176,000 Latin inscriptions and achieved 72% accuracy in identifying where ancient texts came from across 62 Roman provinces.

🧪 Testing with 23 expert historians showed a 152% relative improvement in accuracy at placing inscriptions when they used the AI's predictions, and their date estimates landed 32% closer to the correct period.

⚡ Aeneas can fill gaps in damaged texts even when researchers don't know how many letters are missing, something previous systems couldn't handle.

🌐 The tool is now free online at predictingthepast.com for historians, students, and museums worldwide.

🚀 Beyond Latin, the system can adapt to other ancient languages and texts, potentially transforming how historians study fragmentary evidence across civilizations.

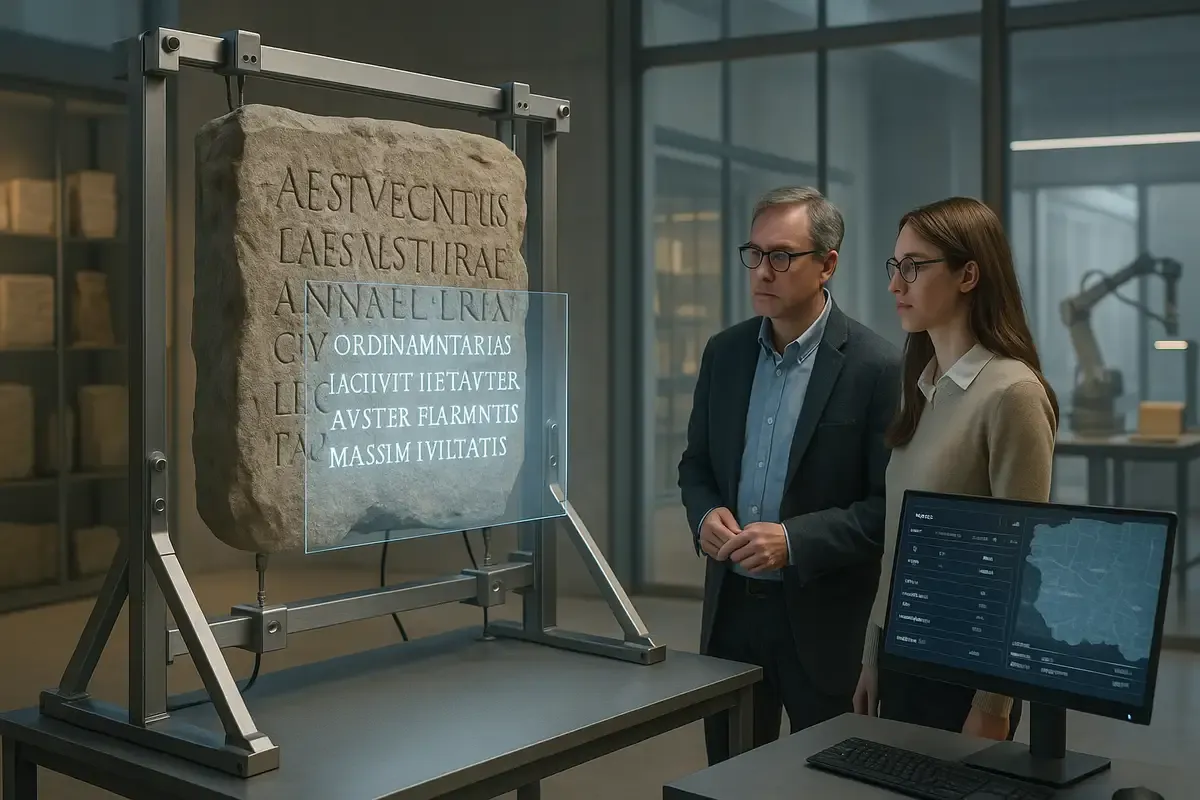

Google DeepMind released an AI system that analyzes ancient Roman inscriptions in seconds—work that normally takes historians days or weeks. The system, called Aeneas, examines Latin text carved into stone monuments, tomb markers, and everyday objects from across the Roman Empire.

Historians spend enormous time hunting through databases and books to find similar inscriptions that help them understand damaged or incomplete text. Aeneas does this automatically, scanning 176,000 Latin inscriptions to find relevant parallels and fill in missing words.

The research team, led by Google DeepMind scientists and University of Nottingham historians, published their findings in Nature this week. They tested Aeneas with 23 expert historians who regularly work with inscriptions. Historians became more accurate and confident when using the AI tool.

Finding needles in historical haystacks

Romans carved words into everything. Imperial proclamations, love poems, business deals, tombstones: you name it. Archaeologists find about 1,500 new inscriptions every year. Most are broken, weathered chunks of stone with words missing.

"Writing was everywhere in the Roman world," says Thea Sommerschield, who studies ancient texts at the University of Nottingham. She helped build Aeneas. The inscriptions show how regular people lived, not just what emperors wanted everyone to think.

Historians search for "parallels"—other inscriptions with similar wording, formulas, or origins. These connections help them figure out what missing text might say, where an inscription came from, and when it was made. Tracking down these parallels requires deep expertise and access to vast libraries.
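The idea of ranking "parallels" by similarity can be illustrated with a toy sketch. It is not Aeneas's method: it assumes character trigram counts and cosine similarity as a crude stand-in for whatever the model has learned, and the three-entry `corpus` and the `find_parallels` helper are hypothetical, built from well-known Latin formulas rather than taken from the dataset.

```python
from collections import Counter
from math import sqrt


def trigram_counts(text: str) -> Counter:
    """Count overlapping character trigrams in a lowercased, whitespace-normalized string."""
    normalized = " ".join(text.lower().split())
    return Counter(normalized[i:i + 3] for i in range(len(normalized) - 2))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = sqrt(sum(c * c for c in a.values()))
    norm_b = sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def find_parallels(query: str, corpus: dict[str, str], top_k: int = 2) -> list[tuple[str, float]]:
    """Return the top_k corpus entries most similar to the query, best first."""
    query_vec = trigram_counts(query)
    scored = [
        (name, cosine_similarity(query_vec, trigram_counts(text)))
        for name, text in corpus.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


# Hypothetical mini-corpus of common formulas (illustrative only).
corpus = {
    "funerary": "dis manibus sacrum hic situs est sit tibi terra levis",
    "votive": "iovi optimo maximo votum solvit libens merito",
    "imperial": "imperator caesar divi filius augustus pontifex maximus",
}

query = "dis manibus hic situs est"
results = find_parallels(query, corpus)
for name, score in results:
    print(f"{name}: {score:.2f}")
```

A fragment with funerary wording ranks the funerary entry first. A real system would compare against far more texts and use richer features than raw trigrams.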

Training an AI on Roman stones

Aeneas changes this. The system learned patterns from a massive database called the Latin Epigraphic Dataset, which merges three major inscription collections that historians had built over the decades. Each collection used its own format, so combining them required tedious cleanup work before the team could standardize everything into one searchable collection.

Aeneas analyzes writing style, not just words. It notices patterns in how Romans structured sentences and used certain phrases.

Aeneas can restore missing text, date inscriptions, and determine where they came from. The key advance is working with gaps of unknown length. Previous systems needed to know exactly how many letters were missing.

Putting historians to the test

To measure Aeneas's impact, the research team ran the largest "historian and AI" study ever conducted. Twenty-three experts worked on inscription puzzles both with and without AI assistance.

When historians used Aeneas's suggested parallels, their accuracy jumped 35% for determining where inscriptions came from. With the AI's predictions included, accuracy rose 152% in relative terms, from 27% when working alone to 68%. For dating inscriptions, historians got 32% closer to the correct time periods when using Aeneas.

"The parallels retrieved by Aeneas completely changed my perception of the inscription," one historian wrote in feedback. "It would have taken me a couple of days rather than 15 minutes to find these texts."

Solving ancient mysteries

The researchers tried Aeneas on the Res Gestae Divi Augusti—basically Augustus bragging about everything he accomplished as emperor. Historians argue about when he actually wrote it.

Aeneas looked at the language and came up with two date ranges: 10-1 BCE and 10-20 CE. Those match the two positions scholars have been arguing about, which suggests the AI captures the uncertainty rather than forcing a single answer.

The system also revealed something new. It found that the inscription shares subtle language patterns with Roman legal documents and imperial messaging—connections human historians hadn't previously noted.

In another test, Aeneas analyzed a damaged altar from ancient Mainz, Germany. The AI correctly identified its most relevant parallel: another altar found nearby that used nearly identical rare formulas. This connection helped historians understand how religious practices spread through the Roman provinces.

Processing text and images

Aeneas processes both text and images. It can look at photos of inscriptions and factor in visual elements like stone shape and layout. This multimodal approach helps determine where inscriptions came from with 72% accuracy across 62 Roman provinces.

The system dates texts by noticing how Romans wrote differently over time. Spellings changed, job titles evolved, and they mentioned different buildings or events depending on the era.

Mary Beard from Cambridge thinks Aeneas could change how historians work. She called it "really exciting" and expects it will reshape the field.

Free tools for everyone

The research team made Aeneas freely available online at predictingthepast.com. Historians, students, teachers, and museum workers can use it to analyze Latin inscriptions. The team also open-sourced the code and dataset to support further research.

They're already working with schools in Belgium to integrate Aeneas into history education. The tool aligns with European digital literacy frameworks and helps students engage directly with ancient sources.

The system can be adapted to other ancient languages and texts—from Greek papyri to coins and manuscripts. This could transform how historians work with fragmentary evidence across many civilizations.

Why this matters:

• Speed wins: What took historians days of digging through books now happens in seconds, so they can spend time figuring out what the texts actually mean.

• Anyone can play: Small colleges and students get the same research tools that only big universities had before.

❓ Frequently Asked Questions

Q: How accurate is Aeneas compared to historians working alone?

A: Historians achieved 27% accuracy identifying inscription origins without AI help. With Aeneas predictions, accuracy jumped to 68%. For text restoration, error rates dropped from 39% to 21% when historians used Aeneas suggestions alongside their expertise.
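The "152%" figure quoted in this article is a relative improvement, not a gain in percentage points. A quick check using only the numbers in the answer above (the `relative_change` helper is just illustrative arithmetic):

```python
def relative_change(before: float, after: float) -> float:
    """Return the change from `before` to `after` as a percentage of `before`."""
    return (after - before) / before * 100


# Origin attribution accuracy quoted above: 27% alone, 68% with Aeneas predictions.
attribution_gain = relative_change(27, 68)

# Text restoration error rate quoted above: 39% alone, 21% with Aeneas suggestions.
restoration_error_change = relative_change(39, 21)

print(f"Attribution accuracy: {attribution_gain:+.0f}% relative, {68 - 27:+d} points absolute")
print(f"Restoration error rate: {restoration_error_change:+.0f}% relative, {21 - 39:+d} points absolute")
```

So accuracy rose by 41 percentage points, which is about 152% of the 27% baseline, and the restoration error rate fell by 18 points, or roughly 46% in relative terms.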

Q: Can regular people use this tool or just professional researchers?

A: Anyone can use Aeneas free at predictingthepast.com. The interface works for historians, students, teachers, and museum workers. You don't need technical training—just upload an inscription image or text to get predictions and historical parallels.

Q: How long did it take to train the AI on all those inscriptions?

A: Training took one week using 64 specialized computer chips on Google Cloud. But data preparation was the real work—researchers spent months cleaning and standardizing 176,000 inscription records from three different databases with incompatible formats.

Q: Why focus on Latin instead of other ancient languages first?

A: Latin inscriptions are the most common worldwide, with 1,500 new discoveries yearly. This large dataset helped train the AI effectively. The system also adapts to other languages: researchers have already upgraded their Greek inscription tool using Aeneas technology.

Q: How badly damaged can an inscription be for Aeneas to help?

A: Aeneas handles gaps even when nobody knows how many letters are missing—something previous systems couldn't do. It restores gaps up to 20 characters with 58% accuracy and can analyze fragments with just a few readable words.

Q: What prevents Aeneas from making costly historical mistakes?

A: The system provides confidence scores and shows which text features influenced each prediction. Historians always verify suggestions using their expertise. In testing, experts caught errors and made better final decisions by combining AI predictions with their own knowledge.

Q: Will AI tools like this replace historians?

A: No. Aeneas accelerates research, but historians provide critical interpretation and context. The 23 experts tested emphasized that AI helps them find connections faster, freeing time for deeper analysis that only humans can perform.

Q: How much did Google spend developing this system?

A: Google DeepMind hasn't disclosed development costs, but the project used expensive cloud computing and involved researchers from five universities. The company made all code, data, and the tool free to maximize research impact rather than profit.