💡 TL;DR - The 30-Second Version

👉 Researchers from five institutions introduce "Context Engineering" as a formal discipline for optimizing how AI systems handle information.

🧠 AI systems excel at understanding complex information but struggle to generate equally sophisticated outputs, creating a "comprehension-generation asymmetry."

📊 The field has evolved rapidly since 2020, growing from basic document retrieval to sophisticated multi-agent networks.

🏗️ Context Engineering breaks into three components: retrieval/generation, processing, and management of information over time.

🔬 Traditional metrics can't evaluate these dynamic systems, creating new challenges for measuring AI performance.

🚀 Fixing the comprehension-generation gap could unlock AI systems that write with the same sophistication they bring to reading.

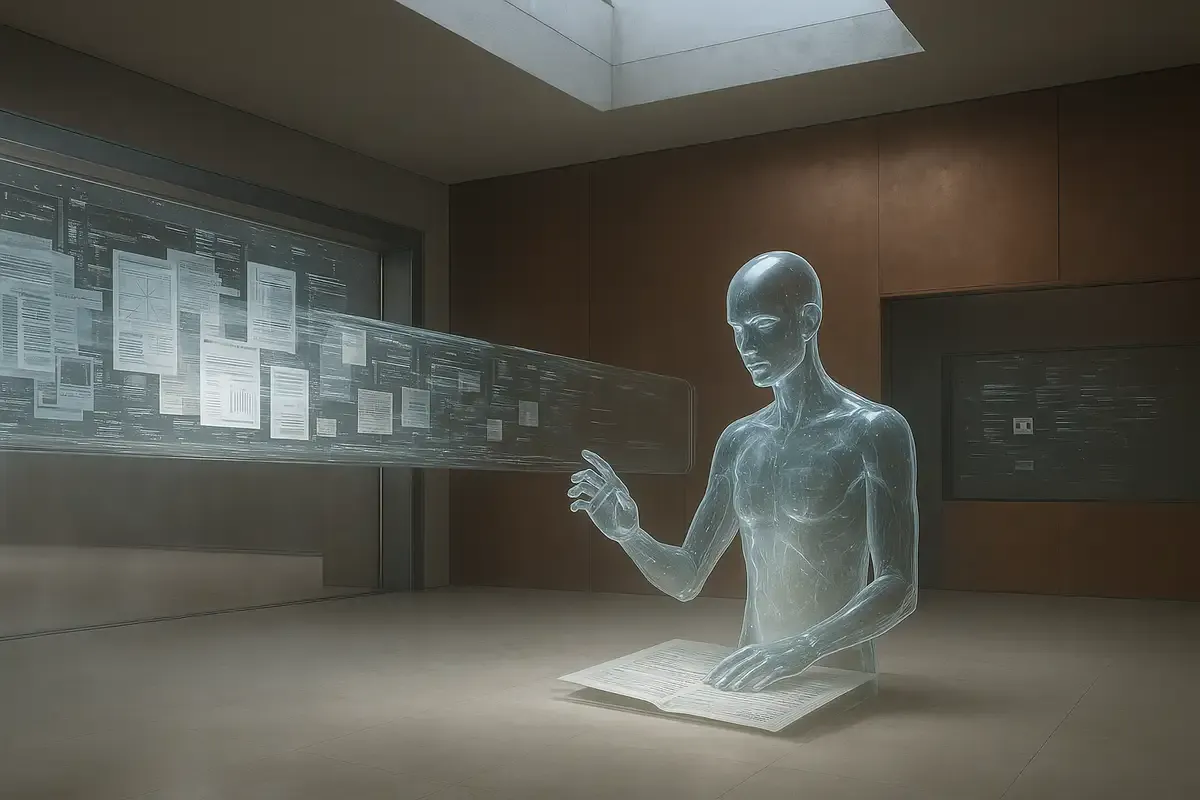

You can feed an AI system thousands of pages and it'll understand complex relationships across all that information. Ask it to write something equally complex back? It chokes.

This problem has a name now: the comprehension-generation asymmetry. Researchers from the Chinese Academy of Sciences, UC Merced, University of Queensland, Peking University, and Tsinghua University just published a survey that introduces "Context Engineering" as the formal discipline for how we feed information to large language models.

The research, led by Lingrui Mei and colleagues, creates the first unified framework for understanding how AI systems handle information. It covers everything from simple prompts to complex multi-agent networks. Think of it as the instruction manual for AI's information diet.

Beyond Prompt Engineering

Context Engineering goes deeper than crafting clever prompts. The researchers define it as an optimization problem: how do you maximize AI performance by organizing information strategically while dealing with limits like context length and computing power?
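
One way to write that optimization down, as a rough sketch rather than the survey's exact notation. Every symbol here is an assumption chosen for illustration: F is the set of functions that assemble the context, τ is a task drawn from a task distribution T, C_F(τ) is the context built for that task, P_θ is the model, Y*_τ is the ideal output, and L_max is the context-length limit.

```latex
\[
F^{*} = \arg\max_{F} \;
\mathbb{E}_{\tau \sim \mathcal{T}}
\Big[ \mathrm{Reward}\big( P_{\theta}(Y \mid C_{F}(\tau)),\; Y^{*}_{\tau} \big) \Big]
\quad \text{subject to} \quad
\lvert C_{F}(\tau) \rvert \le L_{\max}
\]
```

Read it as: pick the way of building context that gives the best expected result across tasks, without ever exceeding the window the model can actually read.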

Most approaches treat context as static text. This framework treats it as structured components you can assemble dynamically. Rather than dumping information into an AI system and hoping for the best, Context Engineering gives you systematic ways to decide what information to include, how to organize it, and when to get more.
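
To make "structured components you can assemble dynamically" concrete, here is a minimal Python sketch. The `Component` class, the priority scheme, and the word-count tokenizer are all hypothetical stand-ins invented for this example, not anything the survey prescribes.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str      # e.g. "instructions", "memory", "retrieved_doc"
    text: str
    priority: int  # higher means more important to keep


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: whitespace-separated words.
    return len(text.split())


def assemble_context(components: list[Component], budget: int) -> str:
    """Greedily keep the highest-priority components that fit the budget."""
    ranked = sorted(
        range(len(components)),
        key=lambda i: components[i].priority,
        reverse=True,
    )
    kept, used = [], 0
    for i in ranked:
        cost = count_tokens(components[i].text)
        if used + cost <= budget:
            kept.append(i)
            used += cost
    # Emit the survivors in their original order so the prompt reads naturally.
    return "\n\n".join(
        f"[{components[i].name}]\n{components[i].text}" for i in sorted(kept)
    )


components = [
    Component("instructions", "Answer briefly.", priority=3),
    Component("retrieved_doc", "word " * 50, priority=1),
    Component("memory", "User prefers metric units.", priority=2),
]
context = assemble_context(components, budget=10)
print(context)
```

With a budget of 10 "tokens," the instructions and the memory note make it in and the 50-word document is dropped. A real system would use the model's tokenizer and a smarter selection rule, but the shape of the decision is the same.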

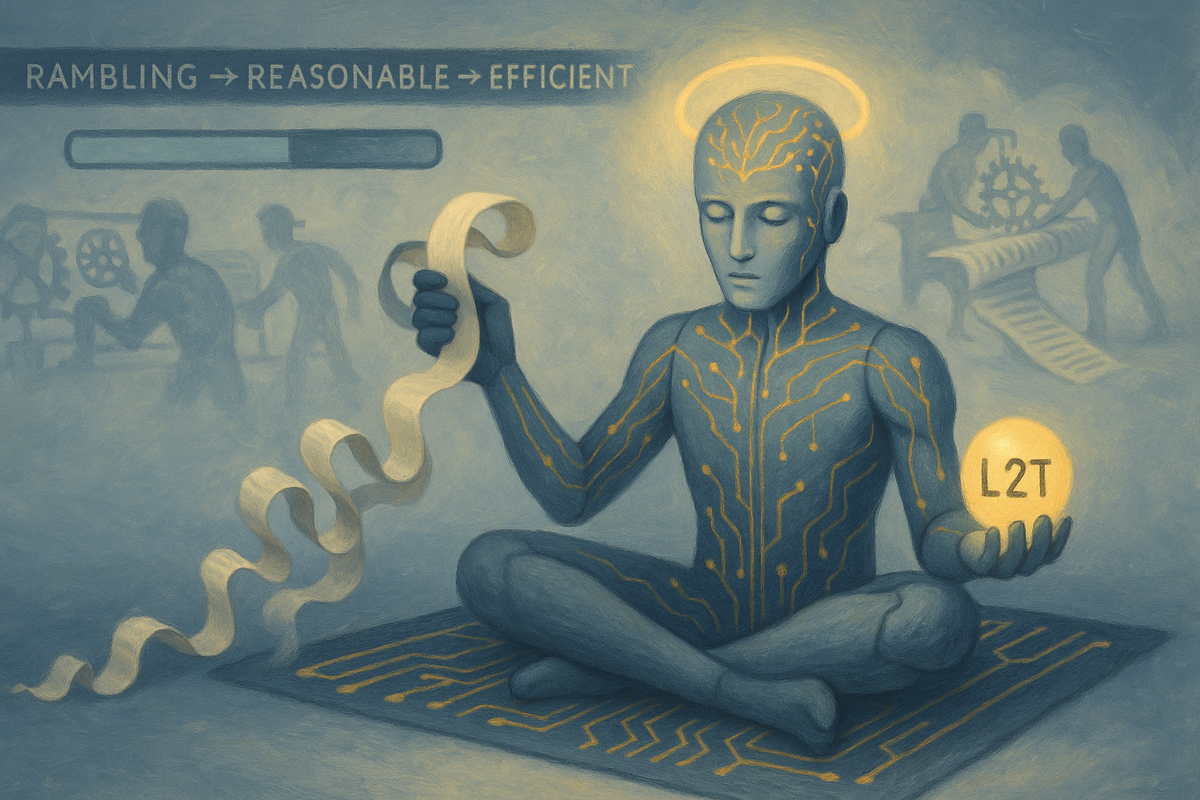

The field has moved fast since 2020. Basic retrieval systems that grabbed relevant documents have turned into sophisticated architectures with memory hierarchies, tool integration, and networks of collaborating agents. Simple question-answering has become something closer to digital thinking.

The Three Building Blocks

The survey breaks Context Engineering into three core components that work together.

Context Retrieval and Generation handles where information comes from. This includes advanced reasoning techniques like Chain-of-Thought prompting, which walks AI through step-by-step thinking, and Tree-of-Thoughts, which explores multiple reasoning paths at once. It also covers external knowledge retrieval—the systems that decide what information to pull from databases, documents, or the web.
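
The Tree-of-Thoughts idea is easier to see on a toy problem. The sketch below is a simplified breadth-first variant: at each step it proposes several next "thoughts," scores them, and keeps only the most promising few. In a real system an LLM would do both the proposing and the scoring; the `propose` and `evaluate` functions here are hypothetical stand-ins that work on simple arithmetic.

```python
def propose(state):
    # Stand-in for an LLM proposing next thoughts.
    # A state is (current_value, list_of_steps_taken).
    value, path = state
    return [(value + 3, path + ["+3"]), (value * 2, path + ["*2"])]


def evaluate(state, target):
    # Stand-in for an LLM judging a partial solution: closer to target is better.
    return -abs(target - state[0])


def tree_of_thoughts(start, target, depth=3, beam_width=2):
    frontier = [(start, [])]
    for _ in range(depth):
        # Expand every surviving path, then keep only the best few.
        candidates = [nxt for state in frontier for nxt in propose(state)]
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return frontier[0]


value, path = tree_of_thoughts(start=1, target=14)
print(value, path)  # 14 ['+3', '+3', '*2']
```

Chain-of-Thought is the special case where you follow a single path and never branch. The branching is what lets the search recover from a step that looked good but led nowhere.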

Context Processing transforms raw information into something useful. Long sequence processing tackles AI's context-length limits through architectures like State Space Models, whose compute cost grows linearly with sequence length instead of quadratically, as it does with standard attention. Self-refinement systems let AI critique and improve its own work through feedback loops.
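
A bit of arithmetic shows why the linear-versus-quadratic distinction matters. This sketch uses deliberately simplified cost models (one unit of work per token pair for attention, one per token for a linear-time model) and ignores constant factors, so treat the numbers as relative, not as benchmarks.

```python
def attention_cost(n_tokens: int) -> int:
    # Standard attention compares every token with every other token.
    return n_tokens * n_tokens


def linear_cost(n_tokens: int) -> int:
    # A linear-time model does a fixed amount of work per token.
    return n_tokens


def growth_factor(cost_fn, n_tokens: int, multiplier: int) -> float:
    """How much more work it takes when the context gets `multiplier` times longer."""
    return cost_fn(n_tokens * multiplier) / cost_fn(n_tokens)


for n in (1_000, 10_000, 100_000):
    print(f"{n:>7} tokens: quadratic={attention_cost(n):>14,}  linear={linear_cost(n):>7,}")

print(growth_factor(attention_cost, 1_000, 10))  # 100.0
print(growth_factor(linear_cost, 1_000, 10))     # 10.0
```

Make the context ten times longer and the quadratic model does a hundred times the work, while the linear one does ten times.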

Context Management organizes and stores information over time. These systems create memory hierarchies that work like human memory, separating working memory for immediate tasks from long-term storage for persistent information. Context compression techniques pack more information into limited space without losing what matters.
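
Here is a minimal sketch of that two-tier idea in Python. The `HierarchicalMemory` class and its keyword-based recall are hypothetical simplifications for illustration; production systems typically use embeddings and more careful eviction policies.

```python
from collections import deque


class HierarchicalMemory:
    def __init__(self, working_size: int = 3):
        self.working = deque()   # small, always included in the context
        self.working_size = working_size
        self.long_term = []      # unbounded, searched only on demand

    def remember(self, item: str) -> None:
        self.working.append(item)
        # When working memory overflows, move the oldest item to long-term storage.
        while len(self.working) > self.working_size:
            self.long_term.append(self.working.popleft())

    def recall(self, keyword: str) -> list[str]:
        # Naive case-insensitive substring search over long-term storage.
        kw = keyword.lower()
        return [m for m in self.long_term if kw in m.lower()]

    def context(self, keyword: str = "") -> list[str]:
        # Recalled long-term items first, then everything in working memory.
        recalled = self.recall(keyword) if keyword else []
        return recalled + list(self.working)


mem = HierarchicalMemory(working_size=2)
mem.remember("User's name is Ada")
mem.remember("User likes hiking")
mem.remember("User asked about tents")
print(mem.context("name"))
```

The oldest fact falls out of working memory but comes back when a query mentions it, which is the basic trade the paragraph above describes: a small, fast tier for the task at hand and a larger tier you search only when needed.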

From Theory to Practice

The framework works best when applied to real systems. Retrieval-Augmented Generation (RAG) has grown from simple document lookup to modular architectures whose components you can optimize independently and recombine. Agentic RAG systems make autonomous decisions about what information to retrieve based on how complex the query is.
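
A toy version of that routing decision might look like the sketch below. The complexity heuristic, the thresholds, and the word-overlap retriever are all made up for this example; a real agentic RAG system would let the model itself decide and would use a proper search index.

```python
def complexity(query: str) -> int:
    # Hypothetical heuristic: longer, multi-part questions score higher.
    words = query.lower().split()
    multi_part = sum(w in {"and", "versus", "compare", "why"} for w in words)
    return len(words) + 5 * multi_part


def retrieve(query: str, docs: list[str], k: int) -> list[str]:
    # Rank documents by how many query words they share (ties keep input order).
    q = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:k]


def agentic_retrieve(query: str, docs: list[str]) -> list[str]:
    score = complexity(query)
    if score < 4:
        return []                 # trivial query: skip retrieval entirely
    k = 1 if score < 10 else 3    # harder queries pull in more documents
    return retrieve(query, docs, k)


docs = [
    "retrieval augmented generation combines search with generation",
    "state space models scale linearly with sequence length",
    "transformers use attention over the full context",
    "bananas are yellow",
]
print(agentic_retrieve("hi there", docs))
print(agentic_retrieve("what is retrieval augmented generation", docs))
print(agentic_retrieve("compare state space models and transformers for long context", docs))
```

The greeting retrieves nothing, the simple question pulls one document, and the comparison question pulls three.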

Memory systems turn stateless AI into persistent agents that learn across interactions. Systems like MemoryBank and MemGPT show how explicit memory can dramatically boost capabilities, letting AI maintain consistent personalities and build relationships with users over time.

Tool integration pushes AI beyond text generation into active world interaction. Systems like Toolformer learn to generate appropriate API calls, while ReAct combines reasoning and action in cycles. The most advanced implementations can navigate web interfaces and control software applications.
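
The reason-then-act cycle can be sketched in a few lines. This is a toy loop in the spirit of ReAct, not the published implementation: `scripted_model` is a hard-coded stand-in for an LLM, and the one-tool registry is hypothetical.

```python
def calculator(expression: str) -> str:
    # Toy tool: adds two integers written as "a + b".
    a, b = expression.split("+")
    return str(int(a) + int(b))


TOOLS = {"calculator": calculator}


def scripted_model(question, history):
    # Stand-in for an LLM. Returns (thought, action, argument).
    if not history:
        return ("I need to add the numbers.", "calculator", "17 + 25")
    last_observation = history[-1][-1]
    return ("I have the result.", "finish", last_observation)


def react(question, model, max_steps=5):
    history = []  # each entry: (thought, action, argument, observation)
    for _ in range(max_steps):
        thought, action, arg = model(question, history)
        if action == "finish":
            return arg, history
        observation = TOOLS[action](arg)  # act, then feed the result back in
        history.append((thought, action, arg, observation))
    return None, history  # gave up after max_steps


answer, trace = react("What is 17 + 25?", scripted_model)
print(answer)  # 42
```

The key design point is the feedback: each tool result goes back into the history the model sees on its next turn, so later reasoning can build on what earlier actions found.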

Multi-agent systems represent the current frontier. These networks of specialized AI agents use communication protocols to coordinate activities, break down complex tasks, and combine results across distributed teams.

The Core Problem

Here's the weird thing about current AI systems: they can understand incredibly complex information but can't write back with the same level of sophistication. Feed them tons of data and they'll grasp all the connections and nuances. Ask them to produce something equally complex? They fall short.

This gap hurts applications that need detailed analysis, comprehensive reports, or extended reasoning chains. An AI might perfectly understand a 50-page technical document but fail to produce a 10-page summary that captures its full complexity.

The researchers think this asymmetry comes from fundamental architectural limits. While context engineering has optimized information input and processing, the generation mechanisms haven't kept up. Picture a brilliant researcher who can read everything but gets writer's block when it's time to write.

Measuring What Matters

Traditional metrics don't work for evaluating dynamic, context-aware systems. How do you measure whether a memory system keeps a consistent personality across months of interactions? How do you assess whether a multi-agent team collaborates well?

The survey calls this a critical challenge. Component-level evaluation can measure retrieval accuracy or memory fidelity, but system-level evaluation must capture both long-term consistency and behaviors that emerge only over time. Testing individual musicians is easier than evaluating whether they form a good orchestra.
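
The component-level side is the easy part, and a small example shows why. Recall@k is a standard retrieval metric: of the documents that should have been found, what fraction showed up in the top k results? The document IDs below are invented for the example.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of relevant documents that appear in the top-k retrieved results."""
    if not relevant:
        return 0.0
    top_k = set(retrieved[:k])
    return len(top_k & relevant) / len(relevant)


retrieved = ["d3", "d1", "d7", "d2"]  # ranked output of some retriever
relevant = {"d1", "d2"}               # ground-truth relevant documents

print(recall_at_k(retrieved, relevant, k=2))  # 0.5
print(recall_at_k(retrieved, relevant, k=4))  # 1.0
```

There is no equally tidy formula for "did this agent stay consistent across three months of conversations," which is the gap the survey points to.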

Why this matters:

• Context Engineering provides the first unified framework for understanding how AI systems handle information, connecting previously separate research areas and speeding up development of smarter, more capable systems.

• The comprehension-generation gap gives researchers a clear target—fix this and you could have AI systems that not only understand complex information but can write equally sophisticated responses, changing how we work with artificial intelligence.

❓ Frequently Asked Questions

Q: How is Context Engineering different from just writing better prompts?

A: Context Engineering treats information as structured components you can assemble dynamically, not static text. It includes memory systems, tool integration, and multi-agent coordination—going far beyond crafting clever prompts to systematic information optimization.

Q: What are Chain-of-Thought and Tree-of-Thoughts techniques?

A: Chain-of-Thought walks AI through step-by-step reasoning, like showing your work in math. Tree-of-Thoughts explores multiple reasoning paths simultaneously, letting AI consider different approaches before choosing the best one. Both improve reasoning quality.

Q: How do researchers know AI has this comprehension-generation problem?

A: The survey draws this conclusion from its review of over 1,400 research papers. As an illustration, an AI system might accurately analyze a 50-page technical document yet fail to produce a 10-page summary of comparable complexity.

Q: Which AI systems actually use Context Engineering today?

A: Systems like MemoryBank, MemGPT, Toolformer, and ReAct already implement these techniques. RAG (Retrieval-Augmented Generation) systems are the most common application, evolving from simple document lookup to sophisticated modular architectures.

Q: Why can't AI generate complex outputs if it understands complex inputs?

A: The problem stems from architectural limits in generation mechanisms. While context engineering has optimized information input and processing, the systems that produce text output haven't kept pace with improvements in comprehension capabilities.

Q: How much does implementing Context Engineering cost?

A: Costs vary by complexity. Basic RAG systems add minimal overhead, while sophisticated multi-agent systems require significant computational resources. The main constraint is context length: with traditional attention mechanisms, compute cost grows quadratically as the context gets longer.

Q: When will AI be able to write as well as it reads?

A: The researchers don't provide a timeline but call fixing the comprehension-generation asymmetry "a defining priority for future research." The rapid evolution since 2020 suggests progress could accelerate once this gap gets focused attention.

Q: What applications need this most urgently?

A: Applications requiring detailed analysis, comprehensive reports, or extended reasoning chains suffer most. Think financial analysis, legal document review, scientific literature reviews, and strategic business planning—anywhere you need sophisticated written output.