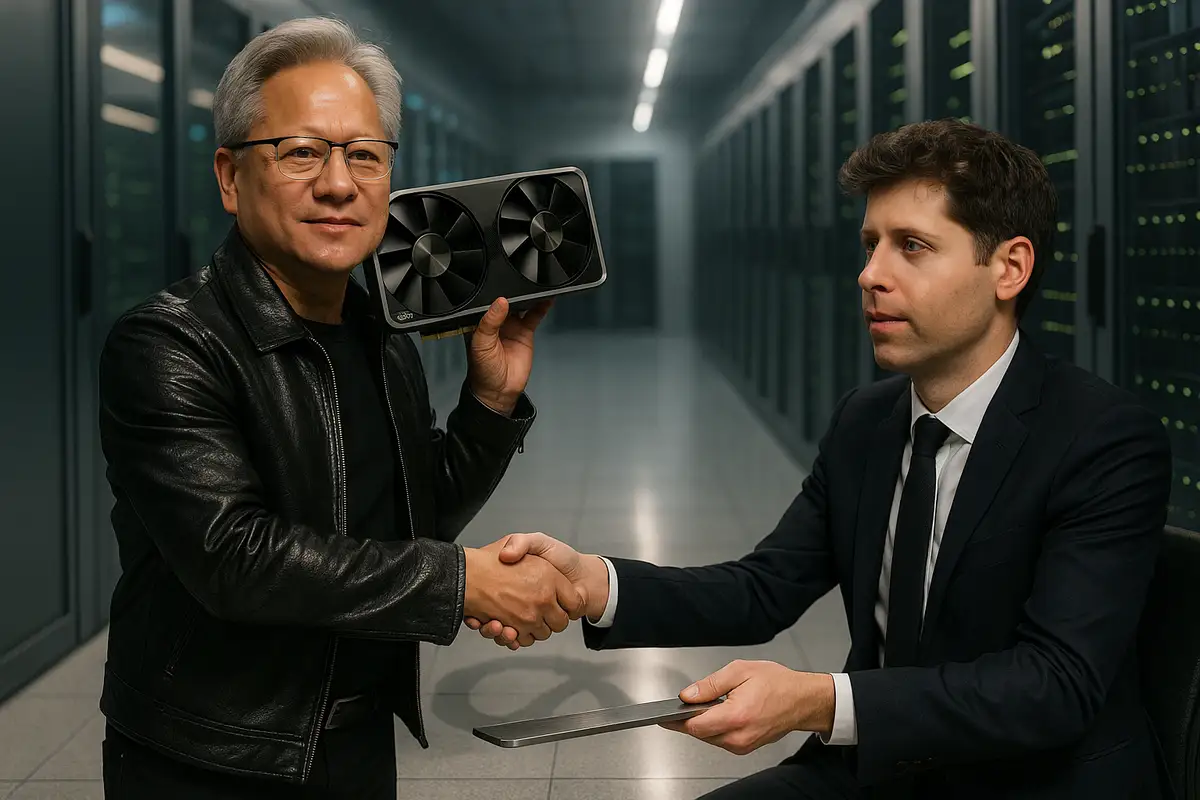

Amazon wants to invest $10 billion in OpenAI. But the deal requires OpenAI to spend that money buying Amazon's chips and cloud services. When your investor is also your vendor, where does the capital actually go?

Amazon is in preliminary discussions to invest roughly $10 billion in OpenAI, according to reports from The Information. The deal would push OpenAI's valuation above $500 billion and require the ChatGPT maker to adopt Amazon's Trainium AI chips while renting additional data center capacity from Amazon Web Services.

The transaction structure deserves close examination. OpenAI would receive $10 billion from Amazon, then route capital back to Amazon through Trainium chip purchases and AWS service fees. This arrangement comes on top of the $38 billion OpenAI committed to spending with AWS in November 2024, a seven-year server rental agreement announced days after OpenAI restructured its relationship with Microsoft. In effect, Amazon's equity investment funds OpenAI's infrastructure spending, which AWS then recognizes as revenue.

OpenAI has locked in more than $1.4 trillion in infrastructure commitments over recent months. Nvidia, Oracle, AMD, Broadcom, and now potentially Amazon have all secured purchase agreements. The SoftBank and Oracle data center buildout alone runs $400 billion. Put that next to OpenAI's 2024 revenue, somewhere around $3 billion, and the ratio starts to look strange. The company is still operating at a loss.
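To put a number on that ratio, here is a minimal Python sketch using only the figures quoted above. The variable and function names are illustrative, and the inputs are the article's rounded estimates rather than audited numbers.

```python
# Figures as quoted in the article, in US dollars (rounded estimates).
COMMITMENTS_USD = 1.4e12      # total infrastructure commitments
REVENUE_2024_USD = 3e9        # approximate 2024 revenue
SOFTBANK_ORACLE_USD = 400e9   # SoftBank and Oracle data center buildout


def commitment_to_revenue_ratio(commitments: float, revenue: float) -> float:
    """Return commitments as a multiple of one year's revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return commitments / revenue


ratio = commitment_to_revenue_ratio(COMMITMENTS_USD, REVENUE_2024_USD)
buildout_share = SOFTBANK_ORACLE_USD / COMMITMENTS_USD

print(f"Commitments are about {ratio:.0f}x 2024 revenue")
print(f"SoftBank/Oracle buildout is about {buildout_share:.0%} of the total")
```

On these inputs, commitments come to roughly 467 times one year of revenue, and the SoftBank and Oracle buildout accounts for about 29% of the total.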

The Breakdown

• Amazon's $10 billion investment would require OpenAI to buy Trainium chips and AWS services, routing capital back to the investor

• Microsoft retains exclusive rights to sell OpenAI's advanced models until the early 2030s, limiting what Amazon actually gets

• Amazon already owns $8 billion of Anthropic, positioning to collect infrastructure revenue regardless of which AI company wins

• OpenAI has committed $1.4 trillion in infrastructure spending against roughly $3 billion in 2024 revenue

What makes the Amazon arrangement particularly notable is its layered structure. The November $38 billion AWS agreement centered on Nvidia chips. The new proposed deal would add Trainium to OpenAI's chip mix. OpenAI is committing to purchase both architectures simultaneously, diversifying its chip suppliers while Amazon diversifies its AI model exposure.

Amazon has already invested $8 billion in Anthropic, OpenAI's primary competitor. A $10 billion OpenAI investment would give Amazon significant positions in both leading model builders. Every dollar of AI inference eventually flows through cloud infrastructure. Amazon is positioning to collect revenue regardless of which model builder captures market share.

Microsoft retains exclusive rights to sell OpenAI's most advanced closed-weight models until the early 2030s. Amazon cannot market GPT-5 or successor models through its developer platform. Microsoft locked in that arrangement during early investment rounds and preserved it through the October 2024 restructuring.

The deal structure provides Amazon with three distinct benefits: Trainium chip adoption, cloud services revenue, and equity appreciation. AWS claims Trainium delivers 40% better price-performance than comparable Nvidia solutions. That claim has one significant validation at scale: Anthropic uses 500,000 Trainium2 chips through Amazon's Project Rainier, with plans to scale to over one million chips within the year. But Anthropic already sits within Amazon's investment portfolio, so the endorsement is not fully independent.

OpenAI adopting Trainium would validate AWS Neuron, the software development kit required to program Trainium chips. Neuron is less mature than Nvidia's CUDA ecosystem, which has become the default development environment for machine learning. Enterprise customers evaluating Trainium face switching costs and vendor lock-in concerns. If OpenAI runs frontier-scale training on Trainium, it signals that Neuron can support production workloads, reducing perceived risk for other potential adopters.

Reports indicate OpenAI also wants to sell Amazon an enterprise version of ChatGPT, potentially integrating shopping features into Amazon's consumer applications. Etsy, Shopify, and Instacart have signed similar ChatGPT integration deals. Amazon would join a growing list of e-commerce platforms licensing OpenAI capabilities while also investing in the company.

OpenAI's October 2024 restructuring made this arrangement possible. Microsoft previously held constraints over OpenAI's ability to raise capital and secure computing resources from competitors. The new corporate structure transforms OpenAI into a public benefit corporation controlled by a nonprofit with a financial stake in OpenAI's commercial success. Microsoft took a 27% ownership stake and surrendered some control over OpenAI's infrastructure choices.

Microsoft retained model distribution exclusivity. When enterprises want GPT-class capabilities through a major cloud provider, they purchase through Azure. Amazon can rent servers to OpenAI and sell it chips. Amazon cannot sell OpenAI's models. That contractual distinction carries more weight than the headline investment figure suggests.

Microsoft has also begun diversifying its model builder exposure. In November 2024, Microsoft announced it would invest up to $5 billion in Anthropic. Nvidia committed up to $10 billion to Anthropic in the same period. The major infrastructure providers are acquiring stakes in multiple model builders, creating cross-ownership patterns that complicate traditional competitive analysis.

Enterprise customers seeking to deploy AI models now face a web of interconnected relationships. Cloud providers own stakes in model builders. Model builders commit to purchasing infrastructure from multiple cloud providers. Customer payments flow through relationships where the vendor is simultaneously a customer, investor, and competitor of the company providing AI capabilities.

Trainium3 shipped in early December 2024, built on a 3-nanometer process. Amazon is pitching it as a viable alternative to Nvidia's H100s, with claimed improvements in both cost and energy consumption over the previous Trainium2 generation. The marketing materials look good. But Nvidia's real advantage has never been raw silicon performance. It's CUDA, the software stack that machine learning engineers have built their workflows around for a decade. Trainium runs on AWS Neuron, which requires developers to retool their code and accept single-vendor lock-in. Cheaper chips don't help much if migration eats the savings.
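The migration trade-off can be sketched as a break-even calculation. The Python below is a rough illustration, not a pricing model: it assumes AWS's "40% better price-performance" claim means 1.4 times the work per dollar, and the migration cost is a purely hypothetical figure chosen for the example.

```python
def savings_fraction(price_perf_gain: float) -> float:
    """Fraction of baseline spend saved when running the same workload.

    Assumption: "X better price-performance" means (1 + X) times the
    work per dollar, so the same work costs 1 / (1 + X) of the baseline.
    """
    if price_perf_gain <= -1:
        raise ValueError("price_perf_gain must be greater than -1")
    return 1 - 1 / (1 + price_perf_gain)


def break_even_spend(migration_cost: float, price_perf_gain: float) -> float:
    """Baseline compute spend at which savings equal a one-time migration cost."""
    saved = savings_fraction(price_perf_gain)
    if saved <= 0:
        raise ValueError("no savings, so migration never pays back")
    return migration_cost / saved


AWS_CLAIMED_GAIN = 0.40                 # AWS's claim, as quoted in the article
HYPOTHETICAL_MIGRATION_USD = 5_000_000  # illustrative only, not from the article

saved = savings_fraction(AWS_CLAIMED_GAIN)
threshold = break_even_spend(HYPOTHETICAL_MIGRATION_USD, AWS_CLAIMED_GAIN)

print(f"Savings on the same workload: {saved:.1%}")
print(f"Break-even baseline spend: ${threshold:,.0f}")
```

Under these assumptions, a 40% price-performance gain cuts the bill for the same work by about 28.6%, so a hypothetical $5 million migration pays back only after roughly $17.5 million of baseline compute spend. Teams spending less than that would not recover the cost of retooling.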

OpenAI's November AWS deal specified Nvidia hardware. If the new Amazon investment brings Trainium into OpenAI's production stack, it would represent the strongest external validation Amazon's chip division has received. Google's TPU chips power both Anthropic's models and Google's Gemini. Amazon needs a comparable reference customer outside its existing investment relationships to compete for enterprise AI workloads.

OpenAI is laying groundwork for an IPO that could push its valuation toward $1 trillion, according to Reuters. The company would join Apple, Microsoft, and Nvidia in that rarefied tier less than a decade after its founding. Whether the public markets agree with that assessment depends on variables no one can model with confidence.

The user numbers look strong. Enterprise adoption is real, with Goldman Sachs, McKinsey, and JPMorgan among the financial institutions running deployments. But OpenAI is spending on infrastructure at a pace that assumes it will dominate a market where Anthropic, Google, and Meta keep shipping competitive models. Llama derivatives are proliferating in the open-source ecosystem. The moat, if there is one, remains unclear.

When investors are also suppliers, and revenue flows back to those investors as infrastructure spending, traditional valuation metrics lose precision. OpenAI's stated revenue is real. So is the fact that most of it returns to the companies funding its infrastructure.

Amazon's proposed $10 billion investment adds another node to an interconnected funding network. For Amazon, downside risk is limited: the company receives cloud revenue, chip adoption, and equity exposure while maintaining its larger Anthropic position. For OpenAI, the calculation depends on whether AI capabilities continue scaling with compute investment and whether technical leadership holds against well-funded competitors.

Wall Street analysts have warned about speculative dynamics in AI infrastructure spending. Even if valuations contract, infrastructure providers have already locked in their contracts. Amazon, Microsoft, Google, Nvidia, and Oracle will collect contracted fees regardless of whether OpenAI's projected valuation holds.

OpenAI's next major infrastructure payment to AWS comes due in Q2 2025, the first installment on the $38 billion November commitment. By then, the Amazon investment terms will likely be finalized, the Trainium adoption timeline set, and the circular structure fully operational.

Q: What is Trainium and how does it compare to Nvidia chips?

A: Trainium is Amazon's custom AI chip designed for training machine learning models. AWS claims it delivers 40% better price-performance than Nvidia's comparable chips. The latest version, Trainium3, shipped in December 2024 on a 3-nanometer process. The main barrier to adoption isn't performance but software: Trainium requires AWS Neuron instead of Nvidia's widely used CUDA ecosystem.

Q: Why does CUDA give Nvidia such a strong market position?

A: CUDA is Nvidia's software development kit that machine learning engineers have built their workflows around for over a decade. Most AI training code, libraries, and tools assume CUDA compatibility. Switching to alternatives like AWS Neuron means rewriting code, retraining teams, and accepting vendor lock-in. The switching costs often outweigh any savings from cheaper hardware.

Q: Who else is building their own AI chips besides Amazon?

A: Google makes TPU chips, which power both its Gemini models and Anthropic's Claude. Microsoft is developing custom AI accelerators for Azure. Meta designs chips for its internal AI workloads. Several startups, including Cerebras and Groq, offer alternatives. Still, Nvidia controls roughly 80% of the AI training chip market through its H100 and newer Blackwell processors.

Q: What would OpenAI's $1 trillion IPO valuation mean for investors?

A: At $1 trillion, OpenAI would rank among the ten most valuable public companies globally, alongside Apple, Microsoft, and Nvidia. For early investors like Microsoft (27% stake), the returns would be substantial. But the valuation assumes OpenAI maintains dominance in a market where competitors keep closing technical gaps and infrastructure costs consume most revenue.

Q: How do these cross-investments affect enterprise customers choosing AI providers?

A: Enterprise customers face tangled vendor relationships. Your cloud provider likely owns stakes in multiple AI companies. The AI company you license from buys infrastructure from competing clouds. This creates potential conflicts of interest around pricing, feature access, and data handling. Companies like Goldman Sachs and JPMorgan are running deployments anyway, but the long-term implications remain unclear.
