💡 TL;DR - The 30 Seconds Version

💰 Blackstone alone has poured over $100 billion into data centers and related infrastructure, leading a Wall Street frenzy that rivals past tech bubbles.

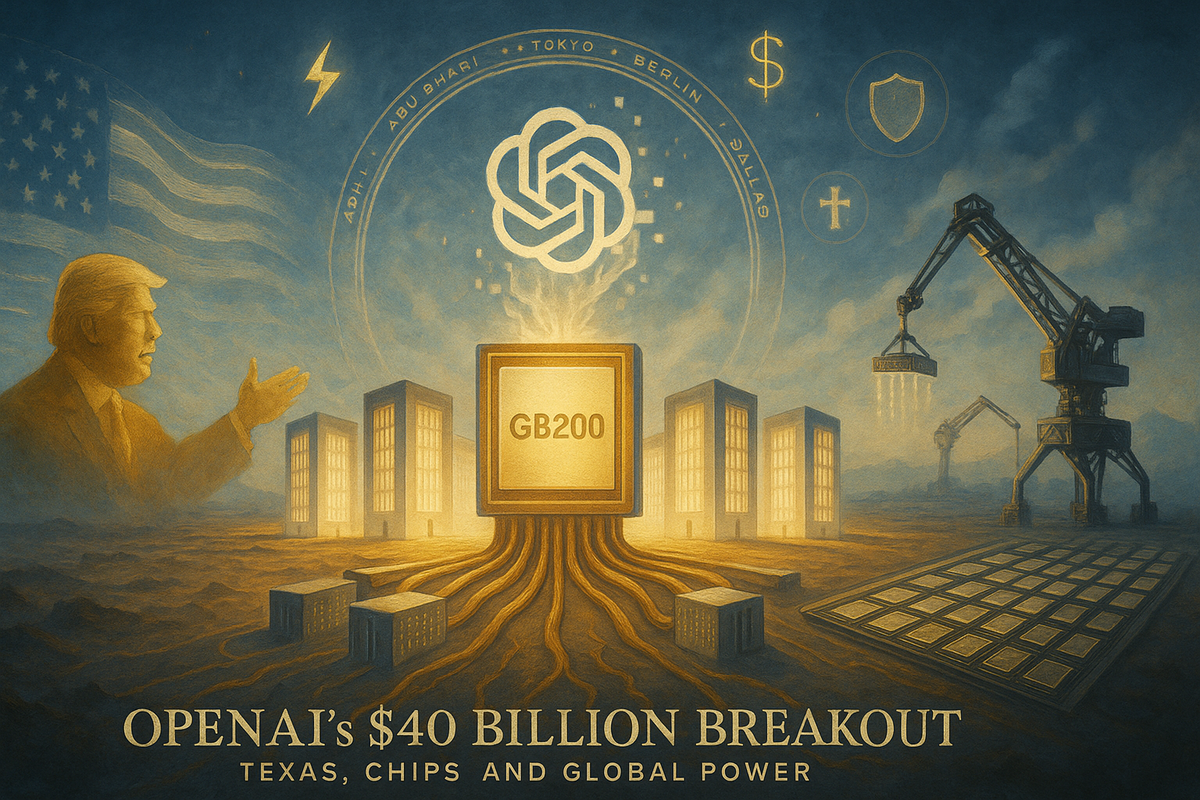

🏗️ OpenAI's Stargate project promises $500 billion for massive computing complexes, starting with 400,000 chips in Abilene, Texas by 2026.

⚠️ Microsoft paused data center construction and companies backed out of leases as analysts warn of "oversupply" in the market.

⚡ AI data centers use 10-20x more power per server than regular cloud computing and could consume 8% of US electricity by 2035.

🏘️ Towns like Abilene gave up 85% of potential property tax revenue for projects that create few permanent jobs once construction ends.

🎯 If AI efficiency improves faster than expected or demand stalls, these investments could become the next massive financial bubble to burst.

Wall Street has found its new obsession. Private equity firms are pouring hundreds of billions into AI data centers, convinced they're building the backbone of the future. But warning signs keep popping up like weeds after rain.

Blackstone leads the charge. The firm has sunk over $100 billion into data centers and related infrastructure. In 2021, it bought Quality Technology Services for $10 billion. Since ChatGPT launched, Blackstone has poured billions more into QTS, expanding its data centers ninefold in under four years.

The Scale Gets Absurd

The numbers get bigger every week. OpenAI, SoftBank, and Oracle just announced Stargate, a $500 billion project to build massive computing complexes. The first site, in Abilene, Texas, will consume 1.2 gigawatts of power, enough electricity for 750,000 homes.
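As a rough sanity check on the homes comparison, the snippet below divides the site's power by the number of homes to get the average draw per home that the comparison implies. It assumes the site pulls its full 1.2 gigawatts around the clock, which a real facility may not do; the function name is illustrative.

```python
# Rough check of the "1.2 gigawatts = 750,000 homes" comparison.
# Assumption: the Abilene site draws its full rated power continuously.
SITE_POWER_W = 1.2e9      # 1.2 gigawatts, as reported
HOMES = 750_000           # homes figure, as reported
HOURS_PER_YEAR = 24 * 365

def implied_home_draw_w(site_power_w: float, homes: int) -> float:
    """Average continuous power per home implied by the comparison, in watts."""
    return site_power_w / homes

draw_w = implied_home_draw_w(SITE_POWER_W, HOMES)
annual_kwh = draw_w * HOURS_PER_YEAR / 1000  # watt-hours -> kilowatt-hours

print(f"Implied average draw per home: {draw_w:,.0f} W")
print(f"Implied yearly use per home: {annual_kwh:,.0f} kWh")
```

The result, about 1.6 kilowatts per home or roughly 14,000 kWh a year, is the household load the comparison quietly assumes. Checking it against official household consumption statistics would show whether the 750,000 figure is conservative or generous.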

Chase Lochmiller, who runs the Abilene project, calls it "the largest infrastructure build in human history." His company, Crusoe, plans eight buildings housing 400,000 chips. Crews are working 24/7 to finish by mid-2026, using 155 earth-moving vehicles and a 600-ton crane that can lift the weight of 100 elephants.

The rush feels frantic because everyone wants to win the AI race. Microsoft, Meta, Google, Amazon, and others are all building competing data centers across the US and beyond. Each company has committed tens of billions, hoping to dominate what they see as the next decade's most important technology.

Warning Signs Flash Red

But cracks are showing. Microsoft recently paused data center construction in Ohio, leaving local officials scrambling. Some companies have backed out of leases entirely. A TD Cowen analyst warned of potential "oversupply" as projects pile up faster than demand.

Joe Tsai, chairman of Alibaba, said he's seeing "the beginning of some kind of bubble" in data center construction. His company needs AI infrastructure, so this isn't sour grapes from the sidelines.

Then came DeepSeek. In January, the Chinese company claimed its AI systems could run on far less computing power than everyone had assumed. If true, that would make these massive data centers look like expensive monuments to poor planning.

Sam Altman, OpenAI's CEO, downplayed DeepSeek's breakthrough. He argues that even if AI becomes more efficient, people will use it so much more that total demand will still grow. "If we had an AI that we could offer at one tenth of the price," he says, "people would use it 20 times as much."
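Altman's argument is easier to judge in numbers. The sketch below assumes the tenfold price cut comes entirely from a tenfold drop in the computing each use requires; under that assumption, net demand for data center capacity is usage growth divided by efficiency gain. The function name and the 5x comparison case are illustrative, not figures from OpenAI.

```python
# Net change in demand for computing power when AI gets cheaper.
# Assumption: price falls in step with the compute each use requires.
def compute_demand_multiplier(efficiency_gain: float, usage_gain: float) -> float:
    """efficiency_gain: times less compute per use; usage_gain: times more use."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return usage_gain / efficiency_gain

# Altman's scenario: one tenth of the price, 20 times the usage.
print(compute_demand_multiplier(efficiency_gain=10, usage_gain=20))
# A hypothetical weaker response: usage grows only 5x.
print(compute_demand_multiplier(efficiency_gain=10, usage_gain=5))
```

In Altman's scenario, demand for computing doubles. If usage grows by less than efficiency improves, demand shrinks, which is the case that would leave new capacity sitting idle.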

The Math Gets Murky

Maybe. But OpenAI lost $5 billion in 2024, making it hard to evaluate whether this math works. The company desperately needs its new infrastructure to start paying off.

The Energy Problem

The energy demands are staggering. AI data centers use 10 to 20 times more power per server than regular cloud computing. They must stay online 99.999% of the time, which allows only about five minutes of downtime per year. Cooling systems alone can consume millions of gallons of water daily.
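The five-minute figure follows directly from the availability target: the unavailable fraction times the number of minutes in a year. This sketch assumes an average year of 365.25 days; the tiers other than 99.999% are included only for comparison.

```python
# Downtime allowed per year at a given availability target.
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year, counting leap years

def downtime_minutes_per_year(availability: float) -> float:
    """availability is a fraction, e.g. 0.99999 for 'five nines'."""
    return (1 - availability) * MINUTES_PER_YEAR

for availability in (0.999, 0.9999, 0.99999):
    minutes = downtime_minutes_per_year(availability)
    print(f"{availability:.3%} uptime -> {minutes:.1f} minutes of downtime per year")
```

At 99.999% the budget works out to about 5.3 minutes a year, while dropping to 99.9% would allow nearly nine hours.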

Lochmiller admits the climate impact is serious. "None of those net zero pledges are going to be met by 2030," he says. "There's no way it's going to get to 100% carbon free power production to power all of the AI infrastructure being developed."

Towns Make Risky Bets

The local economics look questionable too. Abilene gave up 85% of its potential property tax revenue to land the Stargate project. Mayor Weldon White defends the deal, saying 15% of billions still beats 100% of nothing. But data centers don't employ many people once built. The mayor hopes for 400 to 1,200 jobs, mostly security guards and maintenance workers.
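The mayor's trade-off reduces to simple arithmetic: with an 85% abatement, the city collects 15% of the tax it would otherwise levy. The tax figure below is a hypothetical placeholder, not Abilene's actual assessment, and the function is illustrative.

```python
# Tax collected vs. forgone under a property tax abatement.
# HYPOTHETICAL: full_tax is a placeholder, not Abilene's real figure.
def split_abatement(full_tax: float, abatement: float = 0.85) -> tuple[float, float]:
    """Return (collected, forgone) for an abatement given as a fraction."""
    if not 0 <= abatement <= 1:
        raise ValueError("abatement must be between 0 and 1")
    collected = full_tax * (1 - abatement)
    return collected, full_tax - collected

collected, forgone = split_abatement(full_tax=10_000_000)
print(f"Collected: ${collected:,.0f}  Forgone: ${forgone:,.0f}")
```

The "100% of nothing" half of the argument rests on the counterfactual: it holds only if the project would have gone elsewhere without the abatement.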

Who Wins When the Music Stops?

Private equity faces its own puzzle. These firms typically buy companies and sell them within five to seven years. But who can afford to buy a data center complex worth tens of billions? Few investors have Blackstone's resources, and group deals get complicated fast.

One person already cashed out nicely. QTS founder Chad Williams, who started with one data center in Kansas in 2003, stepped down as CEO in March. His departure agreement pays him $3 billion.

The comparison to past bubbles is hard to avoid. Blackstone's president Jonathan Gray made his reputation buying foreclosed homes after the 2008 financial crisis, turning a $7 billion profit when housing recovered. This time feels different, though. The scale is bigger and the technology more uncertain.

Industry veterans remain split. Some see this as the internet build-out all over again—expensive in the short term but transformative in the long run. Others worry about overbuilding in a market where demand could shift quickly.

The global supply chain adds another layer of risk. Much of the steel and aluminum for data centers comes from China. President Trump's tariffs could drive up construction costs just as projects hit their stride. The most advanced chips still come from Taiwan, creating geopolitical vulnerabilities that didn't exist during previous infrastructure booms.

Meanwhile, the technology keeps evolving. Each breakthrough in AI efficiency could make existing data centers obsolete. Each delay in AI progress could leave investors holding very expensive buildings full of rapidly depreciating equipment.

SoftBank's Masayoshi Son, known for big bets that sometimes go spectacularly wrong, remains confident. "Sometimes I get crazily overexcited and make a mistake, like WeWork," he admits. "But when you have conviction and passion, you learn from those mistakes."

The next few years will show whether this represents visionary infrastructure investment or the latest example of Wall Street's tendency to turn promising technologies into speculative frenzies. Either way, a lot of money is about to find out.

Why this matters:

- Wall Street is making its biggest infrastructure bet in decades on technology that's still evolving rapidly—and the early warning signs suggest this could end badly for investors and communities alike.

- If the AI boom stalls or efficiency breakthroughs reduce demand, hundreds of billions in investments could turn into very expensive mistakes, creating the next major financial bubble.

❓ Frequently Asked Questions

Q: How much does it actually cost to build one of these AI data centers?

A: Individual AI data center buildings cost $2-5 billion each. Stargate's 400,000 chips alone will cost $15-20 billion. Total project costs, including power infrastructure, can exceed $50 billion for large complexes. These figures dwarf the $100-500 million cost of a traditional data center.
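Dividing the chip budget by the chip count gives a rough per-chip price. This assumes the $15-20 billion covers the chips alone; if it also includes servers or networking gear, the true per-chip price is lower.

```python
# Implied price per chip from the Stargate estimates.
CHIP_COUNT = 400_000
CHIP_BUDGET_USD = (15e9, 20e9)  # low and high estimates

def cost_per_chip(total_usd: float, chips: int) -> float:
    return total_usd / chips

low, high = (cost_per_chip(total, CHIP_COUNT) for total in CHIP_BUDGET_USD)
print(f"Implied cost per chip: ${low:,.0f} to ${high:,.0f}")
```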

Q: Why do AI data centers need so much more power than regular ones?

A: Racks of AI chips (GPUs) draw 130 kilowatts each, versus 2-4 kilowatts for racks of traditional servers. That's an increase of roughly 30 to 65 times. A ChatGPT query uses about 10x more energy than a Google search. The chips also run much hotter, requiring massive cooling systems.
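Dividing the rack figures gives the size of the jump. Because nearly all the electricity a server draws ends up as heat, the same ratio roughly describes how much more heat the cooling system must remove per rack. The names below are illustrative.

```python
# Power draw of an AI rack relative to a traditional rack.
AI_RACK_KW = 130
TRADITIONAL_RACK_KW = (2, 4)  # low and high figures

def power_ratio(ai_kw: float, traditional_kw: float) -> float:
    return ai_kw / traditional_kw

ratios = sorted(power_ratio(AI_RACK_KW, kw) for kw in TRADITIONAL_RACK_KW)
print(f"An AI rack draws {ratios[0]:.1f}x to {ratios[1]:.1f}x as much power")
```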

Q: What happens if these companies can't pay their data center leases?

A: Most leases run 15-20 years with strict terms, but Microsoft already paused construction in Ohio and some companies have backed out entirely. If major tenants default, private equity firms like Blackstone could face billions in losses on assets that are hard to resell.

Q: How many jobs do these data centers actually create once they're built?

A: Far fewer than promised. Abilene hopes for 400-1,200 jobs from a $100+ billion project, mostly security and maintenance roles. Data centers are highly automated. Construction creates thousands of temporary jobs, but permanent employment is minimal relative to the investment.

Q: Could AI become more efficient and make these massive data centers unnecessary?

A: Yes. DeepSeek claimed AI can run on much less computing power than expected. If this trend continues, current data centers could become expensive white elephants. However, efficiency gains might just lead to more AI usage, keeping total demand high.