💡 TL;DR - The 30-Second Version

🚨 Cloudflare accuses Perplexity of using fake Chrome browser identities to scrape websites that banned AI crawlers through robots.txt files.

🔬 Cloudflare claims it tested this by creating secret domains with crawler bans, then asking Perplexity questions about the hidden content, which it answered accurately.

📊 The alleged stealth operation generates 3-6 million daily requests across tens of thousands of domains, on top of the 20-25 million daily requests from Perplexity's declared crawlers.

💬 A Perplexity spokesperson called Cloudflare's report a "publicity stunt" and claimed the stealth bot "isn't even ours," without providing evidence.

🛡️ Over 2.5 million websites now use Cloudflare's tools to block AI crawlers, and the company has removed Perplexity from its verified bot program.

⚔️ The dispute reflects growing tension between AI companies that need data and publishers that are losing traffic, with Cloudflare data showing some crawlers fetching 60,000 pages for every visitor they send back.

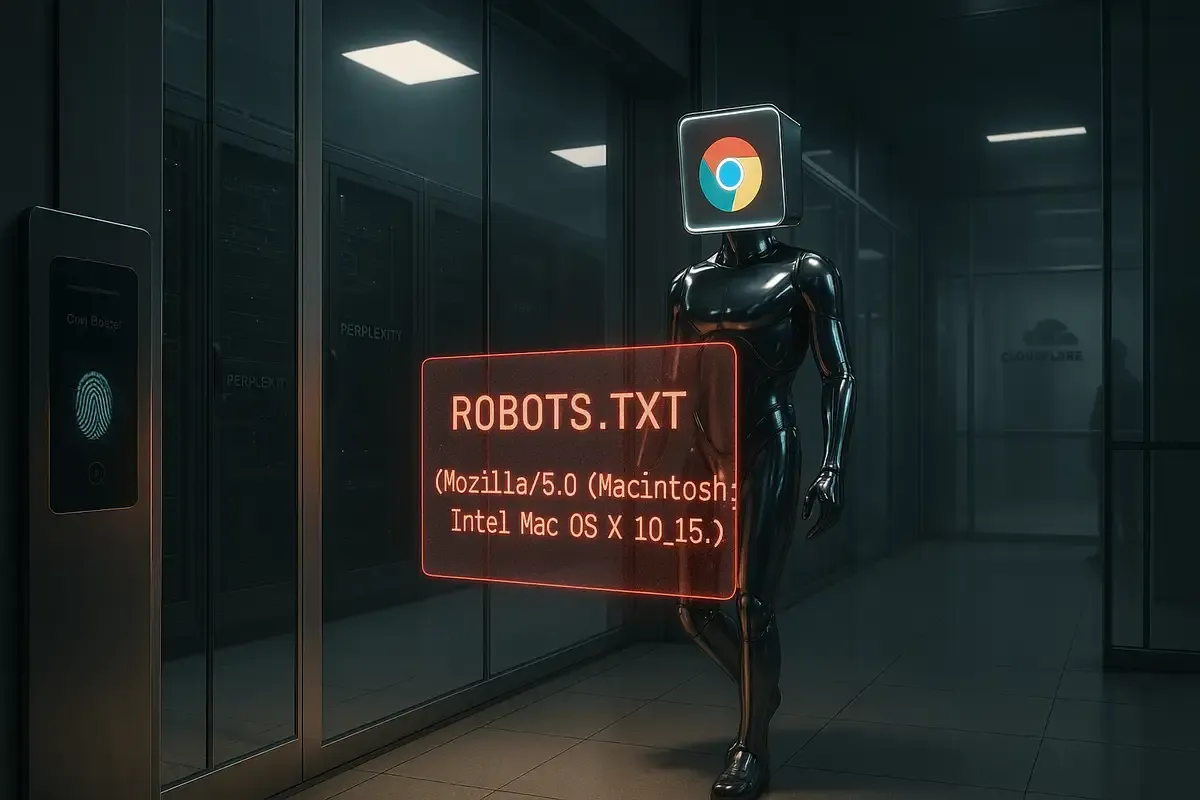

Cloudflare accused Perplexity of using stealth crawlers that pretend to be regular Chrome browsers to scrape websites that banned AI bots.

The web security company published claims Monday alleging the AI search startup deploys fake browser identities when its official crawlers get blocked. According to Cloudflare, when Perplexity can't access content through its declared "PerplexityBot," it switches to a generic user agent that looks like someone browsing from a Mac using Chrome.

Cloudflare says this represents a systematic operation spanning tens of thousands of domains and millions of daily requests. Perplexity disputes these allegations.

The Test That Started It All

Cloudflare says it investigated after customers complained that Perplexity was still reaching their content even though they had blocked its crawlers. The company says it then designed a test to verify the behavior.

According to Cloudflare, researchers registered brand-new domains that search engines had never indexed. They kept the sites undiscoverable, with no public links, and added robots.txt files telling all crawlers to stay away.

Cloudflare then asked Perplexity questions about the secret content on these hidden domains. The company alleges the AI service provided detailed, accurate answers about content it should never have accessed.
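A robots.txt that tells every crawler to stay away is only two lines. The minimal sketch below shows how a compliant client would evaluate such a file using Python's standard-library `urllib.robotparser`. The file contents mirror the "ban everything" rule described above, but the URL is a hypothetical placeholder on the reserved `example.com` domain, not one of Cloudflare's actual test domains.

```python
from urllib.robotparser import RobotFileParser

# A robots.txt that disallows every path for every crawler, like the rule
# Cloudflare says it placed on its test domains.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

def allowed(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly and marks the rules as loaded,
    # so this sketch makes no network request.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)

# Hypothetical page on a reserved documentation domain.
URL = "https://example.com/hidden-page"

for agent in ("PerplexityBot", "Perplexity-User", "ChatGPT-User"):
    print(agent, allowed(agent, URL))  # each line ends in False
```

The parser only reports what the file asks for. Nothing forces a client to run this check at all, which is why Cloudflare's test hinged on whether Perplexity's answers reflected content it should never have fetched.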

The Alleged Deception Method

Cloudflare claims Perplexity's scheme follows a predictable pattern. First, it tries accessing websites using its legitimate crawler identities: "PerplexityBot" and "Perplexity-User." These generate about 20-25 million daily requests across Cloudflare's network.

When those are blocked, Cloudflare alleges, Perplexity switches tactics. The company claims it deploys crawlers that identify themselves with a generic browser user agent string, "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36", which matches Chrome running on macOS.

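According to Cloudflare, this fake identity generates another 3-6 million daily requests. The alleged stealth crawlers rotate through IP addresses that aren't on Perplexity's published IP list and switch between autonomous system numbers (ASNs), the identifiers of the networks the traffic comes from, to avoid detection.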
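Why would switching user agents work? A robots.txt that blocks crawlers by name says nothing about a client that presents itself as an ordinary browser. The sketch below, again using Python's `urllib.robotparser`, checks a hypothetical robots.txt that blocks only Perplexity's two declared crawler names. It passes the crawlers' short product tokens rather than full User-Agent header strings, because Python's parser compares only the text before the first "/" in the agent string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks Perplexity's two declared crawlers by
# name and sets no rules for anyone else.
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: Perplexity-User
Disallow: /
"""

# The generic Chrome-on-macOS string quoted in Cloudflare's report.
GENERIC_CHROME_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/124.0.0.0 Safari/537.36"
)

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

URL = "https://example.com/article"  # hypothetical page

for agent in ("PerplexityBot", "Perplexity-User", GENERIC_CHROME_UA):
    # The browser string is reduced to "mozilla", which matches no group,
    # and with no "*" group the parser falls back to "allowed".
    print(agent[:40], parser.can_fetch(agent, URL))
# PerplexityBot and Perplexity-User print False; the Chrome string prints True.
```

This is why Cloudflare says it had to rely on signals beyond the user agent string to tie the browser-like traffic back to Perplexity.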

Perplexity Pushes Back

Perplexity spokesperson Jesse Dwyer called Cloudflare's findings a "publicity stunt" and claimed the stealth bot "isn't even ours." The company hasn't provided evidence to support this denial.

Cloudflare says it used machine learning and network analysis to identify the suspicious crawlers. The company claims that once the stealth bots were blocked, Perplexity's answers became less detailed, which Cloudflare presents as proof the blocks worked.

Comparing AI Companies

Cloudflare says it ran the same test on OpenAI to see how other AI companies respond to website restrictions. According to the report, the contrast was stark.

Cloudflare claims ChatGPT-User properly fetched the robots.txt file and stopped crawling when blocked. The company says it saw no follow-up attempts from mystery browsers or rotating IP addresses.

This behavior aligns with RFC 9309, the IETF standard for the Robots Exclusion Protocol, which specifies how crawlers should identify themselves with a user-agent product token and follow a site's robots.txt rules.
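RFC 9309's core matching rules are short enough to sketch. The function below is a simplified illustration, not a complete parser: it ignores the `*` and `$` wildcards and percent-encoding, and `ExampleBot` and the sample robots.txt are hypothetical. It shows the two rules that matter here: a crawler obeys the group that names its own product token (matched case-insensitively, falling back to the `*` group), and within that group the longest matching path wins, with `Allow` winning ties.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Group:
    """One robots.txt group: the user agents it names and its rules."""
    agents: list[str]
    # Each rule is (is_allow, path_prefix); wildcards are not handled here.
    rules: list[tuple[bool, str]] = field(default_factory=list)


def parse_groups(text: str) -> list[Group]:
    """Split robots.txt text into groups (simplified, no wildcard support)."""
    groups: list[Group] = []
    current: Group | None = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            # Consecutive user-agent lines share one group; a user-agent line
            # that follows rules starts a new group.
            if current is None or current.rules:
                current = Group(agents=[])
                groups.append(current)
            current.agents.append(value.lower())
        elif key in ("allow", "disallow") and current is not None:
            current.rules.append((key == "allow", value))
    return groups


def is_allowed(text: str, product_token: str, path: str) -> bool:
    """Apply simplified RFC 9309 matching for one crawler and one path."""
    groups = parse_groups(text)
    token = product_token.lower()
    # Use every group naming this token; if none does, use the "*" groups.
    matching = [g for g in groups if token in g.agents] or [
        g for g in groups if "*" in g.agents
    ]
    rules = [rule for g in matching for rule in g.rules]
    best = None  # (matched prefix length, is_allow)
    for is_allow, prefix in rules:
        if prefix and path.startswith(prefix):
            candidate = (len(prefix), is_allow)
            # Longer match wins; on equal length, True > False so Allow wins.
            if best is None or candidate > best:
                best = candidate
    # No matching rule means the path is allowed.
    return True if best is None else best[1]


ROBOTS_TXT = """\
User-agent: *
Disallow: /

User-agent: ExampleBot
Disallow: /private/
Allow: /private/press/
"""

print(is_allowed(ROBOTS_TXT, "ExampleBot", "/private/press/release.html"))  # True
print(is_allowed(ROBOTS_TXT, "ExampleBot", "/private/notes.html"))  # False
print(is_allowed(ROBOTS_TXT, "PerplexityBot", "/index.html"))  # False
```

Note what the standard assumes: the crawler looks up its own honest product token. A client that presents a browser user agent never selects the group written for it, which is the gap at the center of Cloudflare's allegations.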

The Broader Publisher Battle

This isn't Perplexity's first scraping controversy. The company has faced accusations from major publishers including the BBC, The New York Times, and Wired. Developer Robb Knight documented what he claimed was similar stealth crawling behavior targeting his sites in June 2024.

The conflict reflects growing tension between AI companies and content creators. AI companies need massive amounts of data to train their models and answer user questions. Publishers say their content is consumed without compensation while AI answers draw away the readers who would otherwise visit their sites.

Cloudflare CEO Matthew Prince has called this an "existential threat" to publishers. The company's data shows some AI crawlers scrape 60,000 pages for every visitor they send back to publishers.

The Defense Arsenal Grows

Cloudflare isn't just making accusations. The company has built tools for publishers fighting back against aggressive AI crawlers.

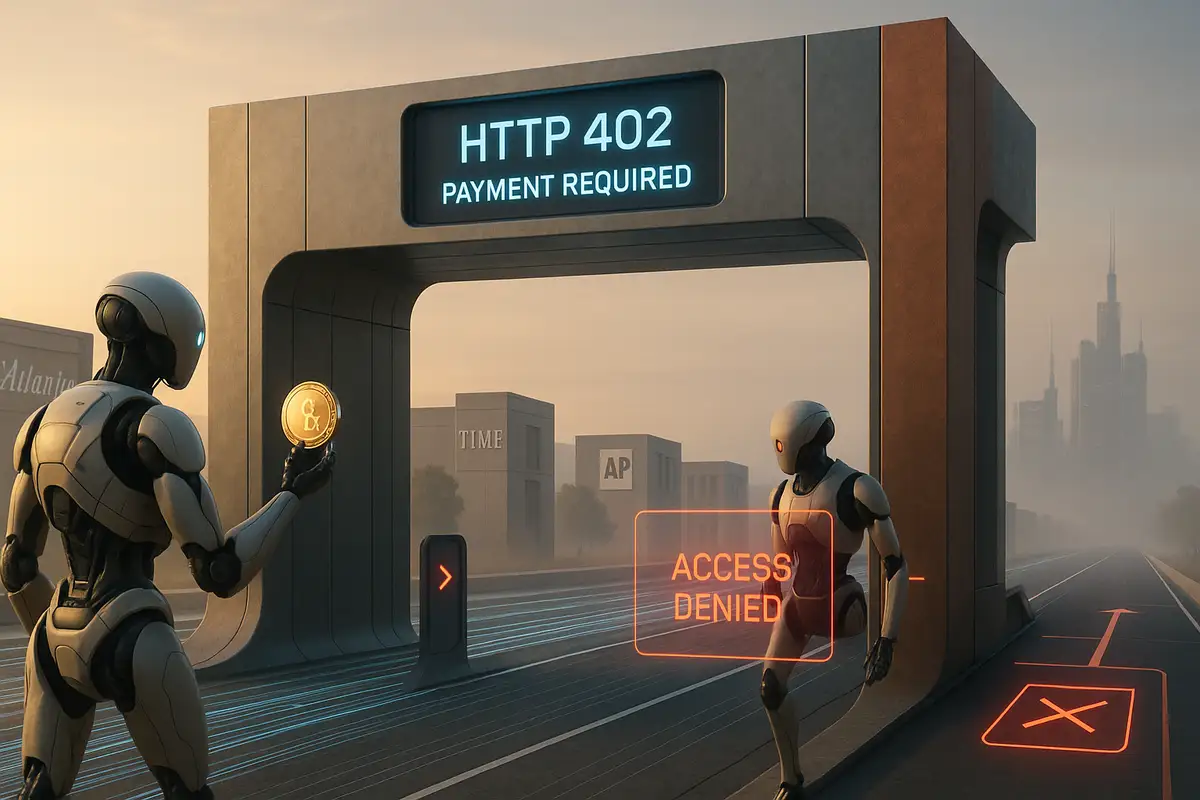

The company's "AI Labyrinth" is designed to lure non-compliant bots into mazes of AI-generated decoy pages, wasting their resources. Its "Pay Per Crawl" system lets websites charge AI companies for access, using the HTTP 402 "Payment Required" status code to tell crawlers that content requires payment.
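The HTTP 402 idea can be sketched as a server-side decision: a self-identified AI crawler that hasn't agreed to a price gets a 402 with a price quote instead of the page. This is a hypothetical illustration, not Cloudflare's implementation; the header names, the price, and the list of crawler tokens below are all assumptions made up for the example.

```python
from http import HTTPStatus

# Hypothetical values for illustration only; Cloudflare's actual Pay Per
# Crawl headers and payment flow are not modeled here.
AI_CRAWLER_TOKENS = ("perplexitybot", "gptbot")
PRICE_HEADER = "X-Example-Crawl-Price"      # hypothetical response header
PAYMENT_HEADER = "X-Example-Crawl-Payment"  # hypothetical request header
PRICE_USD = "0.01"                          # hypothetical per-request price


def respond(user_agent: str, headers: dict[str, str]) -> tuple[int, dict[str, str]]:
    """Return the status code and extra response headers for one request."""
    ua = user_agent.lower()
    if not any(token in ua for token in AI_CRAWLER_TOKENS):
        # Not a self-identified AI crawler: serve the page normally.
        return HTTPStatus.OK, {}
    if headers.get(PAYMENT_HEADER) == PRICE_USD:
        # The crawler has accepted the quoted price.
        return HTTPStatus.OK, {}
    # Quote the price and withhold the content until the crawler agrees.
    return HTTPStatus.PAYMENT_REQUIRED, {PRICE_HEADER: PRICE_USD}


chrome = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) Chrome/124.0.0.0"
print(int(respond("PerplexityBot/1.0", {})[0]))  # 402
print(int(respond("PerplexityBot/1.0", {PAYMENT_HEADER: PRICE_USD})[0]))  # 200
print(int(respond(chrome, {})[0]))  # 200
```

The last line shows the weakness: any scheme keyed on the user agent only charges crawlers that identify themselves honestly, so a crawler presenting a generic browser string would be served for free.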

Over 2.5 million websites now use Cloudflare's tools to block AI crawlers. The company has also removed Perplexity from its verified bot program and deployed new techniques to catch alleged stealth crawling.

Timing and Valuation Questions

The allegations come at an awkward time for Perplexity. The startup reached an $18 billion valuation in July amid aggressive expansion, including a $200/month premium subscription and partnerships with major telecom companies.

CEO Aravind Srinivas has positioned the company as an innovator pushing the boundaries of AI search. But these latest allegations add to questions about whether Perplexity's rapid growth comes at the expense of web standards.

The company recently launched its own "Comet" browser and struck deals to give free subscriptions to 360 million Airtel customers in India. Security audits have also flagged vulnerabilities in Perplexity's Android app.

What Publishers Can Do

Website owners have options beyond robots.txt, according to Cloudflare. The company says its bot management system catches most stealth crawling attempts, and customers can set up challenge pages that let real humans through while blocking suspicious automated traffic.

Cloudflare says it has also added signatures for Perplexity's alleged stealth crawlers to its managed rule for blocking AI crawlers, so the protection is available even to free customers.
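The layered approach described above (block declared bots by name, challenge suspicious traffic that looks like a browser) can be sketched as a small decision function. This is a hypothetical policy, not Cloudflare's rule set: real bot management combines far more signals, and the "flagged" networks here are RFC 5737 documentation ranges standing in for whatever data a site actually has.

```python
import ipaddress

# Hypothetical policy for illustration only.
DECLARED_AI_BOTS = ("perplexitybot", "perplexity-user")
# Networks a site has flagged as sources of suspicious automated traffic.
# RFC 5737 documentation ranges stand in for real data here.
FLAGGED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def decide(user_agent: str, client_ip: str) -> str:
    """Return 'block', 'challenge', or 'allow' for one request."""
    ua = user_agent.lower()
    if any(bot in ua for bot in DECLARED_AI_BOTS):
        # Declared crawlers can be blocked outright by name.
        return "block"
    ip = ipaddress.ip_address(client_ip)
    if any(ip in net for net in FLAGGED_NETWORKS):
        # Browser-like traffic from a flagged network gets a challenge page,
        # which a real person can pass but which is meant to stop automation.
        return "challenge"
    return "allow"


chrome = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) Chrome/124.0.0.0"
print(decide("Perplexity-User/1.0", "203.0.113.5"))  # block
print(decide(chrome, "192.0.2.44"))  # challenge
print(decide(chrome, "203.0.113.5"))  # allow
```

The hard part in practice is the second rule: deciding which browser-like requests are suspicious is exactly what Cloudflare says it uses machine learning and network analysis for.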

But the fundamental problem remains: as AI companies allegedly get more sophisticated at evading blocks, defensive techniques must evolve just as quickly.

Why this matters:

• These allegations reveal potential conflicts between AI companies' data needs and established web standards, though we only have Cloudflare's side of the story

• Publishers now have tools and techniques to fight back against alleged stealth scraping, but the arms race between AI companies and content creators is just getting started

❓ Frequently Asked Questions

Q: What exactly is robots.txt and why should companies follow it?

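A: Robots.txt is a plain-text file at the root of a website that tells crawlers which pages they may access. Introduced in 1994 and formalized by the IETF as RFC 9309 in 2022, it has become the basic way websites set rules for automated visitors. It isn't access control: compliance is voluntary, so the system only works if crawlers identify themselves honestly and follow it, as has been standard web practice for 30 years.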

Q: How can we verify Cloudflare's claims independently?

A: Independent verification is difficult since it requires technical analysis of web traffic patterns. Other security firms or researchers would need to run similar tests to confirm or dispute Cloudflare's findings about alleged stealth crawling behavior.

Q: What evidence has Perplexity provided to support their denial?

A: Perplexity hasn't provided technical evidence to support their claim that the stealth bot "isn't even ours." They also haven't explained how their service could answer questions about blocked content without accessing it somehow.

Q: How common is alleged stealth crawling among AI companies?

A: In Cloudflare's testing, OpenAI's ChatGPT-User agent respected robots.txt blocks and didn't attempt stealth crawling. One comparison can't show how widespread the behavior is, but it suggests the alleged tactics aren't universal among major AI companies, which makes Perplexity's case stand out.

Q: Can regular website owners protect themselves from alleged stealth crawling?

A: Robots.txt alone isn't enough. Website owners need technical tools such as Cloudflare's bot management system, which the company says catches stealth crawlers automatically. Challenge pages can also block suspicious bots while letting real humans through.

Q: Is this alleged behavior illegal?

A: The law isn't clear yet. Breaking robots.txt rules isn't automatically a crime, but using fake identities to bypass website security might violate computer fraud laws. The bigger issue is potentially ignoring 30 years of web standards.

Q: Why should we trust Cloudflare's claims about this?

A: Cloudflare protects millions of websites and has technical tools to analyze web traffic patterns. However, the company also sells bot protection services, so they have a business interest in highlighting these issues.

Q: How much money are publishers allegedly losing to AI crawlers?

A: Cloudflare claims some AI crawlers scrape 60,000 pages for every visitor they send back to publishers. Since traditional search sends an estimated 20-40% of website traffic, a shift toward AI answers could cost publishers billions in ad revenue industry-wide, though that figure is a rough extrapolation rather than a measured loss.