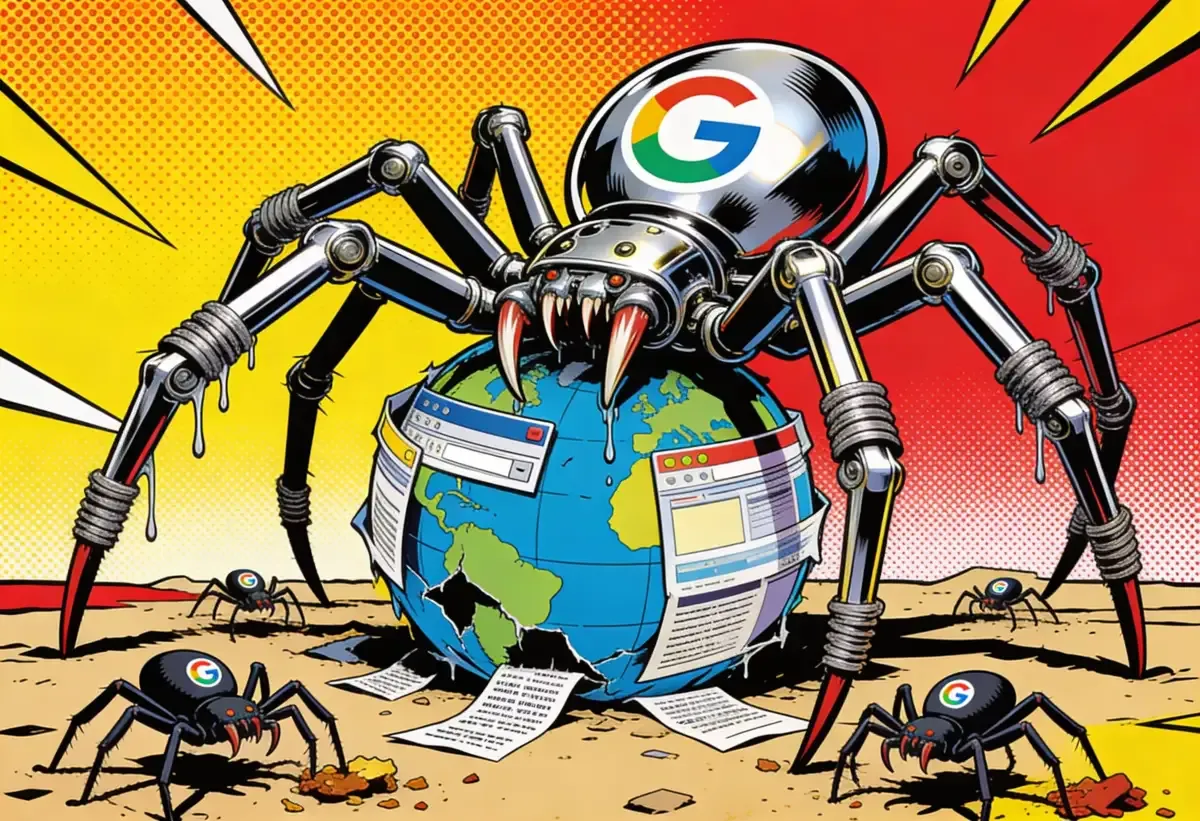

Cloudflare's 2025 data shows Googlebot ingests more content than all other AI bots combined. Publishers who want to block Google's AI training face an impossible choice: allow it, or lose search visibility entirely. The structural advantage runs deeper than most coverage has acknowledged.

Cloudflare's sixth annual Year in Review reported global internet traffic surged 19 percent in 2025. Most coverage focused on the top-line growth, the record DDoS attacks, the post-quantum encryption milestone. The AI crawler data buried deeper in the report tells a different story.

Google's crawler now ingests more web content than every other AI bot combined. Publishers have no practical way to stop it.

Googlebot crawled 11.6 percent of unique web pages in Cloudflare's October-November sample. More than three times OpenAI's GPTBot at 3.6 percent. Nearly 200 times PerplexityBot's 0.06 percent. When Cloudflare analyzed HTML request traffic, Googlebot alone accounted for 4.5 percent of all requests. Every other AI bot Cloudflare tracked, collectively, managed 4.2 percent.

Reports describe an "AI bot war" with multiple combatants. The data shows a single dominant player operating at a scale no competitor approaches.

Key Takeaways

• Googlebot crawled 11.6% of sampled web pages, more than 3x OpenAI's GPTBot. Publishers can't block Google's AI training without losing search visibility.

• AI crawlers fetch content up to 100,000 times for every referral they send back (100,000:1). Traditional search operates between 3:1 and 30:1.

• Civil society organizations became the most attacked sector in 2025, receiving 4.4% of mitigated traffic as attackers target institutions with social influence.

• Government-directed shutdowns caused 83 of 174 major internet outages observed globally, with exam-cheating prevention a common justification.

Google's advantage isn't merely scale. It's architectural.

Googlebot crawls for two purposes simultaneously: traditional search indexing and AI model training. Site operators who want to block Google's AI training have no mechanism to do so without also blocking Google's search crawler. Given Google's continued dominance in search referrals, blocking Googlebot means accepting near-invisibility online. Marketing teams watch search referral metrics daily. Those numbers crater without Googlebot access.

Cloudflare states it plainly: publishers are "essentially unable to block Googlebot's AI training without risking search discoverability."

Compare that to what publishers can do with other AI crawlers. GPTBot, ClaudeBot, and CCBot topped the list of fully disallowed user agents across the top 10,000 domains. Publishers blocked them freely. Google's AI training they couldn't block at all, because the technical architecture doesn't allow the two uses to be separated.
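robots.txt rules are keyed by user-agent token, not by what the crawler will do with the page. A minimal sketch using Python's standard-library `urllib.robotparser` shows the consequence: a publisher can shut out GPTBot, ClaudeBot, and CCBot by name, but the only Google lever is a rule for the Googlebot token, which stops search indexing along with everything else. The publisher domain, URL, and both policies are hypothetical, and Python's parser is simpler than the one Google runs in production.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical publisher policy: block three AI crawlers by name,
# allow every other agent (Googlebot included).
BLOCK_AI_BOTS = """\
User-agent: GPTBot
User-agent: ClaudeBot
User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""

# The only Google-specific lever robots.txt offers: a rule for the Googlebot
# token. It covers every fetch made under that token, search indexing included.
BLOCK_AI_BOTS_AND_GOOGLEBOT = BLOCK_AI_BOTS + """
User-agent: Googlebot
Disallow: /
"""

AGENTS = ["GPTBot", "ClaudeBot", "CCBot", "Googlebot"]
URL = "https://publisher.example/news/article"


def allowed(robots_txt: str, agents: list, url: str) -> dict:
    """Map each user-agent token to whether robots_txt lets it fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() marks the file as read, so can_fetch() can answer
    return {agent: parser.can_fetch(agent, url) for agent in agents}


print(allowed(BLOCK_AI_BOTS, AGENTS, URL))
# {'GPTBot': False, 'ClaudeBot': False, 'CCBot': False, 'Googlebot': True}
print(allowed(BLOCK_AI_BOTS_AND_GOOGLEBOT, AGENTS, URL))
# {'GPTBot': False, 'ClaudeBot': False, 'CCBot': False, 'Googlebot': False}
```

The first policy leaves Googlebot free to fetch the article; the second blocks it outright. Nothing in the format lets a rule say "index this for search, but don't train on it."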

Two tiers emerge. Smaller AI companies negotiate access, respect robots.txt, and accept doors slammed in their faces. Google bundles AI crawling with a service nobody can refuse. The DOJ's antitrust case against Google, which went to trial in September 2023 and resulted in Judge Amit Mehta's August 2024 ruling that Google illegally monopolized the search market, examined a related dynamic: default distribution agreements that foreclose competition regardless of product quality. The crawler bundling operates on similar logic.

Cloudflare tracks how often AI platforms crawl sites versus how often they send visitors back. The gap is enormous.

Anthropic's ClaudeBot: ratios between 25,000:1 and 100,000:1 during the second half of 2025. A hundred thousand crawls to grab content. One referral in return. OpenAI peaked at 3,700:1 in March. Perplexity stayed generally below 400:1 and dropped under 200:1 from September onward, which helps explain why the company keeps positioning itself as the publisher-friendly option.

Google's traditional search crawling looks nothing like this. Ratios between 3:1 and 30:1 all year. At their peaks, Anthropic's and OpenAI's crawlers extracted at rates hundreds or thousands of times higher than search ever did.
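The ratio itself is simple arithmetic: crawls divided by referrals over the same period. The sketch below, assuming Python, computes it from raw counts and then compares the ratios quoted above against both ends of search's 3:1 to 30:1 band. The function names and the raw counts are illustrative; the ratio values are the figures cited in the text, not raw Cloudflare data.

```python
# Crawls per referral, as reported in the text (100_000 means 100,000:1).
SEARCH_BEST, SEARCH_WORST = 3, 30  # Google search crawling, all of 2025
AI_RATIOS = {
    "ClaudeBot, H2 2025 high": 100_000,
    "ClaudeBot, H2 2025 low": 25_000,
    "OpenAI, March peak": 3_700,
    "Perplexity, typical ceiling": 400,
}


def crawl_to_referral_ratio(crawls: int, referrals: int) -> float:
    """Pages crawled per visitor referred back; infinite if none were referred."""
    if referrals == 0:
        return float("inf")
    return crawls / referrals


def times_search(ratio: float, search_ratio: float) -> float:
    """How many times more extractive a ratio is than a search benchmark."""
    return ratio / search_ratio


# Hypothetical raw counts for one site: 50,000 crawls, 2 referrals.
print(crawl_to_referral_ratio(50_000, 2))  # 25000.0

for name, ratio in AI_RATIOS.items():
    low = times_search(ratio, SEARCH_WORST)  # vs. search's least favorable 30:1
    high = times_search(ratio, SEARCH_BEST)  # vs. search's most favorable 3:1
    print(f"{name}: {low:,.0f}x to {high:,.0f}x search")
```

Against search's least favorable 30:1, ClaudeBot's 100,000:1 works out to roughly 3,300 times more extraction and OpenAI's 3,700:1 to roughly 120 times. Perplexity's 400:1 comes to about 13 times, which is why its numbers read as comparatively tame.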

The obvious defense: search engines have had decades to optimize referrals, while AI assistants are newer. As AI platforms add search features, referral rates should theoretically improve. Cloudflare's data shows some decline in OpenAI's ratios as ChatGPT search usage grew. But whether extraction remains the default will shape web economics for years.

Religious institutions. Civic organizations. Libraries. For the first time, these groups became the most attacked sector online, receiving 4.4 percent of global mitigated traffic across 2025. Their share started the year under 2 percent, jumped past 17 percent in late March, and hit 23.2 percent in early July.

Cloudflare's explanation: sensitive user data with potential financial value. But there's a simpler reason these organizations get hit. A 2024 NTEN survey found that 59 percent of nonprofits spend less than $50,000 annually on technology. Security gets a fraction of that budget. Most lack dedicated security staff. The servers running donor databases at a regional food bank don't get the same hardening as JPMorgan's infrastructure.

Cloudflare runs Project Galileo, which gives free security services to vulnerable groups. The attack statistics reflect what that program catches. Nonprofits that don't use Cloudflare don't show up in its data at all.

Something else is happening with target selection. Ransomware crews have figured out that hitting a hospital creates chaos that extends far beyond stolen records. A breach at a voter registration nonprofit lands in the news cycle. Gambling sites, which topped attack charts in 2024, saw their share drop by more than half. Attackers moved toward targets where the damage radiates.

Of 174 major internet outages Cloudflare observed in 2025, 83 came from governments deliberately killing connectivity. Access Now and other digital rights groups have tracked this pattern for years. What's changed is the casualness of it.

Iraq shut down the internet so students couldn't cheat on exams. So did Syria. So did Sudan. Libya and Tanzania went dark during civil unrest. Afghanistan's Taliban cited immorality prevention. Some shutdowns were total blackouts. Others used throttling. Technically connected, practically useless. You could load a login page but couldn't authenticate. Email clients would spin indefinitely.

The logic has shifted. Governments now treat connectivity as something they grant, not something citizens possess. Exam integrity apparently outweighs what businesses lose when they can't process transactions for 48 hours.

Cable cuts dropped nearly 50 percent as an outage cause compared to previous years, while power failures doubled. The cables themselves keep getting more resilient. The political commitment to keeping networks running keeps getting weaker.

Spain topped Cloudflare's internet quality rankings with average download speeds exceeding 300 Mbps. Romania came second. Then Portugal, Denmark, Hungary, Sweden. All above 200 Mbps.

Notice what's missing from that list. The United States. The UK barely cracks the top tier. A decade of fiber buildout in southern and eastern Europe paid off in ways that American cable company half-measures didn't.

Starlink traffic doubled in 2025 after SpaceX launched service in more than 20 new countries. Traffic spiked hard whenever a new market opened. Mongolia. Kenya. Places where digging trenches for fiber costs more than the potential revenue justifies.

But satellite won't close the gap. Latency characteristics differ fundamentally from fiber. Good enough for email, video calls, basic browsing. Not enough for the real-time applications that increasingly define what high-bandwidth populations do online. The divide used to be about access. Now it's about what that access lets you do.

Post-quantum encryption hit 52 percent of human-generated traffic to Cloudflare, up from 29 percent in January. Most of that jump came from a single iOS update that enabled hybrid post-quantum key agreement in TLS by default. When Apple flips a switch, adoption curves move.

The threat this addresses: adversaries capturing encrypted traffic today, storing it on cheap hard drives, and waiting for quantum computers that can crack RSA and elliptic curve cryptography. When? The Global Risk Institute surveyed 40 quantum computing experts in 2024. Their estimates put the probability of such a machine at 14 percent within 10 years and 31 percent within 15. Nobody knows exactly, which is why the industry is moving now.

Bot traffic follows different adoption patterns, which Cloudflare didn't break out in detail. The 52 percent figure covers human traffic only.
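Hybrid key agreement is what lets the industry defend against harvest-now-decrypt-later without betting everything on new math: the handshake runs two key exchanges, one classical and one post-quantum (in today's deployed hybrid groups, typically X25519 paired with ML-KEM-768), and derives the session secret from both. The Python sketch below assumes a simplified model: random bytes stand in for the two shared secrets, and a single RFC 5869 HKDF-Extract step stands in for TLS 1.3's fuller key schedule. No real key exchange happens, and the concatenation order is this sketch's choice; each standardized hybrid group fixes its own.

```python
import hashlib
import hmac
import os


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869) with SHA-256: PRK = HMAC-SHA256(salt, IKM)."""
    if not salt:
        salt = bytes(hashlib.sha256().digest_size)  # RFC 5869 default: HashLen zeros
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hybrid_secret(classical_ss: bytes, pq_ss: bytes) -> bytes:
    """Concatenate both shared secrets, then extract one 32-byte key.

    Reproducing the output requires knowing BOTH inputs."""
    return hkdf_extract(b"", classical_ss + pq_ss)


# Placeholders only: random bytes stand in for an X25519 shared secret and
# an ML-KEM-768 shared secret. No actual key exchange is performed here.
x25519_ss = os.urandom(32)
mlkem_ss = os.urandom(32)
session_key = hybrid_secret(x25519_ss, mlkem_ss)

# Harvest now, decrypt later: suppose a future quantum computer recovers the
# X25519 secret from a recorded handshake. Without the ML-KEM secret, any
# guess for it produces a different key.
guess = hybrid_secret(x25519_ss, os.urandom(32))
print(len(session_key), session_key == guess)  # 32 False (barring a ~2**-256 fluke)
```

The design hedges in both directions: an attacker who later breaks X25519 still needs the ML-KEM secret, and anyone who finds a flaw in the newer ML-KEM still needs the X25519 secret.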

Cloudflare handles traffic for millions of websites. Not all websites. Traffic that routes elsewhere doesn't appear in these measurements. The company processes 81 million HTTP requests per second on average. That's a lot. It's not everything.

The AI crawler data has a specific limitation. Bots identify themselves through user-agent strings. Crawlers that lie about who they are, or route requests through residential proxies, don't show up in verified bot statistics. Some operators rotate infrastructure weekly. The evasion tactics evolve faster than they used to.
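One common way to separate honest crawlers from impostors is to ignore the self-reported user-agent string and check the connecting IP address instead. Google documents a reverse-then-forward DNS check for Googlebot: reverse-resolve the IP, confirm the hostname belongs to a Google crawler domain, then forward-resolve that hostname and confirm it maps back to the same IP. The Python sketch below implements that check with the standard `socket` module; the suffix list is simplified (Google's documentation lists additional domains for some fetchers), the forward lookup covers IPv4 only, and Cloudflare's own verification methods may differ. The demo uses stub resolvers with illustrative values so it runs offline.

```python
import socket
from typing import Callable, List

# Simplified suffix list; Google documents more domains for some fetchers.
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")


def dns_reverse(ip: str) -> str:
    """PTR lookup: IP address -> hostname."""
    return socket.gethostbyaddr(ip)[0]


def dns_forward(host: str) -> List[str]:
    """A-record lookup: hostname -> list of IPv4 addresses."""
    return socket.gethostbyname_ex(host)[2]


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], str] = dns_reverse,
    forward: Callable[[str], List[str]] = dns_forward,
) -> bool:
    """True only if the IP reverse-resolves to a Google crawler domain
    and that hostname forward-resolves back to the same IP."""
    try:
        host = reverse(ip).rstrip(".").lower()
        if not host.endswith(GOOGLE_CRAWLER_SUFFIXES):
            return False  # e.g. a residential ISP hostname
        return ip in forward(host)
    except OSError:  # socket.herror / socket.gaierror: lookup failed
        return False


# Offline demo: stub resolvers stand in for live DNS (values illustrative).
genuine = is_verified_googlebot(
    "66.249.66.1",
    reverse=lambda ip: "crawl-66-249-66-1.googlebot.com",
    forward=lambda host: ["66.249.66.1"],
)
impostor = is_verified_googlebot(  # UA string said "Googlebot"; the IP disagrees
    "203.0.113.7",
    reverse=lambda ip: "host-7.residential-isp.example",
    forward=lambda host: ["203.0.113.7"],
)
print(genuine, impostor)  # True False
```

The check catches a scraper that claims to be Googlebot from a residential IP. It does nothing about a scraper that claims to be an ordinary browser, which is exactly the traffic that slips out of verified-bot statistics.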

And this report shows what happened without explaining why. Traffic growth stayed flat until mid-August, then accelerated sharply. Attacks pivoted toward civil society organizations. Starlink adoption spiked in specific markets. These are patterns in search of explanations.

Google's crawler-search dependency creates a structural advantage that technical solutions won't easily undo. Publishers could theoretically develop a standard that allows Googlebot access for search indexing while blocking AI training. Google would need to implement it. No commercial incentive pushes them toward that.

The DOJ's proposed remedies in the search monopoly case included forcing Google to divest Chrome and share search data with competitors. Judge Mehta's September 2025 remedies decision declined to order the Chrome sale while requiring some data sharing. Neither the proposals nor the ruling addresses the crawler bundling. If Google maintains unified crawling while competitors negotiate separate access, the asymmetry persists regardless of what happens to the browser.

The 2026 data will show whether that consolidation accelerates. Or whether publishers, regulators, or technical standards bodies mount resistance that actually sticks.

Q: Why can't publishers block Google's AI training while keeping search indexing?

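A: Google uses a single crawler, Googlebot, for both search indexing and AI model training. There's no separate user-agent for AI crawling that publishers can block independently, and robots.txt directives apply to the whole bot. Google does offer a Google-Extended token for robots.txt, but it governs only whether crawled content trains Gemini models, not how Googlebot-crawled content feeds AI features in Search such as AI Overviews. Publishers could theoretically create a new standard allowing selective access, but Google would need to implement it, and it has no commercial reason to.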

Q: What is Cloudflare's Project Galileo?

A: Project Galileo provides free cybersecurity services to vulnerable organizations including human rights groups, journalists, nonprofits, and civic institutions. Cloudflare launched it in 2014. The program now protects organizations in over 111 countries. Qualifying groups receive DDoS protection, web application firewalls, and other security tools at no cost. The 2025 attack statistics on civil society organizations reflect traffic Galileo observed and mitigated.

Q: How does Starlink's performance actually compare to fiber?

A: Starlink typically delivers 50-200 Mbps download speeds with latency around 25-60 milliseconds. Average download speeds in Cloudflare's top European markets exceed 200 Mbps, and 300 Mbps in Spain, with fiber latency under 10 milliseconds. The latency gap matters most for real-time work such as online gaming and financial trading, where fiber remains significantly better. For basic browsing, email, and video calls, Starlink works fine.

Q: What's the difference between a government blackout and throttling?

A: A blackout cuts all connectivity. Throttling slows connections to the point of uselessness while maintaining technical connectivity. During throttling, login pages might load but authentication fails. Email clients spin indefinitely. Video won't buffer. Governments sometimes prefer throttling because it's harder to document and lets them claim the internet remains "available." Both methods appeared in 2025's 83 government-directed outages.

Q: How accurate is Cloudflare's AI crawler data?

A: Cloudflare identifies crawlers through user-agent strings, which bots self-report. Crawlers that misidentify themselves or route through residential proxies don't appear in verified statistics. Cloudflare handles 81 million HTTP requests per second on average, covering a large portion of web traffic, but not all websites use its services. The data represents what Cloudflare sees, not the entire internet.
