💡 TL;DR: The 30-Second Version

👉 GPT-5 cuts hallucinations with browsing: gpt-5-main 9.6% vs 12.9% for GPT-4o; gpt-5-thinking 4.5% vs 12.7% for o3.

📊 Without web access, SimpleQA hallucination rates rise: gpt-5-main 0.47; gpt-5-thinking 0.40; o3 0.46; GPT-4o 0.52.

🧭 ChatGPT enables browsing by default, while many API calls do not, making app answers look cleaner; keep retrieval on when correctness matters.

🧮 GPT-5 answers include fewer claims, shrinking the error surface: gpt-5-main averaged 5.9 vs 6.8 for GPT-4o; gpt-5-thinking 7.2 vs 7.7 for o3.

🛡️ OpenAI uses safe-completions and flags gpt-5-thinking as High capability in bio and chem under its Preparedness Framework, adding safeguards.

🚀 Accuracy now depends on configuration; expect default browsing, source links, and smarter routing to drive trust and adoption across apps.

OpenAI says GPT-5 hallucinates less. It does, under the right conditions. The system card shows a clear drop in factual errors when ChatGPT is allowed to browse the web and source answers. Turn browsing off, and the gains shrink fast. The story isn’t “problem solved.” It’s “know the conditions.”

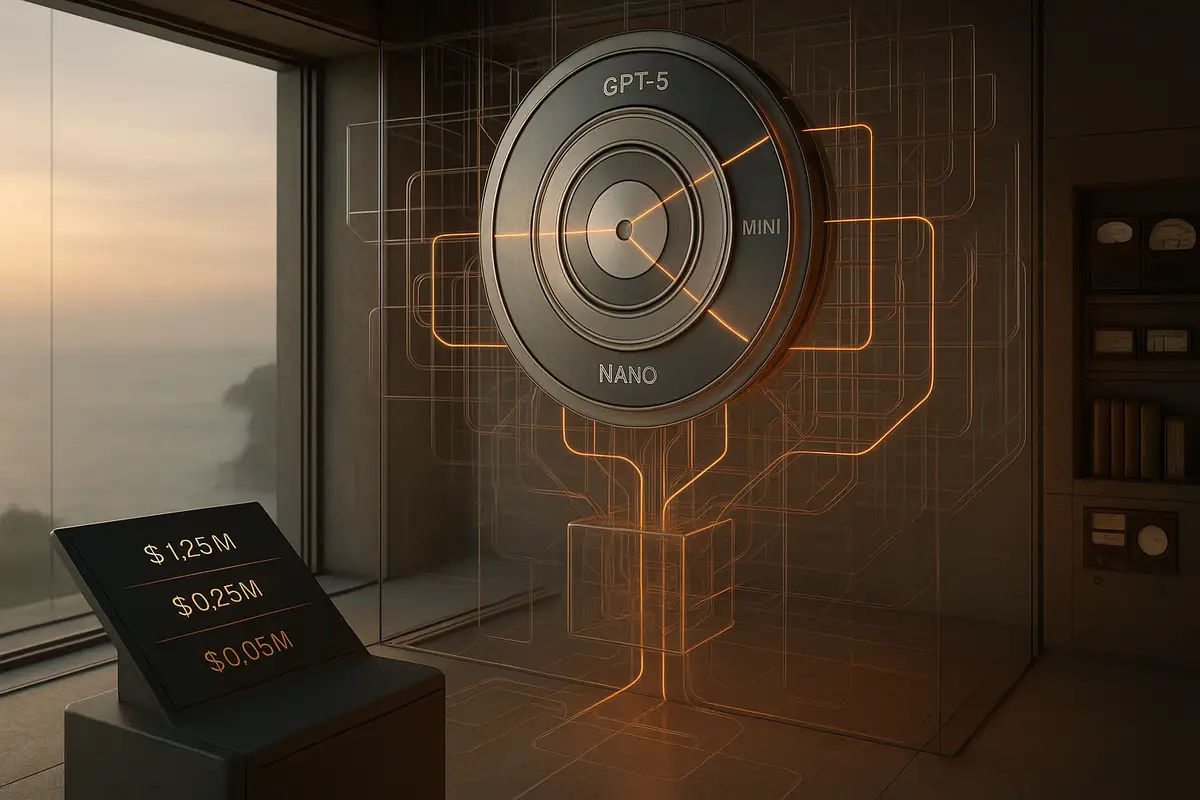

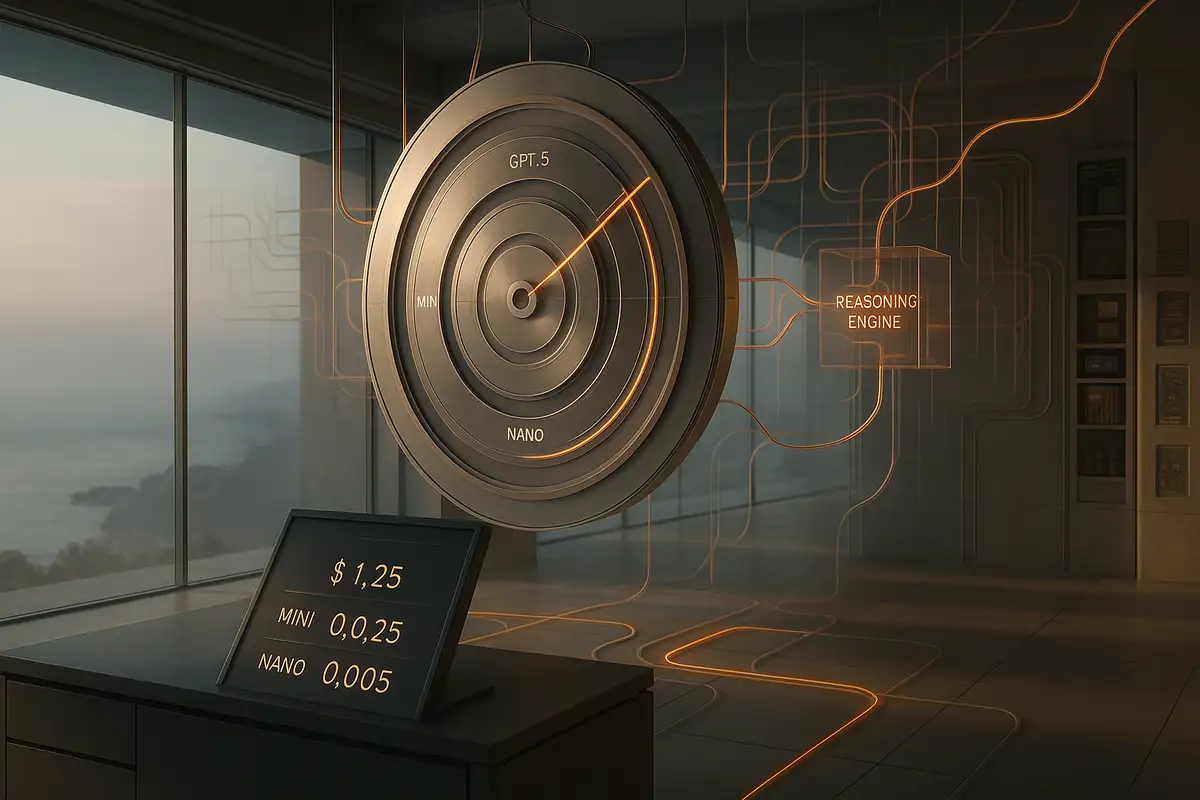

On production-like prompts with browsing enabled, GPT-5’s fast chat model (“gpt-5-main”) cut claim-level errors to 9.6% versus 12.9% for GPT-4o, and the deeper reasoning model (“gpt-5-thinking”) fell to 4.5% versus 12.7% for o3. Measured at the response level, “at least one major factual error” dropped to 11.6% for gpt-5-main (20.6% for GPT-4o) and 4.8% for gpt-5-thinking (22.0% for o3). That’s real progress.
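To put those figures on a common scale, it helps to compute both the percentage-point drop and the relative reduction. A minimal Python sketch; the only inputs are the numbers quoted above, and "relative reduction" here simply means (old - new) / old.

```python
# Figures quoted above from the GPT-5 system card (browsing enabled), in percent.
RESULTS = {
    "claim-level errors": {
        "gpt-5-main vs GPT-4o": (9.6, 12.9),
        "gpt-5-thinking vs o3": (4.5, 12.7),
    },
    "responses with a major error": {
        "gpt-5-main vs GPT-4o": (11.6, 20.6),
        "gpt-5-thinking vs o3": (4.8, 22.0),
    },
}


def relative_reduction(new: float, old: float) -> float:
    """Percent drop of `new` relative to `old` (positive means fewer errors)."""
    return (old - new) / old * 100


for metric, pairs in RESULTS.items():
    for label, (new, old) in pairs.items():
        drop_pts = old - new
        rel = relative_reduction(new, old)
        print(f"{metric:30} {label:22} {drop_pts:5.1f} pts  {rel:5.1f}% relative")
```

Run as written, gpt-5-main cuts claim-level errors by about a quarter (25.6%) and response-level errors by about 44% (43.7%) versus GPT-4o; gpt-5-thinking cuts them by roughly 65% and 78% versus o3.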

But hold that thought. On OpenAI’s SimpleQA—short fact questions, no web access—hallucination rates jump across the board: 0.47 for gpt-5-main, 0.40 for gpt-5-thinking, 0.46 for o3, 0.52 for GPT-4o (lower is better). In other words, the “one in ten” vibe disappears when the model can’t look things up.

Browsing is the hidden variable

OpenAI states it plainly: ChatGPT has browsing enabled by default, but many API queries do not. The evals match that split. With browsing on, GPT-5’s grader-audited accuracy improves; with browsing off, the thinking model still helps, but the main model’s error rate rises sharply on open-ended factual tasks. For users, the practical advice is simple: if correctness matters, keep retrieval/browsing on.

One more wrinkle: GPT-5’s answers tend to pack fewer claims. In the production eval, gpt-5-main averaged 5.9 correct claims per response vs 6.8 for GPT-4o; gpt-5-thinking hit 7.2 vs 7.7 for o3. Fewer claims per answer means a smaller target surface for errors. That isn’t necessarily bad: it reflects caution, but it does lower coverage.
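The "smaller target surface" argument can be made concrete with a toy model. Assuming (a simplification, not something the system card claims) that each claim is wrong independently with the same probability p, a response with n claims carries n·p expected errors and a 1 - (1 - p)^n chance of containing at least one. The 10% per-claim rate below is hypothetical.

```python
def expected_errors(n_claims: int, p_error: float) -> float:
    """Expected number of wrong claims in one response."""
    return n_claims * p_error


def p_at_least_one_error(n_claims: int, p_error: float) -> float:
    """Chance a response contains one or more wrong claims,
    assuming each claim is wrong independently with probability p_error."""
    return 1 - (1 - p_error) ** n_claims


P = 0.10  # hypothetical per-claim error rate, held fixed for both lengths
for n in (6, 7):
    print(n, round(expected_errors(n, P), 2), round(p_at_least_one_error(n, P), 3))
```

Under this toy model, trimming a response from 7 to 6 claims lowers the chance of any error from about 52% to about 47% without the model getting more accurate per claim. Real errors are not independent, so treat this as intuition rather than a reconstruction of the eval.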

OpenAI also leaned on an LLM grader (with web) to score factuality, reporting ~75% agreement with human raters and noting the grader tended to catch more errors than humans. Translation: the scoring was strict but consistent, which likely depresses headline accuracy a bit. That is preferable to grading on a curve.
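The simplest reading of "agreement" is percent agreement: the fraction of items on which grader and human reach the same verdict. A minimal sketch with made-up labels, chosen so the grader flags more errors than the humans while agreeing on 75% of items; the system card's exact agreement procedure may differ.

```python
from typing import Sequence


def percent_agreement(grader: Sequence[bool], human: Sequence[bool]) -> float:
    """Share of items where grader and human give the same verdict (True = error found)."""
    if len(grader) != len(human) or not grader:
        raise ValueError("need two non-empty label lists of equal length")
    matches = sum(g == h for g, h in zip(grader, human))
    return matches / len(grader)


# Hypothetical labels for 8 responses; True means "contains a factual error".
grader_labels = [True, True, False, True, False, True, False, True]
human_labels = [True, False, False, True, False, False, False, True]

print(percent_agreement(grader_labels, human_labels))  # 0.75
print(sum(grader_labels), "flagged by grader vs", sum(human_labels), "by humans")
```

Every disagreement in this toy set is a case where the grader flagged an error the humans did not, which is the pattern that makes a grader "stricter" without making it less consistent.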

“Less refusal, more safe help”

Beyond accuracy, GPT-5 ships a policy pivot: safe-completions replace brittle yes/no refusals. Instead of blocking mixed-intent requests outright, the model aims to respond safely—offering high-level guidance or alternatives while avoiding actionable harm. OpenAI’s write-up shows safer outputs on dual-use prompts (bio, cyber) and argues the mistakes that do slip through are less severe than with refusal-trained baselines. It’s a sensible direction for real-world use, where intent is murky and users need some help.

The company also flags gpt-5-thinking as High capability in bio/chem under its Preparedness Framework—not because they proved dangerous uplift, but as a precaution that triggers extra safeguards. Belt-and-suspenders, and a smart political read.

What the numbers really mean for users

Three things matter here.

First, “hallucination” depends on setup.

GPT-5’s biggest gains show up when the assistant can browse. On open factuality benchmarks like LongFact and FActScore, thinking models still lead with browsing off—but the fast main model degrades without retrieval. Tooling matters. Defaults matter.

Context beats model size.

Second, abstention is doing some work.

The lower claim counts per response suggest GPT-5 sometimes says less rather than guessing. That’s appropriate, and it’s part of why error rates drop.

If you need comprehensive answers, enable retrieval and nudge the model to enumerate sources explicitly.
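The abstention trade-off described here is the classic coverage-versus-risk curve from selective prediction. A toy sketch, assuming a hypothetical per-answer confidence score and an "answer only above a threshold" policy (nothing in the system card says GPT-5 works this way internally): raising the threshold lowers the error rate among answered questions but also lowers coverage.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    confidence: float  # hypothetical model confidence in [0, 1]
    correct: bool      # whether the answer would have been right


def selective_stats(items: list[Candidate], threshold: float) -> tuple[float, float]:
    """Answer only when confidence >= threshold.
    Returns (coverage, error rate among answered questions)."""
    answered = [c for c in items if c.confidence >= threshold]
    coverage = len(answered) / len(items)
    if not answered:
        return coverage, 0.0
    errors = sum(not c.correct for c in answered)
    return coverage, errors / len(answered)


# Hypothetical pool of 10 questions.
pool = [
    Candidate(0.95, True), Candidate(0.90, True), Candidate(0.85, True),
    Candidate(0.80, False), Candidate(0.75, True), Candidate(0.60, True),
    Candidate(0.55, False), Candidate(0.40, False), Candidate(0.35, True),
    Candidate(0.20, False),
]

for t in (0.0, 0.5, 0.8):
    cov, err = selective_stats(pool, t)
    print(f"threshold {t:.1f}: coverage {cov:.0%}, error rate {err:.0%}")
```

With this toy pool, coverage falls from 100% to 40% as the threshold rises, while the error rate among answered questions falls from 40% to 25%. Retrieval is the lever that lets you keep coverage up without pushing the error rate back up.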

Third, healthcare claims deserve granularity.

OpenAI highlights HealthBench gains for gpt-5-thinking. Good, but general hallucination stats aren’t clinical safety.

What matters is domain-specific performance, calibration, and how often the model defers to a professional or cites guidelines. Expect regulators and hospital buyers to ask for those specifics.

Strategy check: Open weight, controlled risk

Two days before the system card, OpenAI released gpt-oss-120b and gpt-oss-20b as open-weight models under Apache-2.0 with a usage policy. The model card says the 120B default doesn’t meet OpenAI’s thresholds for “High capability” in bio, cyber, or self-improvement—and even with adversarial fine-tuning, it didn’t cross those bars in their testing. This isn’t magnanimity; it’s a calibrated move: court developers, stay below frontier risk, and keep the real moat—GPT-5 + browsing + safety stack—behind the API.

That split matters for accuracy, too. The system-level pieces (routing between fast and thinking models, browsing, and safer-output training) drive much of GPT-5’s measured improvement, while an LLM grader does the measuring. It’s less about a single model and more about the stack.

The uncomfortable middle

A final, slightly awkward note: GPT-5’s progress doesn’t end the hallucination debate; it reframes it. When the assistant can fetch and verify, error rates mostly land in the single digits. When it can’t, SimpleQA hallucination rates sit at roughly 40 to 50 percent. That’s not a contradiction; it’s two usage modes. The real product question is whether the default experience keeps people in the safer lane and makes source-checking effortless.

OpenAI’s pitch—fewer errors, safer outputs, better instruction following—mostly holds up. But the caveat is structural: correctness lives in the ecosystem (router, tools, policies), not just the weights. If you change the environment, you change the model you think you’re getting.

Why this matters:

- Accuracy isn’t a trait; it’s a configuration. GPT-5’s biggest gains ride on browsing, routing, and safer output training. Turn those off, and you’re back near old error rates.

- The moat is the system, not just the model. Open-weight releases are developer-friendly, but the measurable reliability comes from the closed, tool-rich stack—and that’s the part OpenAI controls.

❓ Frequently Asked Questions

Q: How should I configure an app to reduce GPT-5 hallucinations?

A: Keep retrieval/browsing on by default, route hard factual tasks to the thinking model, and require the model to cite sources or enumerate evidence. Add guardrails that log unanswered queries rather than forcing guesses. This setup makes outputs more verifiable and keeps risky prompts in safer lanes.
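That checklist can be sketched as a small, provider-agnostic policy layer. Everything here is hypothetical: `RequestPlan`, `plan_request`, and `handle_answer` are illustrative names, the model strings are the system card's labels rather than API model IDs, and how `is_hard_factual` gets decided (a classifier, a heuristic, a user toggle) is left open. Wiring the plan into a real API call depends on your provider's SDK.

```python
import logging
from dataclasses import dataclass, field
from typing import Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("factuality")


@dataclass
class RequestPlan:
    model: str       # hypothetical label, e.g. the system card's "gpt-5-thinking"
    browsing: bool   # whether retrieval/web search is enabled for this request
    instructions: list[str] = field(default_factory=list)


def plan_request(query: str, is_hard_factual: bool) -> RequestPlan:
    """Hypothetical policy: browse by default, route hard factual work to the
    thinking model, and ask for citations instead of unsupported guesses."""
    plan = RequestPlan(
        model="gpt-5-thinking" if is_hard_factual else "gpt-5-main",
        browsing=True,
    )
    plan.instructions.append("Cite a source for every factual claim.")
    plan.instructions.append(
        "If the sources do not support an answer, say so instead of guessing."
    )
    return plan


def handle_answer(query: str, answer: Optional[str]) -> str:
    """Log unanswered queries for review rather than forcing a guess."""
    if not answer:
        log.info("unanswered query logged for review: %r", query)
        return "No well-sourced answer found."
    return answer


plan = plan_request("When did the Treaty of Westphalia get signed?", is_hard_factual=True)
print(plan.model, plan.browsing)
```

Keeping the policy in one function makes the defaults (browsing on, citations required) explicit and testable, instead of scattered across prompts.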

Q: Did the accuracy gains come from the model alone?

A: No. The stack mattered: routing between fast/thinking models, web browsing, and safer output training. The scores themselves came from an LLM grader that agreed with humans about 75% of the time and caught more errors, making scores stricter but more consistent across evaluations.

Q: What’s the difference between claim-level and response-level errors here?

A: Claim-level measures the share of individual statements that are wrong; response-level asks, “Was there at least one major error?” On production-like prompts, gpt-5-main had 9.6% claim-level errors vs 12.9% for GPT-4o and 11.6% response-level errors vs 20.6%; gpt-5-thinking had 4.5% vs 12.7% for o3 at the claim level and 4.8% vs 22.0% at the response level.
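Both metrics can come from the same graded data. A minimal sketch, assuming each response is stored as a list of per-claim verdicts (True = claim judged factually wrong). It pools claims across responses for the claim-level rate; averaging per-response rates is another reasonable choice, and the system card's exact aggregation may differ. The graded data is made up.

```python
# Each inner list holds per-claim verdicts for one response: True = wrong claim.
Responses = list[list[bool]]


def claim_level_error_rate(responses: Responses) -> float:
    """Wrong claims divided by all claims, pooled across responses."""
    total = sum(len(r) for r in responses)
    wrong = sum(sum(r) for r in responses)
    return wrong / total


def response_level_error_rate(responses: Responses) -> float:
    """Share of responses containing at least one wrong claim."""
    return sum(any(r) for r in responses) / len(responses)


# Hypothetical grading of four responses.
graded = [
    [False, False, True, False],        # one bad claim out of four
    [False, False, False],
    [False, True, True, False, False],  # two bad claims out of five
    [False, False],
]
print(claim_level_error_rate(graded))     # 3 wrong out of 14 claims
print(response_level_error_rate(graded))  # 2 of 4 responses -> 0.5
```

The gap between the two numbers (about 21% vs 50% here) is why response-level rates usually run higher: a single bad claim taints the whole answer.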

Q: Why does GPT-5 sometimes give shorter answers?

A: It sometimes abstains instead of guessing. In the production eval, gpt-5-main averaged 5.9 correct claims per answer vs 6.8 for GPT-4o; gpt-5-thinking 7.2 vs 7.7 for o3. Fewer claims shrink the error surface. The trade-off: slightly lower coverage unless you prompt for explicit enumeration.

Q: What changes with “safe-completions” compared with refusals?

A: Instead of blanket “no,” GPT-5 offers high-level, non-actionable guidance and alternatives on mixed-intent prompts. You get some help without step-by-step misuse. It reduces unhelpful refusals while aiming to lower the severity of mistakes that slip through.

Q: Why was gpt-5-thinking flagged as High capability in bio/chem?

A: As a precaution under OpenAI’s Preparedness Framework. The label triggers extra safeguards; it wasn’t shown to cause dangerous uplift in their tests. In practice, you should expect more friction and scrutiny on sensitive biology and chemistry tasks.

Q: What’s the strategy behind the open-weight releases?

A: OpenAI released gpt-oss-120b and gpt-oss-20b under Apache-2.0 with a usage policy. In its default form, gpt-oss-120b stayed below “High capability” thresholds in bio/cyber/self-improvement, and adversarial fine-tuning didn’t push it over in OpenAI’s testing. It courts developers while keeping frontier reliability tied to the closed GPT-5 + tools stack.

Q: If I’m in healthcare, how should I read these results?

A: Treat general hallucination drops as necessary but not sufficient. Demand domain-specific evals, calibration checks, source citation policies, and clear deferral behavior to clinicians or guidelines. Track refusal/abstention rates and require retrieval for any patient-facing advice.
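Two of those checks are straightforward to automate. A minimal sketch, assuming you log a confidence score and a graded correctness verdict for each answer (how confidence is obtained is left open): it computes expected calibration error (ECE) with equal-width bins, plus an abstention rate where `None` marks a declined or deferred answer. The bin count and the `None` convention are illustrative choices, not a clinical standard.

```python
from typing import Optional, Sequence


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 5
) -> float:
    """Weighted average gap between mean confidence and accuracy across equal-width bins."""
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence 1.0 lands in the top bin
        bins[idx].append((c, ok))
    total = len(confidences)
    ece = 0.0
    for b in bins:
        if b:
            avg_conf = sum(c for c, _ in b) / len(b)
            accuracy = sum(ok for _, ok in b) / len(b)
            ece += len(b) / total * abs(avg_conf - accuracy)
    return ece


def abstention_rate(answers: Sequence[Optional[str]]) -> float:
    """Share of queries where the system declined or deferred (logged as None)."""
    return sum(a is None for a in answers) / len(answers)


# Hypothetical log: two confident correct answers, two wrong ones.
print(expected_calibration_error([0.9, 0.9, 0.7, 0.3], [True, True, False, False]))
print(abstention_rate(["see guideline X", None, "refer to clinician", None]))
```

An ECE near zero means stated confidence tracks actual accuracy; tracking it alongside abstention shows whether a model defers on exactly the cases where it would have been wrong.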