Introduction

💡 TL;DR: The 30-Second Version

🤖 AI agents are autonomous programs that use 4 core components (Brain, Memory, Tools, Planning) to complete tasks without constant human guidance.

🔄 Agents operate in a Think-Act-Observe loop, analyzing situations, taking actions, and learning from results until tasks are complete.

👨‍💻 The tutorial provides code examples for building a customer support agent, covering setup, memory management, and tool integration.

🚀 Advanced patterns include multi-agent teams and self-improving agents that learn from mistakes through reflection and refinement.

🛠️ The deployment guide covers Docker containerization, FastAPI endpoints, and monitoring, followed by a comparison of frameworks such as LangChain and CrewAI.

📈 Start simple with basic functionality, then add complexity based on user needs rather than building everything upfront.

AI agents are programs designed to think, act, and learn autonomously. Unlike simple chatbots, they utilize external tools, retain context, and handle complex tasks independently. Imagine having a digital assistant capable of performing real-world actions.

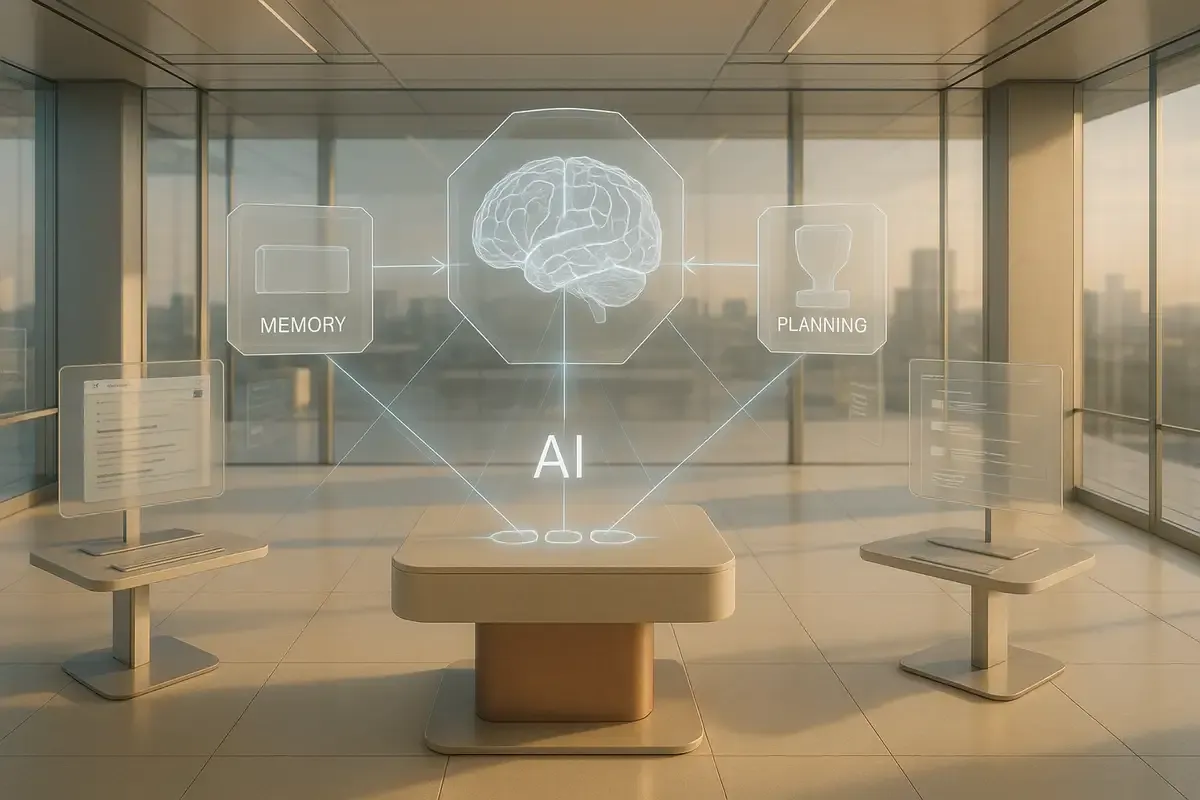

Core Components of AI Agents

Every effective AI agent comprises four key elements:

- Brain (LLM): Decision-making engine (e.g., GPT-4, Claude, Mistral 7B).

- Memory: Stores past interactions across three levels:
  - Short-term: immediate context.
  - Working: current session details.
  - Long-term: persistent knowledge.

- Tools: Allow agents to interact externally (web search, email, database operations).

- Planning: Breaks down tasks and coordinates actions logically.

The Think-Act-Observe Loop

AI agents operate using a straightforward loop:

- Think: Evaluate current state and decide actions.

- Act: Perform actions or generate responses.

- Observe: Review outcomes and update the state accordingly.

This cycle repeats until the task is accomplished or additional input is required.

Building a Basic Customer Support Agent

Step 1: Environment Setup

Install dependencies:

```bash
pip install langchain openai python-dotenv
```

Store your API key in a .env file, and keep that file out of version control:

```
OPENAI_API_KEY=your_secret_key_here
```
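Here is a minimal sketch of reading the key at startup. It assumes the .env file sits in the directory you launch the agent from. `load_dotenv` is python-dotenv's loader; `get_api_key` is a hypothetical helper. If python-dotenv is not installed, the code falls back to variables already set in the environment.

```python
import os

try:
    # python-dotenv copies KEY=value pairs from a .env file into os.environ
    from dotenv import load_dotenv
except ImportError:  # fall back to variables already set in the environment
    load_dotenv = None


def get_api_key(name="OPENAI_API_KEY", dotenv_path=".env"):
    """Return the API key, failing fast with a clear message if it is missing."""
    if load_dotenv is not None:
        # Loads ./.env if present; existing environment variables win by default
        load_dotenv(dotenv_path)
    key = os.getenv(name)
    if not key:
        raise RuntimeError(f"{name} is not set; add it to .env or the environment")
    return key
```

Failing fast at startup surfaces a missing key immediately, instead of on the first customer request.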

Step 2: Define Agent Goals and Constraints

Define your agent's goal and constraints explicitly:

```python
class CustomerSupportAgent:
    def __init__(self):
        self.name = "CustomerSupportAgent"
        # What the agent is trying to achieve
        self.goal = "Efficiently resolve customer inquiries"
        # Rules the agent must respect while pursuing the goal
        self.constraints = [
            "Protect customer privacy",
            "Escalate complex issues",
            "Maintain professional communication",
        ]
```
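Goals and constraints only shape behavior once they reach the model. One common approach, sketched below, is to render them into a system prompt. `AgentSpec` and `build_system_prompt` are hypothetical names, and the prompt wording is an assumption you would tune for your model.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSpec:
    name: str
    goal: str
    constraints: list = field(default_factory=list)


def build_system_prompt(spec: AgentSpec) -> str:
    """Render the goal and constraints as a system prompt for the LLM."""
    rules = "\n".join(f"- {c}" for c in spec.constraints)
    return (
        f"You are {spec.name}.\n"
        f"Goal: {spec.goal}\n"
        f"Always follow these rules:\n{rules}"
    )


spec = AgentSpec(
    name="CustomerSupportAgent",
    goal="Efficiently resolve customer inquiries",
    constraints=[
        "Protect customer privacy",
        "Escalate complex issues",
        "Maintain professional communication",
    ],
)
print(build_system_prompt(spec))
```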

Step 3: Implement Memory

Keep the three memory levels in separate structures:

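```python
from collections import deque

class AgentMemory:
    def __init__(self, long_term_store, short_term_size=10):
        # Short-term: the most recent exchanges, oldest dropped automatically
        self.short_term = deque(maxlen=short_term_size)
        # Working memory: details for the current session
        self.working_memory = {}
        # Long-term: any persistent store with an add() method, such as a
        # vector database client (VectorDatabase was a placeholder)
        self.long_term = long_term_store

    def store_interaction(self, query, response, importance_score):
        self.short_term.append((query, response))
        self.working_memory[query] = response
        # Persist only interactions judged important enough to keep
        if importance_score > 0.8:
            self.long_term.add(query, response)
```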
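The long-term store is left abstract above. For prototyping without an embedding model, a toy store like the one below can stand in. It exposes the same `add(query, response)` method the memory class calls, plus a `search` method for recall. It uses bag-of-words counts and cosine similarity instead of embeddings, so it matches shared words, not meaning. `InMemoryVectorStore` is a hypothetical name.

```python
import math
import re
from collections import Counter


class InMemoryVectorStore:
    """Toy stand-in for a vector database: word-count vectors + cosine similarity."""

    def __init__(self):
        self.entries = []  # list of (vector, query, response)

    @staticmethod
    def _vectorize(text):
        return Counter(re.findall(r"[a-z0-9]+", text.lower()))

    @staticmethod
    def _cosine(a, b):
        dot = sum(a[t] * b[t] for t in a if t in b)
        norm_a = math.sqrt(sum(v * v for v in a.values()))
        norm_b = math.sqrt(sum(v * v for v in b.values()))
        return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

    def add(self, query, response):
        self.entries.append((self._vectorize(query), query, response))

    def search(self, text, k=3):
        """Return up to k stored (query, response) pairs sharing words with text."""
        vec = self._vectorize(text)
        scored = [(self._cosine(vec, v), q, r) for v, q, r in self.entries]
        scored.sort(key=lambda item: item[0], reverse=True)
        return [(q, r) for score, q, r in scored[:k] if score > 0]


store = InMemoryVectorStore()
store.add("How do I reset my password?", "Use the 'Forgot password' link.")
store.add("Where is my order?", "Check the tracking page.")
print(store.search("I forgot my password", k=1))
```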

Step 4: Integrate Tools

Register the tools your agent can call, and report failures as text the agent can react to:

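```python
class ToolManager:
    def __init__(self):
        # WebSearchTool, EmailTool, and DatabaseTool are placeholders for your
        # own classes; each is assumed to expose execute(parameters)
        self.tools = {
            'web_search': WebSearchTool(),
            'email': EmailTool(),
            'database': DatabaseTool(),
        }

    def execute_tool(self, tool_name, parameters):
        if tool_name not in self.tools:
            return f"Error: Tool {tool_name} unavailable"
        try:
            return self.tools[tool_name].execute(parameters)
        except Exception as e:
            # Return the error as text so the agent can observe it and adapt
            return f"Execution failed: {e}"
```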
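The tool manager still needs to know which tool the model wants. One common convention, assumed here and not required by any framework, is to ask the LLM to reply with JSON such as `{"tool": "web_search", "parameters": {...}}`. The sketch below parses and validates that reply before dispatch. `parse_tool_call` is a hypothetical helper.

```python
import json


def parse_tool_call(llm_output, allowed_tools):
    """Parse a JSON tool call emitted by the LLM.

    Returns (tool_name, parameters), or raises ValueError with a message the
    agent can feed back to the model on its next iteration.
    """
    try:
        call = json.loads(llm_output)
    except json.JSONDecodeError as e:
        raise ValueError(f"Tool call is not valid JSON: {e}") from e
    if not isinstance(call, dict):
        raise ValueError("Tool call must be a JSON object")
    name = call.get("tool")
    params = call.get("parameters", {})
    if name not in allowed_tools:
        raise ValueError(f"Unknown tool: {name!r}")
    if not isinstance(params, dict):
        raise ValueError("'parameters' must be a JSON object")
    return name, params


name, params = parse_tool_call(
    '{"tool": "web_search", "parameters": {"query": "refund policy"}}',
    allowed_tools={"web_search", "email", "database"},
)
print(name, params)  # web_search {'query': 'refund policy'}
```

Rejecting malformed calls before `execute_tool` runs keeps parsing errors separate from genuine tool failures.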

Step 5: Core Operational Loop

The central loop ties Think, Act, and Observe together, with an iteration cap as a safety net:

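```python
class AgentLoop:
    def __init__(self, llm, max_iterations=10):
        self.llm = llm                        # any client exposing generate(prompt) -> str
        self.max_iterations = max_iterations  # hard cap that prevents endless loops

    def run(self, initial_input):
        # AgentState, act(), observe(), is_complete(), and should_stop()
        # are application-specific and not shown here
        state = AgentState(initial_input)
        iteration = 0
        while iteration < self.max_iterations and not self.is_complete(state):
            analysis = self.think(state)                # Think
            action_result = self.act(analysis)          # Act
            state = self.observe(action_result, state)  # Observe
            iteration += 1
            if self.should_stop(state):
                break
        return state.final_result

    def think(self, state):
        prompt = f"Situation: {state.context}\nGoal: {state.goal}\nNext steps?"
        return self.llm.generate(prompt)
```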
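To see the loop run end to end without an API key, the sketch below swaps the LLM for a scripted stand-in. The `FINISH:` prefix and the `tool_name argument` reply format are assumptions made for this example, not a LangChain or OpenAI convention. `State`, `ScriptedLLM`, and `run_agent` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class State:
    goal: str
    context: list = field(default_factory=list)  # (decision, result) history
    final_result: Optional[str] = None
    done: bool = False


class ScriptedLLM:
    """Stand-in for a real model: returns canned decisions in order."""

    def __init__(self, replies):
        self.replies = iter(replies)

    def generate(self, prompt):
        return next(self.replies, "FINISH: no more ideas")


def run_agent(llm, tools, goal, max_iterations=10):
    state = State(goal=goal)
    for _ in range(max_iterations):  # hard iteration cap
        # Think: ask the model for the next step given the goal and history
        decision = llm.generate(f"Goal: {state.goal}\nHistory: {state.context}")
        if decision.startswith("FINISH:"):  # explicit exit criterion
            state.final_result = decision.removeprefix("FINISH:").strip()
            state.done = True
            break
        # Act: run the named tool, or report an unknown tool as an error
        tool_name, _, arg = decision.partition(" ")
        tool = tools.get(tool_name)
        result = tool(arg) if tool else f"Error: unknown tool {tool_name}"
        # Observe: record the outcome so the next Think step can use it
        state.context.append((decision, result))
    return state


tools = {"lookup_order": lambda order_id: f"Order {order_id} shipped yesterday"}
llm = ScriptedLLM(["lookup_order 1234", "FINISH: Your order 1234 shipped yesterday."])
state = run_agent(llm, tools, goal="Tell the customer where order 1234 is")
print(state.final_result)  # Your order 1234 shipped yesterday.
```

Swapping `ScriptedLLM` for a real client that exposes `generate(prompt)` leaves the loop unchanged. The iteration cap still guarantees termination if the model never emits `FINISH:`.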

Advanced Patterns

Multi-Agent Collaboration

A coordinator agent can split a task, delegate the subtasks to specialists, and merge their results:

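```python
class MultiAgentSystem:
    def __init__(self):
        # ResearchAgent, WritingAgent, ReviewAgent, and CoordinatorAgent are
        # placeholders for your own agent classes
        self.agents = {
            'researcher': ResearchAgent(),
            'writer': WritingAgent(),
            'reviewer': ReviewAgent(),
            'coordinator': CoordinatorAgent(),
        }

    def solve_task(self, task):
        # The coordinator maps agent names to subtasks
        assignments = self.agents['coordinator'].delegate(task)
        # Each specialist works on its own subtask; results are keyed by name
        results = {
            name: self.agents[name].execute(subtask)
            for name, subtask in assignments.items()
        }
        # The coordinator merges the partial results into one answer
        return self.agents['coordinator'].synthesize(results)
```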
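In the dictionary comprehension above, each specialist works in isolation, so the writer never sees what the researcher found. When subtasks depend on each other, one alternative is a sequential pipeline that passes each agent's output to the next. This is a different pattern from the coordinator design. `RoleAgent` and `run_pipeline` are hypothetical names; a real `execute` would prompt an LLM with its role and the accumulated notes.

```python
class RoleAgent:
    """Hypothetical specialist; a real one would prompt an LLM with its role."""

    def __init__(self, role):
        self.role = role

    def execute(self, subtask, context=None):
        # Copy the notes received so far and add this agent's contribution
        notes = list(context or [])
        notes.append(f"{self.role}: {subtask}")
        return notes


def run_pipeline(agents, task, order=("researcher", "writer", "reviewer")):
    """Run agents in sequence, handing each one the previous agent's output."""
    context = None
    for name in order:
        context = agents[name].execute(task, context)
    return context


agents = {role: RoleAgent(role) for role in ("researcher", "writer", "reviewer")}
print(run_pipeline(agents, "refund policy"))
# ['researcher: refund policy', 'writer: refund policy', 'reviewer: refund policy']
```

The trade-off is that a pipeline is easier to reason about but runs strictly in order. Independent subtasks, as in `solve_task`, could run in parallel.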

Self-Improving Agents

A reflective agent critiques its own draft and revises it when the critique finds problems:

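```python
class ReflectiveAgent:
    # generate_response, critique_response, and refine_response are assumed
    # to be LLM-backed methods defined elsewhere in the class
    def process_with_reflection(self, query):
        response = self.generate_response(query)
        # Ask the model to review its own draft against the original query
        critique = self.critique_response(response, query)
        if critique.needs_improvement:
            return self.refine_response(response, critique.suggestions)
        return response
```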
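In production, the critique and refine steps would each be an LLM call with its own prompt. The rule-based versions below are hypothetical stand-ins that make the control flow testable: the critic flags required terms the draft omits, and the reviser appends them. The sketch also extends the single pass above to repeat up to `max_rounds` times, so a critic that is never satisfied cannot loop forever.

```python
from dataclasses import dataclass, field


@dataclass
class Critique:
    needs_improvement: bool
    suggestions: list = field(default_factory=list)


def critique_response(response, required_terms):
    """Toy critic: flags required terms the draft forgot to mention."""
    missing = [t for t in required_terms if t.lower() not in response.lower()]
    return Critique(needs_improvement=bool(missing), suggestions=missing)


def refine_response(response, suggestions):
    """Toy reviser: appends a note covering each missing term."""
    return response + " " + " ".join(f"Note: {s}." for s in suggestions)


def process_with_reflection(draft, required_terms, max_rounds=2):
    response = draft
    for _ in range(max_rounds):  # bounded critique/refine rounds
        critique = critique_response(response, required_terms)
        if not critique.needs_improvement:
            break
        response = refine_response(response, critique.suggestions)
    return response


print(process_with_reflection("We can refund your order.", ["refund", "14 days"]))
# We can refund your order. Note: 14 days.
```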

Deployment

Containerization

Standardize deployment using Docker:

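```dockerfile
FROM python:3.10-slim
WORKDIR /app
# Copy and install dependencies first so Docker can cache this layer
# between code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
EXPOSE 8000
# requirements.txt must include fastapi and uvicorn; main:app is the
# FastAPI instance named `app` in main.py
CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]
```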

API Exposure

Expose the agent through a small FastAPI service:

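```python
from fastapi import FastAPI
from pydantic import BaseModel

app = FastAPI()
# Assumes your agent class implements process(message) -> str
agent = CustomerSupportAgent()

class QueryRequest(BaseModel):
    message: str

# A plain `def` endpoint runs in FastAPI's threadpool, so a blocking
# agent call does not stall the event loop
@app.post("/chat")
def chat(request: QueryRequest):
    return {"response": agent.process(request.message)}

@app.get("/health")
async def health():
    return {"status": "healthy"}
```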

Monitoring

Log every agent action as a structured record:

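```python
import hashlib
import json
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)  # INFO records are dropped by default
logger = logging.getLogger("agent")

def log_agent_action(action, user_id, success=True):
    logger.info(json.dumps({
        'timestamp': datetime.now(timezone.utc).isoformat(),
        # Built-in hash() of a string is salted per process, so it is not a
        # stable ID; a SHA-256 digest pseudonymizes users consistently
        'user_id': hashlib.sha256(str(user_id).encode()).hexdigest()[:16],
        'action': action,
        'success': success,
        'agent_version': '1.2.3',
    }))
```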
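Structured logs record what happened; latency shows how long each step took. The sketch below is a standard-library decorator that wraps any tool or LLM call and logs its duration and outcome. `monitored` and the wrapped `web_search` stub are hypothetical names.

```python
import functools
import logging
import time

logger = logging.getLogger("agent.metrics")


def monitored(action_name):
    """Decorator that logs latency and success/failure for each call."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
            except Exception:
                elapsed_ms = (time.perf_counter() - start) * 1000
                logger.warning("%s failed after %.1f ms", action_name, elapsed_ms)
                raise  # re-raise so callers still see the original error
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info("%s succeeded in %.1f ms", action_name, elapsed_ms)
            return result
        return wrapper
    return decorator


@monitored("web_search")
def web_search(query):
    return f"results for {query}"  # hypothetical stand-in for a real tool
```

Success records are emitted at INFO, so they only appear once logging is configured at that level.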

Framework Recommendations

- LangChain: Comprehensive, beginner-friendly, extensive community.

- CrewAI: Ideal for coordinated multi-agent setups.

- LlamaIndex: Best for sophisticated data retrieval needs.

- n8n: Visual workflows and minimal coding.

Common Issues and Quick Solutions

| Issue | Solution |
|---|---|
| Looping or stalled behavior | Set iteration limits and clear exit criteria. |
| Excessive memory usage | Implement compression and regular pruning. |
| Unpredictable tool failures | Add error handling with fallback mechanisms. |
| Slow response times | Optimize with smaller models or caching. |
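The advice on unpredictable tool failures can be sketched as retries with exponential backoff, followed by a fallback such as a cached or canned answer. `call_with_retry` is a hypothetical helper. In practice you would catch only the exception types your tools actually raise.

```python
import time


def call_with_retry(primary, fallback=None, retries=3, base_delay=0.5):
    """Call primary(); retry with exponential backoff, then try fallback()."""
    last_error = None
    for attempt in range(retries):
        try:
            return primary()
        except Exception as e:  # narrow this to your tools' real error types
            last_error = e
            if attempt < retries - 1:
                # Waits base_delay, 2 * base_delay, 4 * base_delay, ...
                time.sleep(base_delay * 2 ** attempt)
    if fallback is not None:
        return fallback()
    raise RuntimeError(f"All {retries} attempts failed") from last_error
```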

Actionable Checklist

- [ ] Clearly define agent's objective

- [ ] Choose a suitable framework

- [ ] Set up environment and dependencies

- [ ] Develop simple agent prototype

- [ ] Incrementally add complexity

- [ ] Conduct realistic testing

- [ ] Deploy and monitor

- [ ] Continuously refine based on feedback

Core Advice: Begin simply, ensure reliability, then incrementally enhance complexity based on actual user needs and feedback.

❓ Frequently Asked Questions

Q: How much does it cost to run an AI agent?

A: Basic agents using GPT-3.5 cost around $0.002 per 1,000 tokens (roughly 750 words). A customer service agent handling 100 queries daily runs $5-15 monthly. GPT-4 costs 10-15x more but provides better reasoning for complex tasks.
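Cost scales linearly with traffic, so a quick estimate is queries per day × tokens per query × days × price per token. The sketch uses the $0.002 per 1,000 tokens figure above. `monthly_cost` is a hypothetical helper, and 1,500 tokens per query (prompt plus reply) is an assumption. At that price, the $5-15 range corresponds to roughly 800 to 2,500 tokens per query. Providers often charge input and output tokens at different rates, so treat the result as a rough estimate.

```python
def monthly_cost(queries_per_day, tokens_per_query, price_per_1k_tokens, days=30):
    """Estimate monthly spend from traffic and per-token pricing."""
    total_tokens = queries_per_day * tokens_per_query * days
    return total_tokens / 1000 * price_per_1k_tokens


# 100 queries/day at ~1,500 tokens each, $0.002 per 1K tokens
print(f"${monthly_cost(100, 1500, 0.002):.2f}")  # $9.00
```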

Q: What programming skills do I need to build an AI agent?

A: You need basic Python knowledge and some experience calling APIs. Frameworks like LangChain assume you understand functions, classes, and HTTP requests. No machine learning expertise is needed: the heavy lifting happens through API calls to pre-trained models.

Q: How long does it take to build a working AI agent?

A: A simple agent takes 2-4 hours using frameworks like LangChain. Production-ready agents with proper error handling, monitoring, and deployment typically require 1-2 weeks for experienced developers.

Q: How fast do AI agents respond to user requests?

A: Response times vary by model and complexity. Simple queries using GPT-3.5 return in 1-3 seconds. Complex tasks requiring multiple tool calls can take 10-30 seconds. Small local models avoid network round-trips and can respond quickly on capable hardware, but usually with reduced capabilities.

Q: What security risks come with AI agents?

A: Main risks include prompt injection attacks, memory poisoning, and privilege escalation. Agents with database access or email capabilities need input validation, rate limiting, and scoped permissions to prevent misuse.
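Scoped permissions are easiest to enforce in ordinary code, outside the model. That way, an injected instruction cannot talk the agent into calling a tool it was never granted. This is a minimal sketch that assumes a hypothetical `ROLE_PERMISSIONS` table and uses a simple length limit as the input check. It complements, but does not replace, rate limiting and careful prompt design.

```python
ROLE_PERMISSIONS = {
    # Hypothetical roles; grant each the smallest tool set it needs
    "support_bot": {"web_search", "read_order"},
    "admin_bot": {"web_search", "read_order", "send_email", "update_order"},
}


def authorize_tool_call(role, tool_name, parameters, max_param_length=500):
    """Reject tool calls outside the role's scope or with oversized input."""
    allowed = ROLE_PERMISSIONS.get(role, set())  # unknown roles get no tools
    if tool_name not in allowed:
        raise PermissionError(f"{role!r} may not call {tool_name!r}")
    for key, value in parameters.items():
        if isinstance(value, str) and len(value) > max_param_length:
            raise ValueError(f"Parameter {key!r} exceeds {max_param_length} characters")
    return True
```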

Q: Can AI agents handle multiple users simultaneously?

A: Yes, with proper architecture. Cloud deployments using containers can scale to thousands of concurrent users. Each user needs isolated memory and session management to prevent data leakage between conversations.
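Here is a minimal sketch of per-user isolation within a single process. Each user ID gets its own bounded history, and a lock guards concurrent requests. `SessionStore` is a hypothetical name. Once the service runs as several containers, this in-process dictionary would need to move to a shared store, such as a database or cache keyed by user ID, so that every replica sees the same sessions.

```python
import threading
from collections import defaultdict, deque


class SessionStore:
    """Keeps each user's conversation history separate and thread-safe."""

    def __init__(self, max_turns=20):
        self._lock = threading.Lock()
        # Each new user ID gets its own bounded deque on first write
        self._histories = defaultdict(lambda: deque(maxlen=max_turns))

    def append(self, user_id, role, text):
        with self._lock:
            self._histories[user_id].append((role, text))

    def history(self, user_id):
        with self._lock:
            # .get() avoids creating empty sessions for unknown users
            return list(self._histories.get(user_id, ()))
```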

Q: Which framework should beginners choose?

A: LangChain offers the best starting point with extensive documentation and 500+ pre-built tools. CrewAI works better for multi-agent scenarios. n8n suits non-programmers who prefer visual workflow builders.

Q: Do I need to train my own AI model?

A: No. Most agents use pre-trained models through APIs (OpenAI, Anthropic, etc.). You only customize the agent's behavior through prompts, tools, and memory systems. Training custom models requires significant resources and expertise.