Three professors bet diffusion beats autoregression for speed. Microsoft, Nvidia back parallel token generation

Inception just raised $50 million to prove language models have been generating text wrong. The Stanford-led startup claims its diffusion models deliver code 10 times faster than GPT or Claude.

Menlo Ventures led the round. Microsoft's M12, Nvidia's NVentures, Snowflake, and Databricks piled in. Andrew Ng and Andrej Karpathy wrote angel checks. The syndicate signals enterprise buyers want alternatives to the OpenAI/Anthropic duopoly, especially if those alternatives slash latency.

The Breakdown

• Inception raised $50M to commercialize diffusion models that generate all tokens simultaneously, not sequentially like GPT

• Mercury hits 1,000 tokens/second versus 50-200 for traditional models, potentially slashing AI infrastructure costs 90%

• AWS Bedrock and Microsoft partnerships provide distribution, but Copilot's 90% Fortune 100 penetration poses an adoption challenge

• Success depends on whether parallel generation maintains quality at scale when handling messy production code

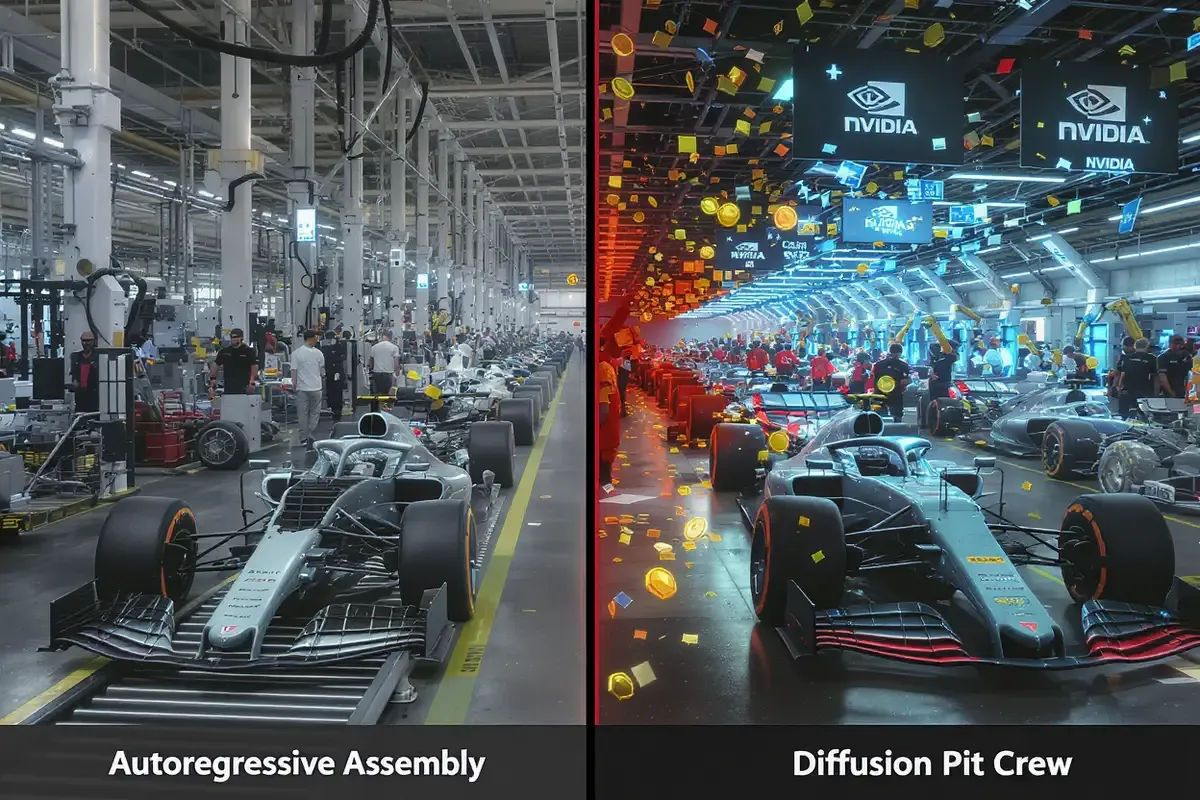

The core bet: diffusion models, which power Midjourney and Stable Diffusion for images, work better than autoregression for text. CEO Stefano Ermon, a Stanford CS professor who helped pioneer diffusion techniques, frames it as a physics problem. Autoregressive models like GPT generate one token at a time, each waiting for the last. Diffusion models refine all tokens simultaneously. Think factory line versus pit crew.

The parallel processing pitch

Mercury, Inception's flagship model, reportedly hits 1,000 tokens per second on Nvidia H100s. For context, most production models deliver 50-200 tokens per second, which puts the claimed speedup somewhere between 5x and 20x. If accurate, that's not an incremental improvement. It's architectural disruption.

The speed comes from parallelism. While ChatGPT calculates token 47, then 48, then 49, Mercury refines tokens 47 through 500 simultaneously. Each refinement step improves the entire sequence. The approach trades sequential precision for parallel throughput.
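The contrast can be sketched by counting sequential model passes. This is a toy illustration, not Mercury's actual algorithm: the function names and the choice of 8 refinement steps are assumptions made for the example, and no real model is called.

```python
def autoregressive_passes(num_tokens: int) -> int:
    """Count sequential forward passes when each token waits for the previous one."""
    passes = 0
    for _ in range(num_tokens):
        passes += 1  # token i cannot start until token i-1 exists
    return passes


def diffusion_passes(num_tokens: int, refinement_steps: int) -> int:
    """Count sequential forward passes when every pass refines all tokens at once."""
    if num_tokens == 0:
        return 0
    # The count depends on the number of refinement steps, not on sequence length.
    return refinement_steps


if __name__ == "__main__":
    n = 500  # sequence length taken from the "tokens 47 through 500" example
    print("autoregressive:", autoregressive_passes(n))
    print("diffusion (8 steps, assumed):", diffusion_passes(n, 8))
```

Each diffusion pass does more work than a single autoregressive step because it touches the whole sequence, so pass counts alone overstate the gain. The point is only that the sequential dependency chain gets shorter.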

That's the headline. The engineering reality is messier. Diffusion models historically struggled with discrete tokens (words) versus continuous values (pixels). Recent papers show progress, but questions remain about quality degradation when you push speed to the limit. Mercury's benchmarks claim parity with frontier models on coding tasks. Enterprise A/B tests will determine if that holds under production loads.

Who needs millisecond code

Three customer segments care about sub-second responses. Developers hate waiting, enterprises hate compute bills, and real-time applications literally can't function with traditional latencies.

For developers, the pitch is flow state. Copilot already converted millions by reducing friction. Mercury promises to eliminate it. Early integrations with ProxyAI, Buildglare, and Kilo Code target this wedge. The tools pitch Mercury as the backend that makes suggestions appear before you finish typing.

For enterprises, it's unit economics. If Mercury truly delivers 10x throughput per GPU, that transforms the cost structure of AI-powered products. A customer service bot running Mercury could handle 10x more conversations per server. Or cut infrastructure spend by 90%. AWS clearly believes the math. Mercury launched on Bedrock and SageMaker JumpStart in August.
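The arithmetic behind the 10x and 90% figures can be checked with a short sketch. The workload of 10,000 tokens per second and the 100 tokens-per-second baseline are hypothetical values chosen for illustration; only the 1,000 tokens-per-second figure comes from Inception's claim.

```python
import math


def gpus_needed(required_tokens_per_s: float, tokens_per_s_per_gpu: float) -> int:
    """GPUs required to sustain a workload, rounding up to whole devices."""
    return math.ceil(required_tokens_per_s / tokens_per_s_per_gpu)


def savings_fraction(speedup: float) -> float:
    """Fraction of GPU spend saved when per-GPU throughput rises by `speedup`.

    Assumes GPU price, utilization, and workload all stay constant.
    """
    return 1.0 - 1.0 / speedup


if __name__ == "__main__":
    workload = 10_000  # tokens/second, hypothetical
    baseline = gpus_needed(workload, 100)    # assumed autoregressive throughput
    mercury = gpus_needed(workload, 1_000)   # claimed Mercury throughput
    print(baseline, mercury)
    print(f"{savings_fraction(10):.0%} saved at 10x")
    print(f"{savings_fraction(2):.0%} saved at 2x")
```

The same function shows how sensitive the pitch is to the baseline: if competitors close the gap to 2x, the saving falls from 90% to 50%.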

For real-time applications such as voice agents, live translation, and interactive debugging, Mercury enables products that autoregressive models price out. Microsoft's NLWeb pilot, announced at Build, specifically chose Mercury for natural language web interfaces that need instant responses.

The distribution problem

Speed alone doesn't guarantee adoption. GitHub Copilot owns 90% of Fortune 100 accounts. Cursor hit $500 million ARR by June. Both have baked themselves into developer muscle memory.

Inception's distribution strategy skips direct competition. Instead of building a coding tool, they're selling the engine. AWS Bedrock gives them enterprise reach. OpenRouter captures the indie developer crowd. The API mimics OpenAI's format, making swaps trivial.
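A minimal sketch of what a "trivial swap" looks like with an OpenAI-style chat completions request. The Inception base URL and both model names below are placeholders, not documented values, and the code only builds the request without sending it.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    base_url: str
    model: str


# Placeholder values for illustration only; check each vendor's docs for real ones.
CURRENT = Provider("https://api.openai.com/v1", "current-model-placeholder")
CANDIDATE = Provider("https://inception.example/v1", "mercury-placeholder")


def build_chat_request(provider: Provider, prompt: str) -> tuple[str, str]:
    """Return (url, json_body) for an OpenAI-style chat completions call."""
    url = f"{provider.base_url}/chat/completions"
    body = json.dumps({
        "model": provider.model,
        "messages": [{"role": "user", "content": prompt}],
    })
    return url, body


if __name__ == "__main__":
    for provider in (CURRENT, CANDIDATE):
        url, body = build_chat_request(provider, "Explain this stack trace.")
        print(url)
        print(body)
```

If the formats really match, only the base URL, the model name, and the API key change, while the calling code stays the same. That is what keeps switching costs low in both directions.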

The risk: becoming commoditized infrastructure. If Mercury just powers other companies' products, Inception captures less value than the tools wrapping it. The partnership list suggests they've accepted this trade. Better to be Intel than Gateway.

The autoregression empire strikes back

OpenAI and Anthropic won't watch passively. Both companies have explored non-autoregressive approaches. Neither has shipped them. That suggests either technical barriers or strategic priorities elsewhere.

More immediately, speed-optimized autoregressive models keep improving. Gemini Flash, Claude Haiku, and Amazon's Nova Micro all target the latency-sensitive segment Mercury claims. SambaNova advertises DeepSeek V3 running at 250 tokens/second on custom hardware. If specialized chips make autoregression fast enough, Mercury's advantage narrows.

The deeper question: Does parallel generation scale? Inception's papers acknowledge that diffusion models typically need multiple refinement steps. Too few steps and quality drops. Too many and you lose the speed advantage. The sweet spot might be task-specific, which complicates the "drop-in replacement" pitch.
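The step-count tradeoff can be made concrete with a toy throughput model. The 512-token block and the 64 ms per refinement pass are invented numbers, picked so that 8 steps reproduces the claimed 1,000 tokens per second. They are not measurements of Mercury.

```python
def tokens_per_second(seq_len: int, steps: int, step_ms: int) -> float:
    """Throughput when `steps` sequential passes each refine `seq_len` tokens."""
    if steps <= 0 or step_ms <= 0:
        raise ValueError("steps and step_ms must be positive")
    total_ms = steps * step_ms
    return seq_len * 1000 / total_ms


if __name__ == "__main__":
    seq_len, step_ms = 512, 64  # hypothetical block size and per-pass latency
    for steps in (4, 8, 16, 32, 64):
        tps = tokens_per_second(seq_len, steps, step_ms)
        print(f"{steps:>2} steps -> {tps:.0f} tokens/second")
```

Under these assumptions throughput falls in inverse proportion to the step count, so a task that needs four times as many refinement steps to reach acceptable quality gives back three quarters of the speed advantage.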

What breaks first

Three scenarios could crack Inception's thesis:

Quality gaps emerge at scale. Lab benchmarks use standard datasets. Production code is weird. If Mercury handles clean Python but chokes on legacy enterprise Java, adoption stalls.

Autoregression gets fast enough. If next-gen GPT models hit 500 tokens/second through clever caching or hardware, "10x faster" becomes "2x faster." That's still valuable but not paradigm-shifting.

Distribution partners squeeze margins. AWS and Microsoft want differentiated offerings, but they also want commodity pricing. If Mercury becomes successful infrastructure, expect pressure on economics.

The bull case remains compelling. Every millisecond matters for voice agents, coding assistants, and interactive applications. If diffusion delivers consistent quality at 1,000 tokens/second, it unlocks product categories that don't exist today. Imagine debuggers that explain errors before you finish reading them. Customer service that feels like conversation, not typing indicators.

Inception's founders spent years publishing the research. Now they're testing whether academic innovation translates to market disruption. The $50 million says smart money believes parallel beats sequential.

The code will ship fast. Whether it works is another question.

Why this matters:

- Diffusion could reset AI economics. If the 10x throughput claim holds, the same workload needs roughly one-tenth the infrastructure.

- Speed enables new products. Real-time voice agents become profitable at Mercury's claimed latencies.

❓ Frequently Asked Questions

Q: How exactly do diffusion models work differently from ChatGPT?

A: ChatGPT writes one token at a time, each waiting for the previous one. Diffusion models start with random tokens and refine all of them simultaneously through multiple passes. Think editing an entire paragraph at once versus typing it word by word. This parallel processing is why Mercury reportedly hits 1,000 tokens/second versus the 50-200 typical of autoregressive production models.

Q: Who are the professors behind Inception?

A: CEO Stefano Ermon (Stanford CS), Aditya Grover (UCLA), and Volodymyr Kuleshov (Cornell) founded Inception. Papers co-authored by members of the founding team include EDLM, a diffusion language model, and FlashAttention, which is an efficient attention algorithm rather than a diffusion method. Ermon specifically pioneered applying diffusion to discrete tokens (words) rather than just continuous values (pixels). Their academic credibility helped secure backing from Andrew Ng and Andrej Karpathy.

Q: What's the actual cost difference for companies using Mercury?

A: At 10x throughput, Mercury could cut infrastructure costs by 90%. As an illustration, a customer service bot handling 10,000 daily conversations that needs 10 H100 GPUs with traditional models ($300,000/month, which assumes $30,000 per GPU per month) would need 1 GPU with Mercury ($30,000/month). AWS Bedrock pricing isn't public yet, but the math suggests dramatic savings for high-volume applications if the throughput claim holds.

Q: Which coding tools can I actually use Mercury with today?

A: Mercury is available through AWS Bedrock, SageMaker JumpStart, OpenRouter, and Poe. Specific coding tools include ProxyAI, Buildglare (for low-code web development), and Kilo Code. You can also access it directly via Inception's API, which mimics OpenAI's format. Microsoft's NLWeb for natural language interfaces also integrated Mercury.

Q: Why haven't OpenAI or Anthropic shipped diffusion models if they're so fast?

A: Both have explored non-autoregressive approaches in research but haven't productized them. The challenge: diffusion needs multiple refinement steps. Too few and quality drops. Too many and you lose speed. Inception claims they've found the sweet spot, but enterprise A/B tests will determine if quality holds under production loads with messy legacy code.