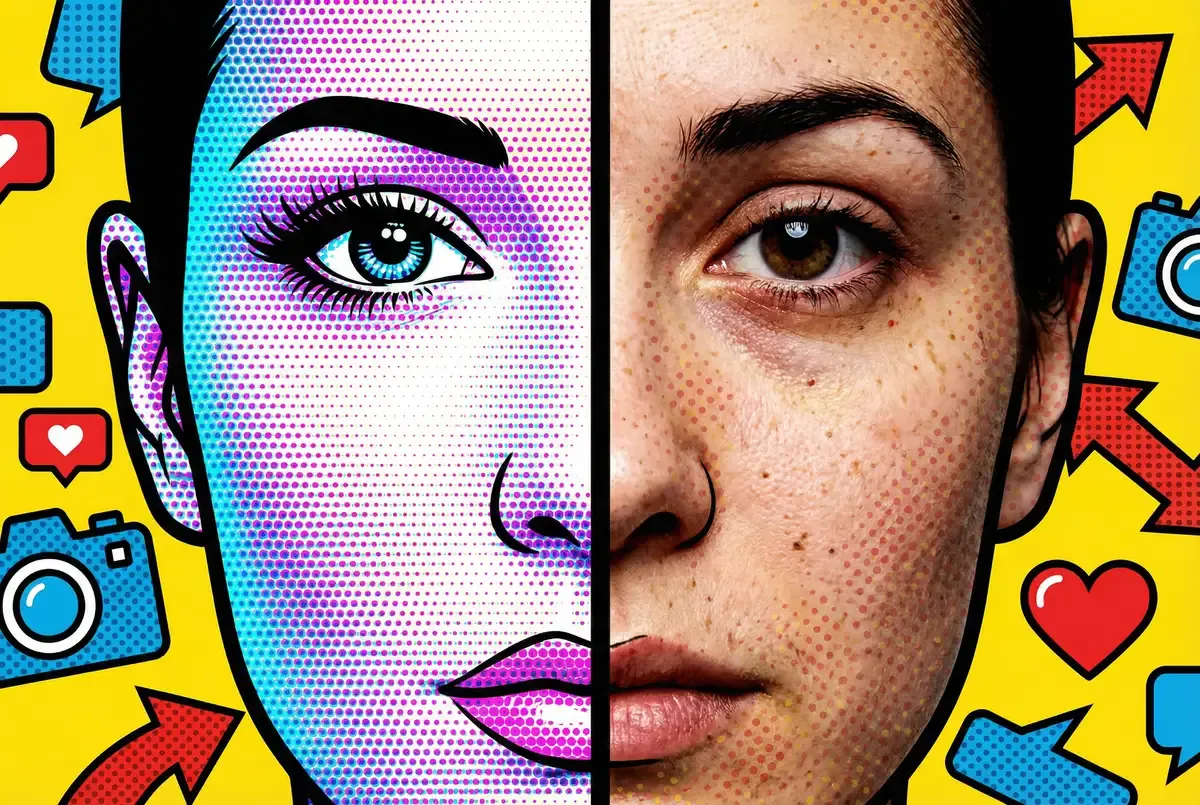

If you scrolled through Instagram this morning, you probably saw a face that never existed. Skin smoother than human biology allows. Lighting that's just off enough to trigger that uncanny feeling. Background details that blur into nonsense if you look too close. That's the tell—the glossy sheen of AI-generated imagery. Adam Mosseri sees it everywhere now. His solution? Make it the camera companies' problem.

Mosseri spent 2025 watching Meta pour tens of billions of dollars into AI infrastructure. The company built generative tools directly into Instagram and Facebook, rolled out AI chatbots that users could customize, and even experimented with AI influencers based on real celebrities. Then, in a 20-slide year-end post on Threads, Instagram's head did something unusual for a platform executive: he admitted the whole thing has gotten out of hand.

"The feeds are starting to fill up with synthetic everything," Mosseri wrote. Authenticity is becoming "infinitely reproducible," he declared. You can't trust photos anymore. You can't trust videos. The polished Instagram aesthetic that made the platform matter culturally? Dead, according to Mosseri. Platforms will get worse at spotting AI content as the technology improves, not better.

The fix he proposes: camera manufacturers should cryptographically sign images at capture, creating a "chain of custody" that proves photos are real. Not Meta. Not the company that built the tools flooding its own platform with AI content. Camera makers.

The post reads less like a strategy document and more like a surrender. Which raises a question Mosseri doesn't address: if the company spending billions to create AI content tools has concluded that detecting AI content is impossible, who exactly benefits from that conclusion?

Key Takeaways

• Instagram head Adam Mosseri admits platforms will get worse at detecting AI content as technology improves

• Proposed solution puts the work of cryptographic signing, at a cost likely in the billions, on camera manufacturers rather than Meta

• Photographers told to post unflattering content to prove authenticity while Meta profits from free AI-generated engagement

• Announcement came after Meta spent billions building AI tools that created the problem

The Surrender

Mosseri doesn't mince words. "For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened," he wrote. Not anymore, he says. Adapting will take years.

He's not wrong. Deepfakes keep improving. Midjourney generates images that pass for real. So does OpenAI's Sora. Most people can't spot the fakes most of the time. You don't need expertise to create this stuff—just access to the tools. Meta's own contribution to this flood includes AI Studio, which lets users create custom chatbots, and various generative tools integrated across Instagram and Facebook.

The result, according to Mosseri, is that users will need to abandon their default assumption that what they see is real. Instead, they'll approach media with skepticism, "paying attention to who is sharing something and why." He acknowledges this will be uncomfortable—humans are "genetically predisposed to believing our eyes."

None of this is news. Photographers have been sounding alarms about AI slop for years. Journalists too. Researchers have been tracking the problem since generative models got good enough to matter. What's different here is that Instagram's head is admitting it publicly, calling it inevitable, and pitching a solution that requires Meta to do exactly nothing.

The Camera Manufacturer Solution

Mosseri's proposed fix centers on cryptographic signing. Camera manufacturers would embed digital signatures in images at the moment of capture, creating verifiable proof that a photo is real. "It will be more practical to fingerprint real media than fake media," he wrote.

On the surface, this sounds reasonable. If detection of AI content will only get harder as models improve—which Mosseri states as fact—then perhaps verification of authentic content is the better approach.

But look at what he's actually asking for. Apple needs to build cryptographic signing into iPhones. Samsung too. Canon and Sony would have to retool their cameras. Every manufacturer would need compatible systems that work across billions of devices, stay secure against tampering, and plug into Instagram's infrastructure without breaking. The coordination alone would take years. The cost would run into billions.

And who bears that cost? Not Meta.

It's the industrial logic of a company that floods its platform with synthetic content and then declares that hardware manufacturers should solve the authenticity problem. Meta spent billions making it easy to create AI slop. Now camera makers should spend billions proving what's real.

Mosseri provides no details. Who maintains the cryptographic standards? How do you handle the billions of existing devices that can't sign images? What about backwards compatibility? He just says camera companies "could" do this, waving away the engineering and economics like they're trivial details.

Meta has already admitted defeat on detection. Its own labeling system has proved unclear and ineffective. Watermarks—the precursor to cryptographic signing—have been easy to remove and easier to ignore. The company spent 2025 investing heavily in AI while simultaneously declaring the detection problem unsolvable.

The Imperfection Tax

Photographers already had a Mosseri problem before AI entered the picture. The algorithm doesn't show their work to followers. Not consistently. Not reliably. Ask any professional photographer and you'll hear the same complaint: Instagram suppresses their content. They're forced to game a system they don't understand just to reach their own audience.

Now Mosseri tells them the solution to competing with AI is to make themselves look worse.

"Savvy creators are going to lean into explicitly unproduced and unflattering images of themselves," he wrote. "In a world where everything can be perfected, imperfection becomes a signal. Rawness isn't just aesthetic preference anymore. It's proof. It's defensive."

Read that again. Instagram's head is telling creators that the way to prove they're human is to post content that's "explicitly unproduced and unflattering." Not because it's better content. Because the platform can't tell the difference between human and AI work, and imperfection is, temporarily, a signal of authenticity.

The economics are stark. Humans pay to be real. Meta gets paid either way.

Human creators produce original content at their own expense. They maintain relationships with audiences. Now they're supposed to degrade their production quality just to signal authenticity. Meta? It gets infinite AI content for free. Content that scales without limit and drives the engagement metrics advertisers care about.

Mosseri calls this an aesthetic shift. "Unless you are under 25, you probably think of Instagram as feed of square photos: polished makeup, skin smoothing, and beautiful landscapes," he wrote. "That feed is dead. People stopped sharing personal moments to feed years ago."

He's describing a change Instagram's own algorithm accelerated. The platform stopped prioritizing content from accounts users follow, instead surfacing algorithmically recommended posts. Personal sharing moved to direct messages because the public feed became unreliable for actually reaching friends. Now Mosseri declares this shift complete and tells creators the polished aesthetic that made Instagram culturally significant is over.

What he doesn't mention: Instagram's algorithm could prioritize showing posts to followers. It could surface original content over synthetic content. It could make creator content visible to the audiences that chose to follow them. These are product decisions, not technological constraints.

The Timing Tells a Story

Mosseri made this announcement after Meta placed its bets. The company burned billions on AI infrastructure in 2025. Built generative tools into Instagram and Facebook. Pushed AI chatbots and synthetic content features to users. Only after shipping all of this does Instagram's head declare that detecting AI content is a losing battle and platforms will "get worse at it over time."

This isn't prediction. It's positioning.

By declaring the detection problem unsolvable now, Meta lowers expectations for its own responsibilities. The company can continue benefiting from AI-generated engagement while pointing to Mosseri's post as evidence that everyone knew this was coming and the solution lies elsewhere.

There's a regulatory angle here too. Governments and advocacy groups have been pressuring platforms to label and control AI content. Mosseri's post preemptively frames that as a losing battle, shifting the conversation toward verification of real content—which requires action from hardware manufacturers, not platforms.

The phrase "a lot of amazing AI content" appears in Mosseri's post without examples. He doesn't identify what this amazing content is, who created it, or why it's valuable. The claim exists to soften the admission that Instagram is filling up with synthetic content, suggesting this is a quality problem rather than a fundamental shift in how the platform operates.

Meta's incentives are straightforward. AI content is free. It scales without friction. It keeps people scrolling. Human creators? They want payment. They have rights. They require moderation. An Instagram dominated by AI is cheaper to run than one dominated by humans—assuming users can't tell the difference and the engagement numbers don't tank.

Where This Goes

Mosseri thinks AI will soon replicate imperfection convincingly enough to fool people. At that point, he says, authenticity won't live in the content anymore. It'll live in the identity. "We'll need to shift our focus to who says something instead of what is being said," he wrote.

This requires platforms to surface much more context about who's posting. Identity verification. Credibility signals. Account transparency. All things Meta could build now but has chosen not to prioritize.

Instead, the company is betting that camera manufacturers will solve the authenticity problem through cryptographic signing. This conveniently requires no immediate action from Meta while positioning the company as forward-thinking about a problem it helped create.

The photographers and creators who built Instagram into a cultural platform are now told to compete by making themselves look worse. The algorithm that already doesn't show their work to followers remains unchanged. The platform that spent billions on AI tools declares detecting AI impossible. And the solution requires hardware manufacturers to rebuild their products around verification systems that don't yet exist at scale.

Mosseri's post frames all of this as inevitable technological change. But these are product decisions. Instagram could prioritize follower content over algorithmic recommendations. It could invest in detection rather than declaring it futile. It could choose not to flood its own platform with AI generation tools while simultaneously claiming it can't control the results.

The company that created this problem is now declaring it someone else's responsibility to solve. That's not a technology limitation. It's a business decision.

Instagram's 3 billion users are about to learn that seeing is no longer believing. What they won't see in Mosseri's post is who made that choice, who profits from it, and who pays the cost.

❓ Frequently Asked Questions

Q: How does cryptographic signing for photos actually work?

A: When you take a photo, the camera attaches a cryptographic digital signature to the file at capture. Anyone holding the matching verification key can then check that the image has not been altered since it was signed. Any later change to the signed data invalidates the signature. The technology exists (cameras from Leica and Sony already support C2PA standards) but requires industry-wide adoption across billions of devices to work at Instagram's scale.
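
The sign-then-verify idea in the answer above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not how C2PA or any camera firmware actually does it. A real device would use a public-key signature backed by a certificate and a private key held in secure hardware, while this sketch substitutes an HMAC with a shared secret so it runs with only the standard library. The names `DEVICE_KEY`, `sign_capture`, `verify_capture`, and the manifest layout are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical per-device secret. A real camera would hold a private key in
# secure hardware and publish a certificate; HMAC is only a stand-in here.
DEVICE_KEY = b"demo-device-key-not-for-real-use"


def sign_capture(image_bytes: bytes, device_id: str, key: bytes = DEVICE_KEY) -> dict:
    """Bind the image's hash to a device ID in a small claim, then sign the claim."""
    claim = {
        "device_id": device_id,
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    # Serialize deterministically so signer and verifier hash the same bytes.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_capture(image_bytes: bytes, manifest: dict, key: bytes = DEVICE_KEY) -> bool:
    """Return True only if the claim is untampered and matches these image bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was modified, or the wrong key was used
    actual_hash = hashlib.sha256(image_bytes).hexdigest()
    return hmac.compare_digest(actual_hash, manifest["claim"]["image_sha256"])


original = b"\x89raw-sensor-bytes"          # placeholder for real image data
manifest = sign_capture(original, device_id="camera-001")
edited = original + b"\x00"                  # a one-byte change to the image

print(verify_capture(original, manifest))    # True
print(verify_capture(edited, manifest))      # False
```

The sketch also hints at why the rollout is hard: every verifier needs a trustworthy way to obtain each device's verification key, and a shared secret like the one used here would not survive contact with billions of devices.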

Q: How much has Meta actually spent on AI?

A: Meta spent over $30 billion on AI infrastructure in 2024 alone, mostly on Nvidia GPUs for training models. The company plans similar spending in 2025-2026. This includes building AI Studio, generative tools for Instagram and Facebook, and the Llama language model family. For context, that's more than the entire annual revenue of most Fortune 500 companies.

Q: Can't Instagram just ban AI-generated images entirely?

A: Technically difficult and economically undesirable for Meta. AI content drives engagement, costs nothing to produce, and requires no creator payments or rights management. Banning it would reduce content volume and engagement metrics that advertisers pay for. More importantly, Meta has admitted it can't reliably detect AI images—so enforcement would be impossible without false positives hitting real photographers.

Q: What percentage of Instagram content is AI-generated now?

A: Meta hasn't released official figures. Third-party analysis from Graphika found that AI-generated images grew from under 1% of viral Instagram posts in early 2023 to roughly 15-20% by late 2024. The percentage varies dramatically by category—fashion and travel content shows higher AI rates. Mosseri's post suggests the company expects AI to become the majority of content within years.

Q: Why did Instagram's polished aesthetic die?

A: Instagram's algorithm changed around 2021-2022, prioritizing recommended content over posts from accounts you follow. This made the public feed unreliable for reaching friends, so personal sharing moved to DMs. The polished grid became performative rather than personal. Now Mosseri says imperfect, raw content signals authenticity—but only until AI learns to fake imperfection too.