The app remembers key details about users. Tell it you're learning Spanish, and it tracks your progress. Mention food allergies, and it adjusts its recommendations. It also learns from your social media engagement: the posts you like, the content you click, and how you interact with friends.

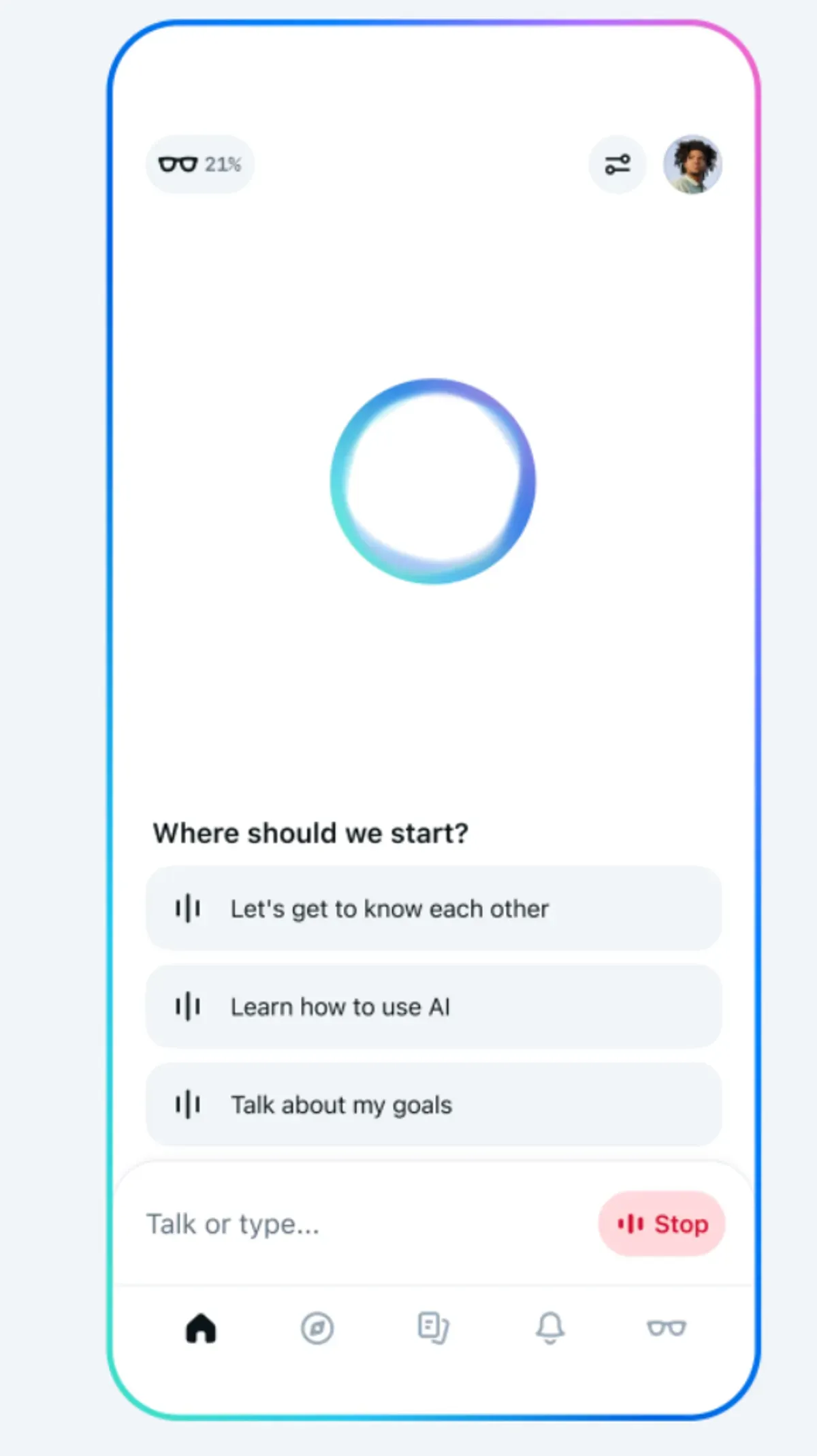

Voice commands drive the experience. Meta added experimental "full-duplex speech" technology that generates voice responses directly instead of converting text to speech. The AI speaks more naturally, though the feature remains in testing across the U.S., Canada, Australia, and New Zealand.

A Digital Memory That Never Forgets

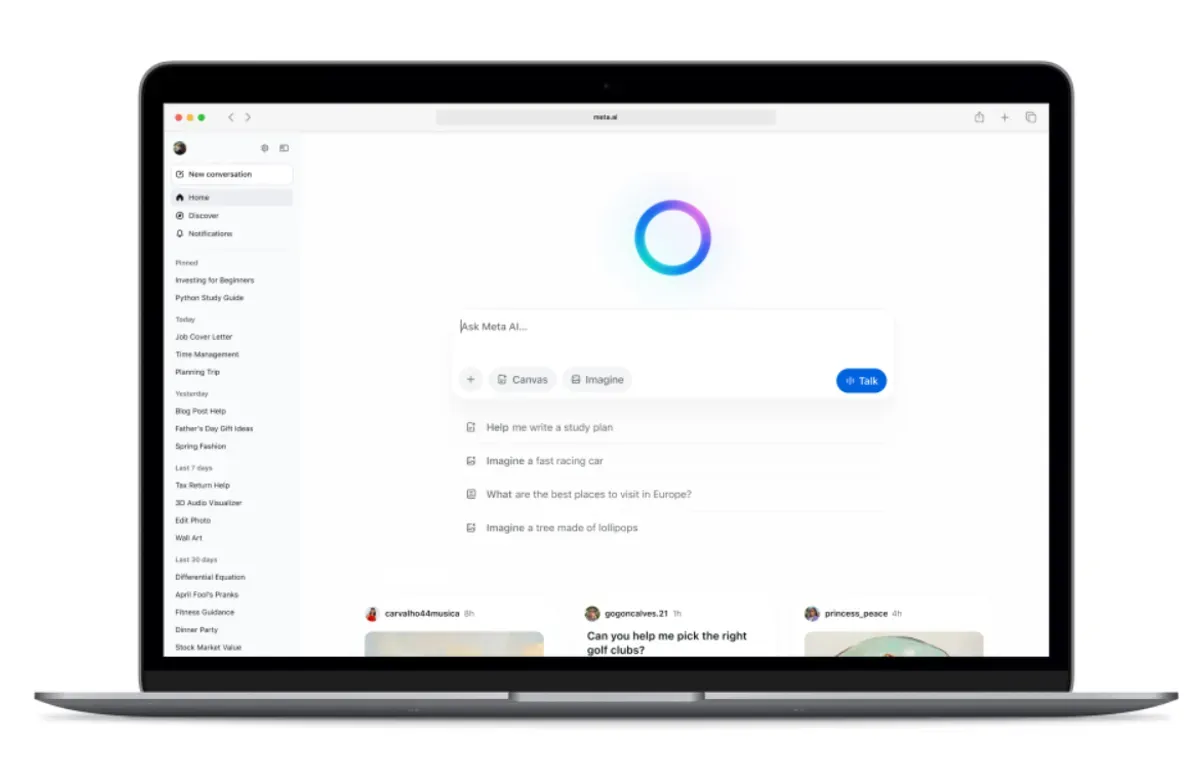

The app connects with Meta's broader ecosystem. Start a conversation on Ray-Ban smart glasses, continue on your phone, then finish on desktop. One limitation: you can't start chats on desktop and move to glasses. The AI works across WhatsApp, Instagram, Facebook, and Meta's smart glasses.

Meta packed the app with social features. A Discover feed lets users share their AI interactions and modify popular prompts. Nothing is posted without the user's permission, but the social angle reveals Meta's strategy: make AI part of everyday digital conversations.

The desktop version adds tools for work. Users can generate and edit documents, create images, and export PDFs. Meta is also testing document analysis and rich text editing, pushing the app beyond casual chat into productivity.

The Personalization Strategy

Meta is betting on personalization to stand out. While competitors like ChatGPT and Claude focus on broad knowledge, Meta's AI aims to understand individual users. The company wagers that knowing your coffee order and meeting schedule matters more than explaining complex topics.

The hardware integration reveals bigger plans. By connecting with Ray-Ban smart glasses, Meta positions its AI as an always-available assistant, ready whether you're walking downtown or sitting at your desk.

The privacy question looms large. Meta's pitch boils down to a simple trade: share your data, get an AI that understands you. Some users will embrace the personalization; others might question the data collection. Meta is betting that enough people want a truly personal AI to accept the trade-off.

Timing and Strategy

The timing matters. As AI assistants multiply, Meta is carving out its niche: deep personalization built on social data. It has turned the practice it is most criticized for, collecting vast amounts of user information, into its key advantage.

Why this matters:

- Meta found a unique edge in AI: years of personal data that competitors can't match

- The launch shows Meta's AI strategy: become essential to daily life by knowing users better than any other assistant

Read on, my dear:

Meta: Introducing the Meta AI App: A New Way to Access Your AI Assistant