💡 TL;DR - The 30-Second Version

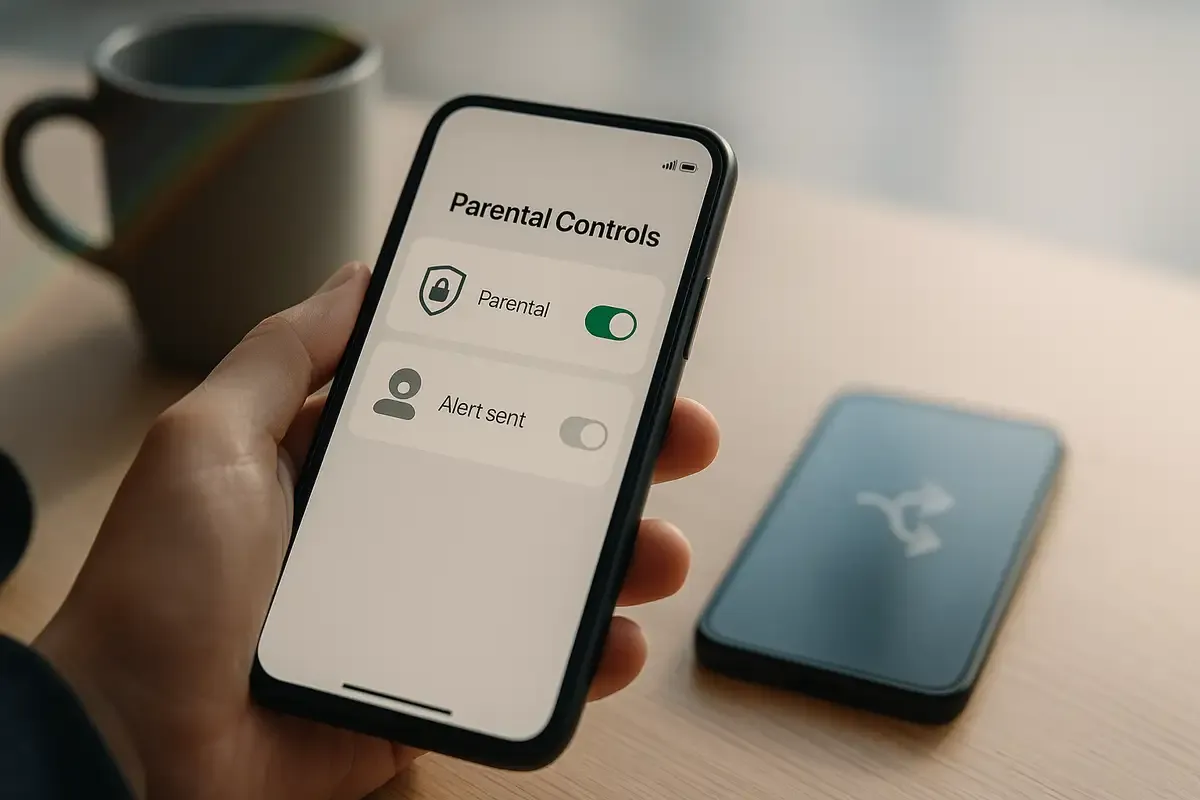

👨👩👧 OpenAI launched parental controls Monday letting parents link ChatGPT accounts with teens to filter content, set usage windows, and receive alerts if human reviewers detect self-harm language—but the setup is opt-in and either party can unlink anytime.

🔄 ChatGPT now silently routes emotional or sensitive prompts to more restrictive models for all users—including paying adults—with no notification when switches occur and no toggle to disable the behavior.

⚖️ The controls emerged after a California family sued OpenAI last month alleging the chatbot contributed to their teen's suicide, with OpenAI working alongside state attorneys general on the design.

⏱️ Parental alerts for self-harm arrive within hours of flagging—not minutes—and OpenAI hasn't published median response times, false positive rates, or review team size despite these metrics determining actual effectiveness.

🎭 ChatGPT's human-like design drove adoption but created attachment risks the company is now retrofitting controls to address—while determined teens bypass restrictions via burner accounts and adult users lose agency over paid products.

🔮 OpenAI is building age-detection systems to auto-apply teen settings when uncertain about user age, but hasn't explained how classification works or what false positive rate is acceptable—potentially pushing more adults into restrictive modes.

Parents get alerts on self-harm language; safety routing now touches grown-ups, too.

OpenAI rolled out parental controls for ChatGPT on Monday, giving families the ability to link accounts, filter content, set quiet hours, and receive alerts if staff reviewing flagged chats see signs of self-harm in a teen’s conversation. The company framed the launch as a youth-safety upgrade, but it also coincides with a quieter change: when prompts turn emotional or sensitive, ChatGPT can reroute messages to stricter models—even for paying adults—without asking first. See OpenAI’s own explanation in its parental controls announcement.

What’s actually new—and what isn’t

The controls centralize familiar switches in one panel. Parents can disable voice mode and image generation, turn off memory, opt out of model-training, and block access during set hours. For linked teen accounts, stricter defaults now suppress graphic material, sexual/romantic or violent role-play, viral challenges, and “extreme beauty ideals.” That is the product delta. Not a silver bullet.

Alerts are the headline feature: when the system detects potential self-harm, a human reviewer decides whether to notify a parent by email, text message, and push notification. The alert shares timing and guidance, not chat transcripts. OpenAI says it worked with the California and Delaware attorneys general and youth-safety groups on the design, after a California family sued last month alleging ChatGPT contributed to their son’s death. The setup is opt-in for both sides; either party can initiate the link, and unlinking triggers a notice to the parent.

The design tension, laid bare

ChatGPT grew by feeling conversational and empathetic. It remembers context, mirrors tone, and follows social cues. That human-like veneer drove adoption; it also raises risk. People—especially teens—can form attachments to something that cannot actually care, diagnose, or consent. Parental controls acknowledge the risk without solving it. A motivated 15-year-old can spin up a burner account on the web in minutes. Tools help. They do not substitute for trust.

There’s a second tension: privacy versus intervention. Parents do not get transcripts, only a nudge that something is wrong. Teen privacy is preserved, but response time matters, and it matters in minutes. OpenAI says alerts currently arrive within hours and that it is working to shrink the lag from flag to alert. Until we see real median response times and false-positive rates, this remains a promise, not a safety metric. That’s the uncomfortable truth.

Adult users, routed without consent

Alongside teen controls, OpenAI has been testing a “safety router” that quietly shifts individual messages to more conservative models when conversations veer into emotional or legally sensitive territory. The company’s ChatGPT lead, Nick Turley, has said the switch happens per-message and is temporary. Many subscribers are livid. They chose a model for a reason, and there is neither a toggle to refuse rerouting nor an on-screen notice when it occurs.

This is not an argument against guardrails. It is an argument for agency and disclosure in a paid product. Adults deserve the option to accept the risks of a frank conversation with a general-purpose model—or to opt in to the safer one—without being silently overruled. Silent overrides feel paternalistic, and they erode trust even when well-intentioned.

Business and policy cross-pressures

OpenAI faces three constituencies at once. Regulators and courts want fewer tragedies and clearer due care. Parents want visibility with minimal overreach. Teens want autonomy and privacy. Now add paying adults, who expect predictable tools that do not change behavior mid-sentence. All four groups are right in their own way, and their interests collide.

The current architecture splits the difference. Parents get meaningful knobs—but not enforcement. Teens get privacy—but alerts may arrive late. Adults get safer defaults—but lose control at the edges. OpenAI gets to say it acted—without publishing the operational numbers that would let outsiders grade effectiveness. That’s a policy posture, not a product finish line.

What to watch next

Two data points matter more than press-day feature lists. First, routing transparency: will OpenAI show a banner when it swaps models, and will it offer an account-level override for adults? Second, emergency latency: how long from a flagged message to a parent’s phone, on average and at the 90th percentile? Publish those numbers monthly and the debate becomes empirical.
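To make the latency question concrete, here is a minimal sketch of how the mean, median, and 90th-percentile figures could be computed from a log of flag-to-alert delays. The sample delays are invented for illustration, the function name `latency_summary` is hypothetical, and nothing here reflects data OpenAI has published.

```python
from statistics import mean, median, quantiles


def latency_summary(delays_minutes):
    """Summarize flag-to-alert delays (in minutes) as mean, median, and p90."""
    if len(delays_minutes) < 2:
        raise ValueError("need at least two samples to compute percentiles")
    # quantiles(n=10) returns the nine decile cut points; the last one is p90.
    p90 = quantiles(delays_minutes, n=10, method="inclusive")[-1]
    return {
        "mean": mean(delays_minutes),
        "median": median(delays_minutes),
        "p90": p90,
    }


# Hypothetical delays in minutes, made up purely for illustration.
sample_delays = [12, 25, 40, 55, 90, 130, 180, 240, 300, 600]
summary = latency_summary(sample_delays)
print(summary)
```

In this made-up sample the median sits well below the 90th percentile, which is why a single average would hide the slow alerts that matter most in an emergency.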

One more question lingers: age detection. OpenAI says it’s building systems to apply teen settings by default when uncertain about age. False positives will push more adults into teen-style constraints. False negatives miss the very users these controls aim to protect. Precision here is not “nice to have.” It is the crux.
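The trade-off between false positives and false negatives can be illustrated with a small calculation. This is a sketch under stated assumptions: the counts are invented, the names `AgeCheckCounts` and `error_rates` are hypothetical, and OpenAI has not described how its age detection works or how accurate it is.

```python
from dataclasses import dataclass


@dataclass
class AgeCheckCounts:
    adults_flagged_as_teen: int  # false positives: adults given teen settings
    adults_passed: int           # true negatives: adults left alone
    teens_flagged_as_teen: int   # true positives: teens given teen settings
    teens_passed: int            # false negatives: teens the system missed


def error_rates(c: AgeCheckCounts) -> dict:
    """Compute false-positive rate, false-negative rate, and precision."""
    adults = c.adults_flagged_as_teen + c.adults_passed
    teens = c.teens_flagged_as_teen + c.teens_passed
    flagged = c.teens_flagged_as_teen + c.adults_flagged_as_teen
    return {
        "false_positive_rate": c.adults_flagged_as_teen / adults,
        "false_negative_rate": c.teens_passed / teens,
        # Precision: share of users given teen settings who really are teens.
        "precision": c.teens_flagged_as_teen / flagged,
    }


# Invented example: 9,000 adults and 1,000 teens, for illustration only.
counts = AgeCheckCounts(
    adults_flagged_as_teen=450,
    adults_passed=8550,
    teens_flagged_as_teen=900,
    teens_passed=100,
)
rates = error_rates(counts)
print(rates)
```

With these hypothetical numbers, a false-positive rate of only 5% still means about one in three users placed under teen settings is an adult, because adults greatly outnumber teens in the example population.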

Why this matters

- Anthropomorphized AI drives engagement—and dependency. The design that made ChatGPT feel “human” now forces trade-offs between empathy, safety, and user agency for both teens and adults.

- Silent safety routing chips away at trust. Absent transparent controls and published response metrics, OpenAI’s fixes protect the company more than users.

❓ Frequently Asked Questions

Q: How does account linking actually work—can my teen refuse?

A: Yes. Both parent and teen must opt in. Either can send the invitation, but the other must accept. Either party can also unlink at any time—the parent receives a notification when this happens, but there's no enforcement mechanism. A teen can decline the invitation or disconnect later without losing ChatGPT access.

Q: Can I opt out of the safety routing if I'm an adult paying for ChatGPT Plus or Pro?

A: No. OpenAI currently provides no toggle to disable the safety router that switches emotional or sensitive prompts to more restrictive models. Adult users report being rerouted to "gpt-5-chat-safety" variants without notification, regardless of subscription level or chosen model. The company has not announced plans to offer an opt-out.

Q: How fast do parental alerts arrive after ChatGPT detects potential self-harm?

A: Within hours, not minutes. OpenAI states alerts arrive "within hours" after human reviewers flag concerning conversations. The company acknowledges that the current lag exceeds what it considers acceptable and says it is working to reduce delays. No specific median response times or 90th percentile metrics have been published.

Q: Can my teen just create a second account to bypass parental controls?

A: Yes, easily. The controls only apply to linked accounts. A teen can create unlimited unmonitored accounts using different email addresses on web or mobile. OpenAI is building an age-detection system to auto-apply teen settings when uncertain about user age, but it's not yet deployed and the company hasn't explained how it will work.

Q: Do I need a paid ChatGPT subscription to use parental controls?

A: No. Parental controls are available to all ChatGPT users regardless of subscription tier. Both free and paid accounts can link with teen accounts and access the control panel. However, the safety routing that affects adults applies across all subscription levels, including Plus and Pro users.