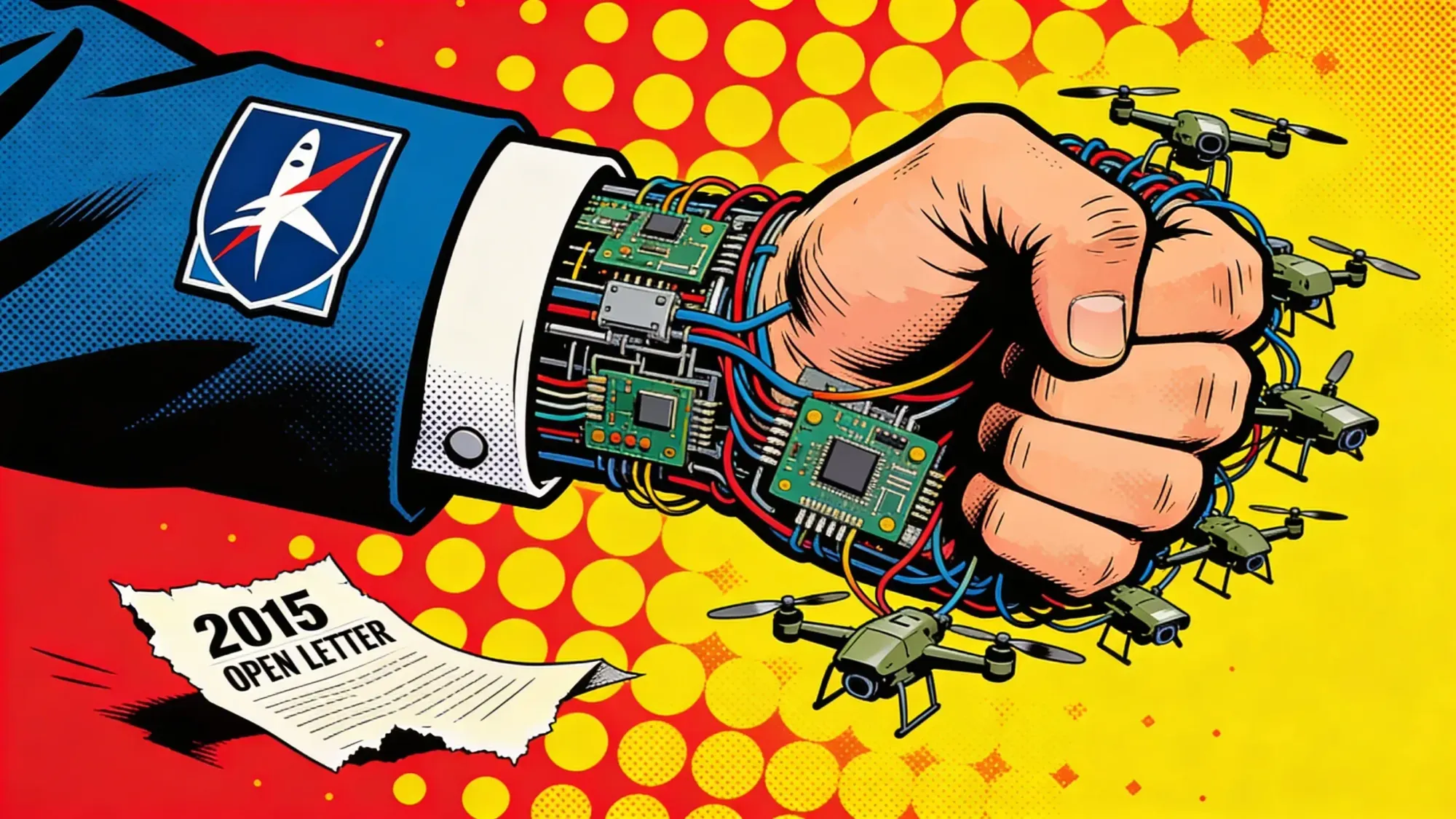

SpaceX and its subsidiary xAI are competing in a secret Pentagon contest to build voice-controlled autonomous drone swarming technology, Bloomberg reported Sunday. The $100 million prize challenge, launched in January by the Defense Innovation Unit and the Defense Autonomous Warfare Group, will develop software that translates spoken battlefield commands into digital instructions for coordinating drone swarms across air and sea. Neither SpaceX nor xAI responded to Bloomberg's requests for comment.

Their participation had not been previously disclosed. Musk's companies are among a small number of entrants selected for the six-month competition, according to people familiar with the matter who requested anonymity to discuss a classified program. The entry is a direct reversal for Musk. In 2015 he signed an open letter from AI researchers warning against autonomous weapons that "select and engage targets without human intervention." A decade later, his engineers will build software for a program the Pentagon describes as progressing through "target-related awareness" to "launch to termination."

The Breakdown

• SpaceX and xAI are competing in a secret $100M Pentagon contest to build voice-controlled autonomous drone swarm software.

• Musk signed a 2015 letter opposing autonomous weapons. His companies will now work on the full project, including targeting phases.

• OpenAI is also involved but limits its role to voice-to-command translation. SpaceX and xAI set no such boundary.

• The Pentagon may designate Anthropic a "supply chain risk" for refusing to drop restrictions on autonomous weapons and mass surveillance.

Five phases, from software to live weapons

The competition runs over six months in five phases. Early rounds focus only on software development. Later stages move to live platform testing, multi-domain drone coordination across air and sea, and what the Pentagon calls mission execution.

A defense official was blunt about the ambition when the contest was first announced. The human-machine interaction being developed, the official said, "will directly impact the lethality and effectiveness of these systems."

Voice-to-command translation is the centerpiece. A commander speaks an order into a headset. AI software converts the words into digital instructions. Multiple drones receive those instructions simultaneously and act as a coordinated swarm, adjusting behavior autonomously while pursuing a target. Flying several drones at once is already routine. Directing a swarm that adapts on its own, that splits and reforms across air and sea, that makes real-time decisions without waiting for human input on each maneuver, is the problem nobody has solved at scale.

DAWG, the Defense Autonomous Warfare Group, was created under the second Trump administration as part of US Special Operations Command. It continues the work of the Biden-era Replicator initiative, which sought to produce thousands of expendable autonomous drones. Replicator was about volume. DAWG wants coordination, swarms that think together and respond to human speech in real time.

xAI's defense buildup

xAI is not treating this as a side project. Job postings on the company's website call for software engineers with active "secret" or "top secret" security clearance, experience working with the Department of Defense or federal contractors, and fluency in AI and data projects. The postings promise a hiring process completed within a week. That urgency matters: defense hiring with clearance requirements normally takes months.

The company already has a footprint in Pentagon contracting. xAI announced in December that its Grok chatbot would be deployed across government sites to "empower military and civilians." Before that, it secured a $200 million contract to integrate xAI into military systems. Those earlier deals centered on information tools, chatbots and analysis platforms. Building the software that coordinates autonomous weapons platforms is a different category of work entirely, and one Musk once argued should not exist. But xAI needs the revenue. The company carries billions in debt, faces regulatory scrutiny after Grok produced sexualized images, and brings in far less money than SpaceX. Pentagon contracts offer a funding stream that doesn't depend on advertising or consumer adoption.

SpaceX, for its part, is a longtime defense contractor, but its military portfolio has stayed close to rockets and satellite communications. SpaceX, Boeing, and Lockheed Martin handle the Pentagon's most sensitive satellite launches. Offensive weapons software is a different business. Rockets go up. Drone swarms go hunting.

Just weeks ago, SpaceX and xAI agreed to merge in a deal valued at $1.25 trillion. Musk's announcement framed the combination as "the most ambitious, vertically-integrated innovation engine on (and off) Earth, with AI, rockets, space-based internet, direct-to-mobile device communications and the world's foremost real-time information and free speech platform." The word "weapon" did not appear. Neither did "drone" or "military." The Pentagon contest fills in what the press release left out.


OpenAI draws a line that SpaceX does not

OpenAI is also involved in the contest, but with strict limits on its role. The company is supporting bids from two defense technology partners, including Applied Intuition, Bloomberg reported last week. OpenAI's contribution covers only the "mission control" element, converting voice commands into digital instructions. Its technology will not control drone behavior, handle weapons integration, or hold targeting authority, according to a submission document Bloomberg reviewed.

A spokesperson said OpenAI's open-source models were included in contest bids and that the company would ensure any use stayed consistent with its usage policy. OpenAI did not submit its own bid. Its involvement came through existing defense partners who embedded the models in their proposals. Sam Altman has said he does not expect OpenAI to develop AI-enabled weapons platforms "in the foreseeable future," though he has left the door open. The distinction is fine but deliberate: providing tools is different from building weapons, even if the tools end up inside weapons systems.

SpaceX and xAI set no such boundary. People familiar with the matter told Bloomberg that the two companies plan to work on the entire project together, including later phases involving target awareness and mission execution. You can draw a clean line between translating a commander's voice into code and directing a drone to strike a target. OpenAI stays on one side of that line. SpaceX and xAI do not.

The companies that won't play

Not every AI company wants in. A senior administration official told Axios this week that the Pentagon is "close" to designating Anthropic a "supply chain risk," a classification that would force every military contractor to sever ties with the AI company. Anthropic has maintained hard limits on autonomous weapons development and mass domestic surveillance in its usage policies. Pentagon officials treat those restrictions as operational obstacles. Anthropic treats them as non-negotiable.

The swarm contest is one piece of a larger Pentagon drone push. The Defense Department runs a separate Drone Dominance Program with more than $1 billion in planned spending. Defense Secretary Pete Hegseth launched it last year with a memo titled "Unleashing U.S. Military Drone Dominance." Anthropic is walking away from that money. SpaceX and xAI are running toward it.

The Pentagon's own people are nervous too

Even inside the building that commissioned this contest, the idea of wiring generative AI into weapons systems has generated real anxiety. Several people with knowledge of the drone swarm program told Bloomberg they worried about what happens when large language models translate voice commands into operational decisions without a human checking the output.

LLMs hallucinate. They generate text that reads as authoritative but is fabricated. In a consumer product, a hallucination produces a wrong restaurant recommendation or a fabricated citation. In a system coordinating autonomous drone swarms in a combat zone, a fabricated output could misdirect a lethal strike. The people Bloomberg spoke with said it would be critical to keep generative AI restricted to translating commands, not to let it make decisions about drone behavior or targeting on its own.

The Pentagon's AI Acceleration Strategy, released in January, does not dwell on those concerns. The document calls for "unleashing" AI agents across the battlefield, from campaign planning to targeting, with language broad enough to accommodate lethal autonomous action.

Defense contracts involving AI have a history of provoking backlash inside the companies tasked with building them. Google is the best-known case. Employees revolted over Project Maven, a Pentagon program that used AI to analyze drone footage, and the company pulled out. That lesson stuck inside Big Tech for years.

But the political and commercial incentives have shifted. OpenAI is now a Pentagon partner. xAI has a $200 million military contract. Anthropic held the line on its restrictions and may get cut from defense work entirely. An OpenAI researcher recently left over concerns about ads in ChatGPT. At Anthropic, another resigned publicly, citing worries about where AI development is headed. The exits have not slowed the contracting.

For now, the drone swarm contest sits in phase one. Software only, no live platforms. But the procurement documents describe where the program is headed, and every company selected to compete signed on knowing the trajectory. Musk's 2015 letter warned against weapons that operate "beyond meaningful human control." His companies' entry in this contest will test whether that phrase still carries weight when a hundred million dollars and a Pentagon contract sit on the other side of the table.

Frequently Asked Questions

Q: What is the Pentagon's drone swarm contest?

A: A $100 million prize challenge launched in January by the Defense Innovation Unit and the Defense Autonomous Warfare Group. It aims to develop software that translates spoken battlefield commands into instructions for coordinating autonomous drone swarms across air and sea over six months.

Q: What is the Defense Autonomous Warfare Group?

A: DAWG was created under the second Trump administration as part of US Special Operations Command. It continues the Biden-era Replicator initiative but focuses on drone swarm coordination rather than just producing large numbers of expendable drones.

Q: How is OpenAI's involvement different from SpaceX's?

A: OpenAI limits its role to the mission control element, converting voice commands into digital instructions. It will not control drone behavior or handle weapons integration. SpaceX and xAI plan to work on the entire project, including later targeting and mission execution phases.

Q: Why might the Pentagon designate Anthropic a supply chain risk?

A: Anthropic has refused to remove usage policy restrictions on autonomous weapons development and mass domestic surveillance. A supply chain risk designation would force every military contractor to cut ties with the company, effectively locking Anthropic out of defense work.

Q: What is the Drone Dominance Program?

A: A separate Pentagon initiative with more than $1 billion in planned spending across four phases. Defense Secretary Pete Hegseth launched it to field hundreds of thousands of cheap, weaponized attack drones by 2027. The first phase selected 25 vendors to compete.