💡 TL;DR - The 30-Second Version

💰 BlackRock's Global Infrastructure Partners is in advanced talks to acquire Aligned Data Centers for roughly $40 billion (78 facilities with about 5 gigawatts of capacity) as sovereign funds treat data centers as critical national infrastructure.

⚡ U.S. data center electricity consumption more than doubled from 76 terawatt-hours in 2018 to 176 TWh by 2023, jumping from 1.9% to 4.4% of total U.S. electricity use in five years.

🔥 GPU chips activate thousands of cores simultaneously for AI workloads, generating massive heat that requires cooling systems consuming 30% to 50% of total facility power.

💧 AI facilities used 55.4 billion liters of water in 2023—five times traditional centers—with projections exceeding 124 billion liters annually by 2028, mostly lost to evaporation.

🔌 Transmission bottlenecks and skilled labor shortages slow build-outs even as Meta, Oracle, and others commit tens of billions to new capacity.

🌍 Efficiency gains can reduce per-chip consumption, but parallel processing and heat removal impose structural limits that will reshape power markets and rate structures.

A construction boom collides with hard limits on power, cooling, and labor.

BlackRock’s Global Infrastructure Partners is in advanced talks to buy Aligned Data Centers for roughly $40 billion, according to Bloomberg. The portfolio spans 78 facilities with around 5 gigawatts of capacity across the U.S. and South America. The fund’s co-investor is MGX, an AI infrastructure vehicle backed by Abu Dhabi’s Mubadala, another sign that sovereign money now sees data centers as core national infrastructure.

The same week, Groq—the $6.9 billion startup making chips for AI inference—said it will open more than a dozen data centers in 2026 after standing up 12 this year. Its first Asian site is slated for launch within months, with India a priority market. In Saudi Arabia, where Groq landed a $1.5 billion deal earlier this year, demand already exceeds the company’s supply. Two announcements, one pattern: capital is racing ahead of the grid.

What’s actually new: construction at hyperscale

The numbers are no longer notional. Meta raised $29 billion to finance a Louisiana complex. Oracle issued $18 billion in bonds as it builds infrastructure for OpenAI. Aligned secured $12 billion in equity and debt commitments in January. Put together, these deals point to an unprecedented build-out and rising valuations for assets once treated like utility real estate.

Groq’s push highlights a second shift: specialized silicon for inference. Its design with embedded memory aims to deploy faster and use less energy than general-purpose GPUs. The thesis is straightforward—optimize for the workload that’s growing fastest. Whether that translates to materially lower power per output remains to be proven at sustained, real-world scale.

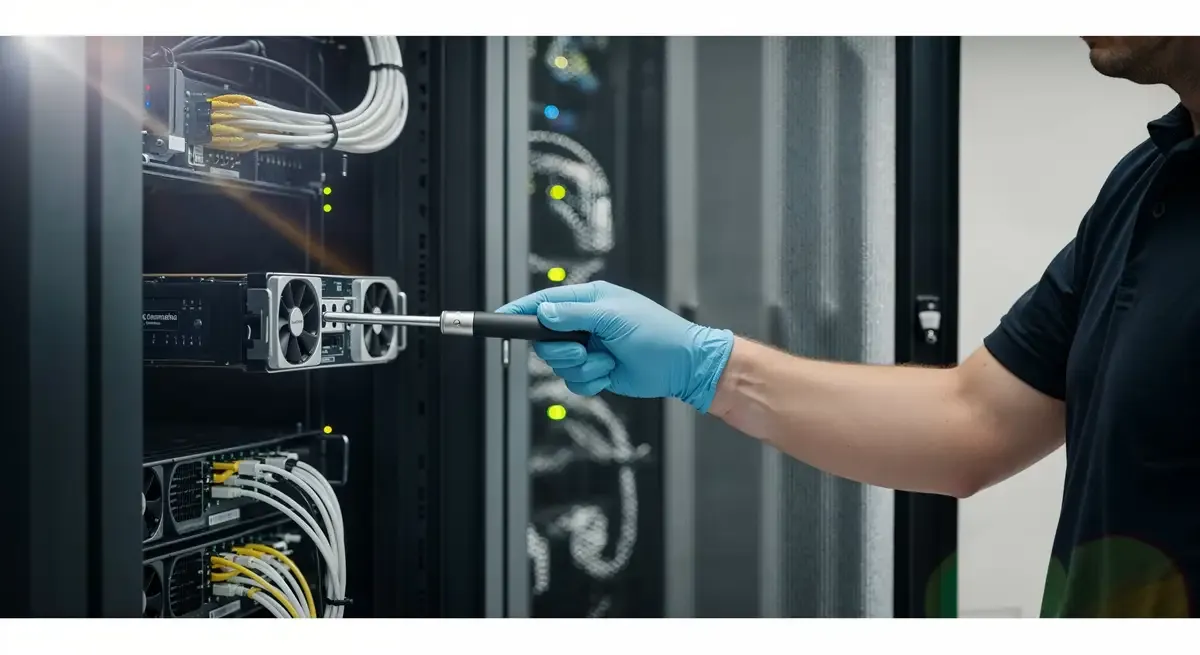

Why AI data centers consume so much energy

The physics starts at the chip. CPUs excel at sequential tasks and spend more time idling between bursts. Modern AI workloads—training and inference—favor GPUs and other massively parallel processors. They light up thousands of cores at once to perform identical math operations across large tensors. More transistors active in parallel means higher instantaneous power draw. Successive GPU generations have added cores faster than they’ve improved efficiency, lifting total facility demand.

The national meter reflects the shift. U.S. data-center electricity use held near 60 terawatt-hours from 2014 to 2016. As GPUs became standard for machine learning, consumption climbed to 76 TWh by 2018 and roughly 176 TWh by 2023. That’s a jump from about 1.9% to 4.4% of U.S. electricity in five years, with credible forecasts pushing toward double-digit share early next decade. Scale, not hype, is driving the curve.
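A quick back-of-envelope check, using only the figures above, suggests the terawatt-hours and percentages are mutually consistent and shows the annual growth rate they imply. This is a rough sketch: the function names are illustrative, and a constant yearly growth rate is a simplifying assumption.

```python
def implied_total_twh(data_center_twh: float, share: float) -> float:
    """Total U.S. electricity implied by a data-center figure and its share."""
    return data_center_twh / share


def compound_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1 / years) - 1


total_2018 = implied_total_twh(76, 0.019)    # about 4,000 TWh
total_2023 = implied_total_twh(176, 0.044)   # about 4,000 TWh
growth = compound_annual_growth(76, 176, 5)  # roughly 18% per year

print(f"Implied U.S. total: {total_2018:,.0f} TWh (2018), {total_2023:,.0f} TWh (2023)")
print(f"Data-center growth: {growth:.1%} per year, {176 / 76:.1f}x overall")
```

Read that way, overall U.S. consumption sat near 4,000 TWh in both years while the data-center slice grew about 2.3 times.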

Heat: the second energy bill

Almost all of that electrical power ends up as heat, and modern AI halls are dense: rack after rack of high-wattage accelerators. Air entering the servers should stay within the ASHRAE-recommended 18–27°C band to preserve chip performance and longevity, which means facilities spend heavily on cooling. In many sites, cooling consumes 30% to 50% of the total power budget.
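To put the 30% to 50% cooling share in perspective, the sketch below converts it into watts drawn from the grid per watt of computing. It assumes, as a simplification, that everything not spent on cooling reaches the IT equipment; real facilities also lose power to conversion, lighting, and other overhead. The function name is illustrative.

```python
def overhead_ratio(cooling_share: float) -> float:
    """Total facility power divided by non-cooling power.

    Assumes all non-cooling power reaches IT equipment, so this is a
    simplified stand-in for power usage effectiveness (PUE).
    """
    if not 0 <= cooling_share < 1:
        raise ValueError("cooling_share must be in [0, 1)")
    return 1 / (1 - cooling_share)


for share in (0.30, 0.50):
    ratio = overhead_ratio(share)
    print(f"Cooling at {share:.0%} of total -> {ratio:.2f} W drawn per W of compute")
```

Under that assumption, a site at the high end of the range pulls two watts from the grid for every watt that does useful computing.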

Cooling also has a water cost. Traditional evaporative systems draw hot air through wetted media or use indirect heat exchangers. The method is effective but thirsty. U.S. estimates put 2023 consumption at roughly 55 billion liters for AI-heavy sites, about five times the figure for “conventional” centers, with projections that AI data-center water use could exceed 124 billion liters annually by 2028. Most of that water evaporates or becomes chemically treated wastewater. One-fifth of facilities tap already-stressed watersheds. And that’s just direct use; the electricity feeding these sites carries its own water footprint from thermal power plants.

Transmission, labor, and timing make it harder

Even if generation can keep up, moving power to new campuses is a constraint. High-voltage lines take years to permit and build, and interconnection queues are clogged. Construction adds another bottleneck. The U.S. faces acute shortages of skilled trades—electricians, ironworkers, pile-driver operators—pushing costs up and schedules out. Developers are paying premiums to import crews across regions and compress timelines, but there’s only so much slack in the system.

“Inference is cheaper” doesn’t automatically fix it

Inference often uses less compute per request than model training, but scale erases that advantage. Billions of daily queries, agents that run for minutes or hours, and retrieval-heavy pipelines still drive sustained parallel workloads. Groq’s experience—demand outrunning supply despite significant capital—suggests inference will add to total load rather than replace it. The real win comes from right-sizing models and workflows: smaller, tuned models on specialized silicon; caching; early-exit strategies; and aggressive job termination when agentic branches prove unpromising.
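Of those levers, caching is the simplest to illustrate. The sketch below is a minimal example, not any vendor's implementation: `run_model` is a hypothetical stand-in for an expensive accelerator-backed call, and the whitespace-and-case normalization is an assumption chosen for illustration.

```python
from functools import lru_cache

model_calls = 0  # counts how often the expensive path actually runs


def run_model(prompt: str) -> str:
    """Hypothetical stand-in for an expensive inference call."""
    global model_calls
    model_calls += 1
    return f"answer to: {prompt}"


@lru_cache(maxsize=1024)
def cached_answer(normalized_prompt: str) -> str:
    """Serve repeated prompts from memory instead of re-running the model."""
    return run_model(normalized_prompt)


def answer(prompt: str) -> str:
    # Light normalization so trivially different prompts share a cache entry.
    return cached_answer(" ".join(prompt.lower().split()))


requests = [
    "Why do GPUs run hot?",
    "why do  gpus run hot?",
    "Why do GPUs run hot?",
    "How is heat removed?",
]
for prompt in requests:
    answer(prompt)

print(f"{len(requests)} requests, {model_calls} model calls")
```

Here four requests trigger only two model runs. How much a real service saves depends on how often its prompts actually repeat.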

What might bend the curve

Operators are shifting to closed-loop liquid cooling and direct-to-chip cold plates to cut evaporation losses. Early microfluidic experiments show promise in pulling heat from the die itself. Siting strategies are changing too: co-locating near surplus generation, building behind-the-meter renewables, and piloting geothermal for both electricity and cooling. On the grid side, utilities can unlock capacity faster with dynamic line rating and sensors that safely raise throughput on existing lines while the long permits grind on. None of this is free. All of it buys time.

The bottom line

The money is real and the physics is stubborn. Investments like GIP’s planned Aligned acquisition make sense only if the grid, cooling, and labor problems are solvable on tight timelines. Some are. Others will take years. In the interim, the costs of speed—electricity, water, and rate structures that socialize infrastructure—are spilling into the broader economy, especially in regions dense with new builds.

Why this matters

- Grid strain and rate design risk shifting private AI costs onto public power bills, especially near data-center clusters.

- Efficiency gains are possible, but parallel compute and heat removal impose structural limits that policy, siting, and smarter workloads must address.

❓ Frequently Asked Questions

Q: Why can't data centers just use regular CPUs instead of power-hungry GPUs?

A: CPUs execute tasks sequentially—one complex calculation at a time, very fast. AI workloads require processing identical operations across millions of data points simultaneously. GPUs sacrifice per-task speed for massive parallelism, running thousands of smaller processors at once. A CPU is efficient for varied tasks; a GPU is essential for the repetitive matrix math that powers AI models.

Q: What alternatives to evaporative cooling are data centers actually deploying?

A: Closed-loop liquid cooling is gaining traction: coolant circulates through cold plates attached directly to chips, sharply reducing water lost to evaporation. Microsoft has tested microfluidic systems with tiny channels on the back of the chip, reporting up to a 65% reduction in maximum temperature rise. Some facilities are siting near cold water sources or using geothermal systems that tap underground temperatures. These methods cut direct water use dramatically but require higher upfront capital.

Q: Why is BlackRock paying $40 billion for assets facing such serious constraints?

A: Infrastructure funds bet on scarcity value. If grid constraints and permitting delays make new capacity hard to build, existing facilities become more valuable. Aligned's roughly 5 gigawatts of capacity and established grid connections represent years of solved problems. The bet assumes AI demand will outpace new supply, letting owners charge premium rates as hyperscalers compete for scarce power allocations.

Q: How much more energy does an AI-powered search use compared to a traditional Google search?

A: Estimates vary widely, but researchers peg typical AI-enhanced queries at 5 to 10 times the energy of traditional keyword searches. A standard search uses about 0.3 watt-hours; a long AI response generating multiple paragraphs can hit 3 to 5 watt-hours, at or above the top of that range. Scale that across billions of daily queries and the grid impact becomes measurable, especially as companies embed AI into every search box and chat interface.
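To see how per-query watt-hours add up, here is a rough sketch using the 0.3 Wh and 3 to 5 Wh figures above. The volume of one billion queries a day is an assumption chosen for round numbers, not a reported figure, and the function name is illustrative.

```python
WH_PER_GWH = 1_000_000_000

# Assumption for illustration only: one billion queries per day.
QUERIES_PER_DAY = 1_000_000_000


def daily_gwh(queries_per_day: float, wh_per_query: float) -> float:
    """Daily energy in gigawatt-hours for a given per-query cost."""
    return queries_per_day * wh_per_query / WH_PER_GWH


keyword = daily_gwh(QUERIES_PER_DAY, 0.3)
ai_low = daily_gwh(QUERIES_PER_DAY, 3.0)
ai_high = daily_gwh(QUERIES_PER_DAY, 5.0)

print(f"Keyword search: {keyword:.1f} GWh per day")
print(f"AI responses:   {ai_low:.1f} to {ai_high:.1f} GWh per day")
```

At that assumed volume, the gap is a few gigawatt-hours every day, and it scales linearly with query count.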

Q: Is training AI models or running inference queries more energy-intensive?

A: Training a frontier model like GPT-4 consumed an estimated 50 gigawatt-hours in a single multi-month run—equivalent to powering 4,600 U.S. homes for a year. Individual inference queries use far less, but scale changes the math. Billions of daily queries, multi-turn conversations, and agentic workflows that run for minutes create sustained load. Both training and inference are now driving data center expansion.
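The comparison can be made concrete with the article's own estimates. The sketch below checks what the 4,600-home equivalence implies per household and how many long AI responses, at the 3 to 5 watt-hours cited earlier, would match one 50 GWh training run. The variable names are illustrative, and all inputs are estimates.

```python
TRAINING_GWH = 50            # estimated energy for one frontier training run
HOMES = 4_600                # homes-for-a-year equivalence cited above
KWH_PER_GWH = 1_000_000
WH_PER_GWH = 1_000_000_000

# Annual electricity per home implied by the equivalence.
kwh_per_home = TRAINING_GWH * KWH_PER_GWH / HOMES

# Number of long AI responses that add up to one training run.
queries_at_5_wh = TRAINING_GWH * WH_PER_GWH / 5
queries_at_3_wh = TRAINING_GWH * WH_PER_GWH / 3

print(f"Implied household use: {kwh_per_home:,.0f} kWh per year")
print(
    "Queries matching one training run: "
    f"{queries_at_5_wh / 1e9:.1f} to {queries_at_3_wh / 1e9:.1f} billion"
)
```

The implied figure of nearly 10,900 kWh per home is in line with typical U.S. household consumption. On these estimates, roughly 10 to 17 billion long responses use as much energy as the training run itself, a volume that a service handling billions of queries a day would reach within days or weeks.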