Apple Pays $2 Billion to Read Your Face

Apple acquired Israeli startup Q.AI for close to $2 billion, gaining facial micro-movement technology that decodes silent speech for future wearables.

Try this. Mouth a word, any word, without letting sound escape. Your jaw twitches. Something tightens near your throat. You can feel it if you pay attention, though nobody across the table would ever notice. A startup called Q.AI built cameras that notice. Tiny ones, designed to sit inside glasses or earbuds, tracking those muscle contractions and turning them into text. On Thursday, Apple paid close to $2 billion to own that trick.

The company announced Thursday that it acquired Q.AI, a secretive Israeli startup whose technology tracks facial skin micro-movements to interpret silent and whispered speech. The deal, reported by the Financial Times at close to $2 billion, ranks as Apple's second-largest acquisition ever. And it was built by someone Apple already knows well. The man behind Q.AI is Aviad Maizels. He walked out of Apple a few years back, after PrimeSense, the company he'd founded earlier, gave Cupertino the depth sensors that power Face ID. You sell Apple a company, watch it ship in a billion phones, and then what? You go home. You get bored. You call two friends.

The friends were Yonatan Wexler, who'd spent years at OrCam working on computer vision for the visually impaired, and Avi Barliya, an AI researcher. Q.AI came together in 2022. Kleiner Perkins backed it. Google Ventures backed it. Spark Capital and Aleph did too. The round totaled $24.5 million, all of it raised before anyone outside the company really knew what it was building. Then they went quiet. Wexler posted a single teaser on social media after launch: "I can't tell you anything yet about our product, but I bet it will leave you speechless."

The Breakdown

• Apple acquired Israeli startup Q.AI for close to $2 billion, its second-largest deal ever after Beats.

• Q.AI's technology reads facial skin micro-movements to decode silent and whispered speech without sound.

• Founder Aviad Maizels previously sold PrimeSense to Apple in 2013, which became the basis for Face ID.

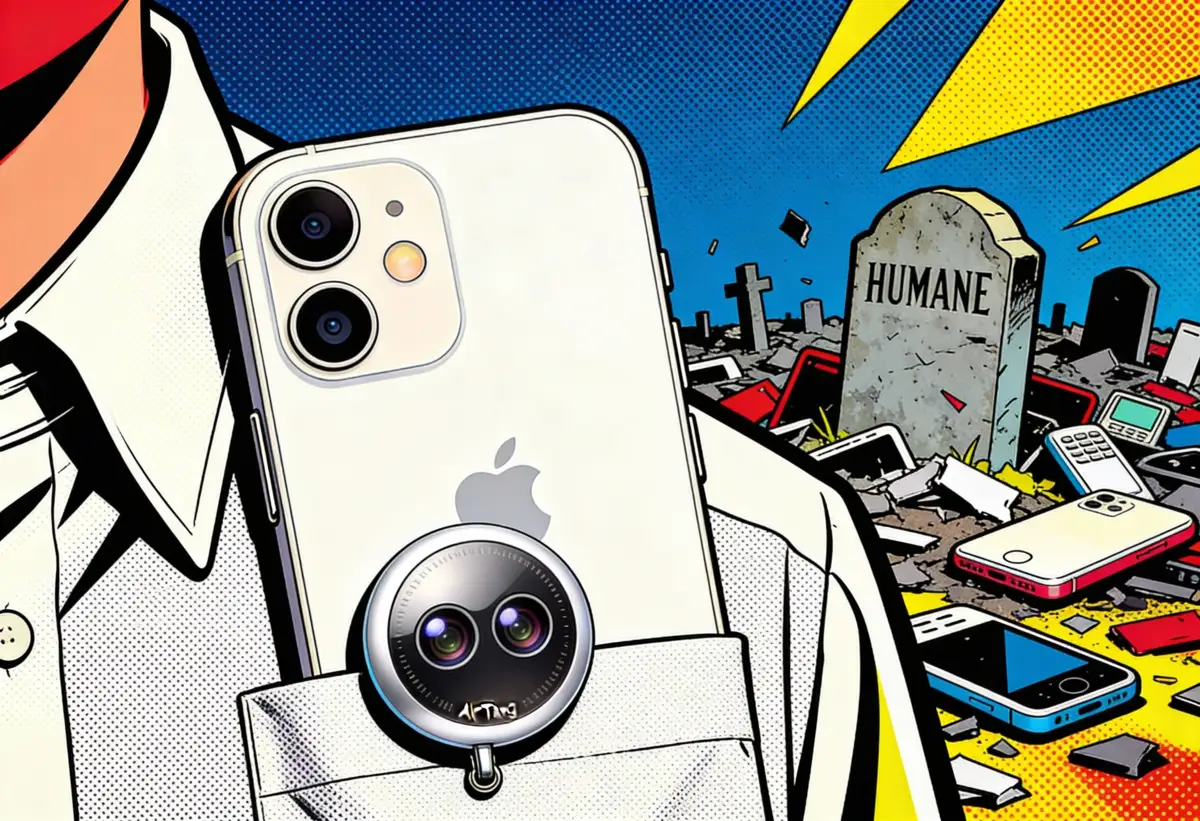

• The deal signals Apple's push into AI-powered wearables as Meta, Google, and OpenAI race to ship smart glasses.

Wexler's teaser turned out to be almost literal.

Q.AI's roughly 100 employees built machine learning models optimized to run on embedded hardware, not in the cloud. Job postings on the company's website, still visible Thursday, described an electro-optical module destined for a "mass-production-ready device" running a custom Linux distribution with a software stack written in C. On-device inference. Tight power budgets. The kind of engineering Apple does when it plans to ship millions of units.

Johny Srouji, Apple's senior vice president of hardware technologies and the executive who oversees the company's Israeli operations, confirmed the deal. "Q is an exceptional company, pioneering new and creative ways to use imaging and machine learning technologies," he told YNet News.

Strip away the marketing and you get a system that watches your jaw, your lips, the muscles around your throat. When you mouth the words "set a timer for five minutes" without producing any sound, Q.AI's models decode those micro-movements into text. The patent applications go further, describing technology that can assess heart rate, respiration, and emotional state from the same facial signals. Not through a separate sensor. Through the same camera already pointed at your face.

This is not voice recognition. Voice recognition needs sound waves. Q.AI needs optics and software that understands what your muscles are doing. MIT built something similar a few years ago, a project called AlterEgo that picked up neuromuscular signals in the face and throat while people silently articulated words to themselves. Turns out your speech muscles fire tiny electrical signals even when you only form words internally, no audible sound required. Several Hacker News commenters noted the overlap on Thursday, with one pointing to patent filings from Q.AI's founders that follow the same research lineage. The difference is that AlterEgo required electrodes stuck to the user's skin. Q.AI appears to do it optically, using cameras small enough to embed in consumer hardware.

For Apple, the applications split into two categories. First, input. Imagine wearing AirPods on a crowded train platform and asking Siri a question by mouthing it. No one around you hears anything. The response comes through your earbuds. You carry on a conversation with a machine while the person next to you reads their newspaper in peace.

Second, audio enhancement. Q.AI's technology can improve speech capture in noisy environments by reading facial movements to fill in what the microphone misses. Anyone who has tried dictating a text message on a windy street knows how badly current systems handle background noise. Cross-referencing audio input with visual data from facial micro-movements gives the device two channels of information instead of one. That redundancy can make speech recognition far more reliable in conditions where microphones alone fail.
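To make the two-channel idea concrete, here is a minimal sketch of one common way to combine two recognizers, a weighted blend of their log-probabilities. Nothing here comes from Q.AI or Apple: the `fuse` function, the `audio_weight` parameter, and every number are hypothetical, chosen only to show how a second signal can overrule a noisy first one.

```python
import math

def fuse(audio_probs, visual_probs, audio_weight=0.5):
    """Blend two per-word probability tables (weighted log-linear late fusion).

    audio_weight near 0 trusts the camera more; near 1 trusts the microphone.
    Assumes every probability is strictly positive, since log(0) is undefined.
    """
    shared = audio_probs.keys() & visual_probs.keys()
    scores = {
        word: audio_weight * math.log(audio_probs[word])
        + (1.0 - audio_weight) * math.log(visual_probs[word])
        for word in shared
    }
    # Renormalize so the fused scores sum to 1 again.
    total = sum(math.exp(s) for s in scores.values())
    return {word: math.exp(s) / total for word, s in scores.items()}

# Made-up numbers: wind muddies the microphone, the camera still sees the lips.
audio = {"timer": 0.40, "time": 0.45, "dinner": 0.15}
visual = {"timer": 0.70, "time": 0.20, "dinner": 0.10}

audio_only_guess = max(audio, key=audio.get)
fused = fuse(audio, visual, audio_weight=0.3)
fused_guess = max(fused, key=fused.get)
print(audio_only_guess, "->", fused_guess)
```

With these invented numbers, the microphone alone picks "time" while the fused guess lands on "timer". A real system would presumably set the weight from measured noise levels rather than a fixed constant, but that is an assumption, not something the company has described.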

Apple rarely buys companies at this price. Its acquisition history reads like a list of careful, quiet absorptions, the kind Wall Street barely notices. Five hundred million for Anobit's flash memory in 2011. Six hundred million for part of Dialog Semiconductor's business in 2018. A billion for Intel's modem business in 2019. The only deal larger than Q.AI was Beats at $3 billion in 2014, and that came with a celebrity brand, a streaming service, and Dr. Dre.

Two billion for a company with 100 employees, no commercial product, and $24.5 million in total funding tells you something about how anxious Apple feels. The price works out to roughly 80 times the capital Q.AI's backers put in, a number that reflects not what the company has built so far but what Apple believes it needs. Apple bought a lock for a door it hasn't built yet. Not a product, not revenue, but the right to own silent human-machine communication before anyone else does. And the race is not theoretical. Meta already moved millions of Ray-Ban smart glasses with AI baked in. Google and Snap both have glasses coming before the end of the year. OpenAI acquired Jony Ive's hardware startup IO and is reportedly developing a compact AI device for the second half of 2026.
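The multiple is easy to check from the figures above. This is back-of-the-envelope arithmetic only: it assumes the reported "close to $2 billion" is exactly $2 billion, and it says nothing about what any individual investor actually earned, since ownership stakes are not public.

```python
# Assumption: "close to $2 billion" is rounded to exactly $2 billion.
deal_price_usd = 2_000_000_000
total_funding_usd = 24_500_000   # funding figure reported in the article
employees = 100                  # "roughly 100 employees"

funding_multiple = deal_price_usd / total_funding_usd
price_per_employee = deal_price_usd / employees

print(f"{funding_multiple:.1f}x funding raised")
print(f"${price_per_employee / 1e6:.0f}M per employee")
```

On those assumptions the price is about 81.6 times the money raised, or about $20 million per employee.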

And while Cupertino was writing checks, its own AI ambitions kept tripping over themselves in public. Siri was going to get smart. Understand context, pull up apps for you, act like a real assistant. That version was supposed to ship in 2024. Got bumped to 2025. Slipped again. Investors grew restless. Some called publicly for Apple to make a big AI acquisition rather than continuing to fall behind. Earlier this month, the company struck a deal with Google to use Gemini models for parts of Apple Intelligence. For a company that built its identity on controlling every layer of the stack, from silicon to software, licensing a competitor's AI was not a partnership. It was a concession.

"We're very open to M&A that accelerates our roadmap," Tim Cook said last July. At the time, it sounded like boilerplate. Now it looks like a preview.

Q.AI's technology needs a camera on your face. Close, too. Mounted in glasses or clipped to headphones, running all day, catching those muscle contractions whenever you decide to mouth a command. Apple has been selling itself as the privacy company for years now, the one that keeps your data on your phone instead of shipping it to some server farm in Virginia. Q.AI's on-device inference models fit that narrative perfectly. No server farms involved. No audio recordings leaving your glasses. Your face, your device, your data.

But there is a gap between Face ID and what Q.AI promises. You pick up your phone, look at it, Face ID fires. A deliberate action with a clear start and end. Silent speech requires something different. The camera watches your facial muscles continuously, waiting for input, the way a microphone stays hot on a smart speaker. Except this microphone has eyes. One Hacker News commenter put it bluntly: "Sounds pretty invasive for privacy, if this was ever paired with smart glasses in public." Another raised a darker possibility. What happens when the cameras face outward? When the same technology that reads your lips starts reading the lips of the person across the coffee shop table?

Apple will almost certainly process everything locally on the device. That is the engineering direction Q.AI was already heading, given the on-device models and tight hardware integration visible in its job postings. But the lock cuts both ways. Sure, Apple owns it. Sure, nothing leaves your device. The data never touches a server. Fine. But that lock still guards a door that opens onto everything your face does while you wear the glasses. Your pulse. Your breathing. Whether you're stressed or calm or angry. Every micro-expression, captured and processed on a chip sitting two inches from your eyeball. On-device doesn't mean invisible. It means Apple gets to decide what counts as private.

The acquisition fits a pattern that has been forming for over a year. Apple bought Israeli 3D avatar startup TrueMeeting in 2025, along with generative AI company Pointable. It added live translation to AirPods. Remember Vision Pro? Three and a half grand for a headset that weighed more than most ski goggles. Apple found out, painfully, that the audience for face computers is mostly developers and the kind of early adopters who enjoy explaining their purchases at dinner parties. Sales were soft. The product looked like a technology demo that hadn't found its reason to exist.

Smart glasses rumored for later this year or early next need a different value proposition entirely. Something that justifies wearing a computer on your face beyond watching spatial video in your living room.

Silent speech could be that justification. A pair of glasses that lets you communicate with an AI assistant without anyone around you knowing, that understands what you want before you say it out loud, offers something genuinely different from pulling out a phone. Meta's Ray-Bans require you to talk. Google's glasses will likely require you to talk. Apple's version, if Q.AI's technology works, would let you mouth a command in a silent meeting and get a response piped directly to your ear. The glasses become a private channel between you and the machine, invisible to everyone else in the room.

Maizels seemed to understand the weight of the moment. "We combined advanced machine learning with physics to build something truly deep and unique," he said in a statement. "Becoming part of Apple opens extraordinary possibilities to push boundaries and bring these experiences to people everywhere."

His track record suggests he means it. PrimeSense gave Apple the sensor that changed how a billion people unlock their phones. If Q.AI's silent speech technology works at scale, it could change how those same people talk to their devices. Not with voice commands shouted across a room. With a thought, mouthed silently, read by a camera the size of a grain of rice, processed on a chip that fits behind your ear.

The glasses on Srouji's desk in Herzliya are not a product yet. But two billion dollars says they will be.

Q: What does Q.AI's technology actually do?

A: Q.AI built machine learning models that track facial skin micro-movements to interpret mouthed or whispered words. Using small cameras embedded in devices like glasses or earbuds, the system decodes jaw, lip, and throat contractions into text without requiring audible speech. Patents also describe health monitoring capabilities including heart rate and respiration tracking.

Q: How much did Apple pay for Q.AI and how does it compare to past deals?

A: The Financial Times reported the deal at close to $2 billion, making it Apple's second-largest acquisition ever. Only Beats at $3 billion in 2014 was bigger. For context, Q.AI had raised just $24.5 million in venture funding and had roughly 100 employees with no commercial product on the market.

Q: Who is Aviad Maizels and why does his track record matter?

A: Maizels founded PrimeSense, which Apple acquired in 2013 for roughly $350 million. That technology became the foundation for Face ID, shipping in over a billion iPhones. His return to Apple with Q.AI marks the rare case of a founder selling two companies to the same buyer, both centered on facial sensing technology.

Q: How does silent speech differ from voice recognition?

A: Voice recognition requires sound waves captured by a microphone. Silent speech reads muscle movements optically, using cameras to detect contractions in the jaw, lips, and throat when a person mouths words without making noise. MIT's AlterEgo project demonstrated similar principles using electrodes, but Q.AI appears to achieve it with cameras small enough for consumer hardware.

Q: What are the privacy concerns with always-on facial monitoring?

A: Unlike Face ID, which activates only when you pick up your phone, silent speech requires continuous camera monitoring of facial muscles. While Apple will likely process data on-device rather than in the cloud, the system still captures micro-expressions, emotional states, and biometric data throughout the day. Critics have raised concerns about outward-facing cameras potentially reading other people's facial movements.
